We’re in the middle of a vast technological revolution, one that is transforming life as profoundly as the Industrial Revolution did in the 19th century. Deep learning models are changing nearly every aspect of our lives.

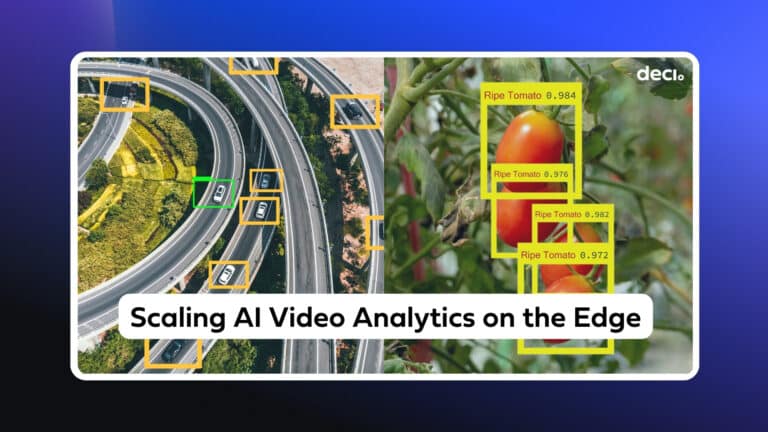

It’s hard to overstate how vital deep learning is to emerging technologies. Deep learning (DL) models, for example, are at the core of visual recognition tasks such as object classification, detection, and tracking; pixel-level semantic segmentation; and the recognition of visual relationships in images and videos. These tasks underpin capabilities such as autonomous driving, security control and monitoring, automated shopping like Amazon Go, medical diagnostics, and more.

In the words of Stanford professor and Coursera co-founder Andrew Ng, AI is the new electricity, changing entire industries and creating new possibilities:

“Just as electricity transformed almost everything 100 years ago, today I actually have a hard time thinking of an industry that I don’t think AI will transform in the next several years.”

Deep Learning Relies on Costly Hardware, Training Sets, and Expertise

Today, thousands of technology ventures rely on this new electricity. Most of them are startups investing substantial resources in building deep neural models. This process requires considerable expertise and involves multiple steps, including model design, training, quality testing, deployment, ongoing maintenance, and periodic retraining and revision.

Every startup wants high-quality models that are fast, accurate, and reliable. But creating and deploying these models can be both expensive and time-consuming. If the model must be trained on very large datasets or run on a small device with limited memory, costs rise and timelines stretch.

Once a model has been trained, data scientists can build an application around it and put it into production. For example, a bank might create an application that recognizes customers in images from a security camera feed. Running the trained model on each new image is called inference. The bank wants inference to happen as quickly as possible, which means reducing latency (the time between receiving an image and returning a prediction). It also wants to keep energy consumption to a minimum to reduce costs.
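To make latency concrete, here is a minimal sketch of how one might time single-image inference in Python. `toy_model` is a hypothetical stand-in for a trained model (it is not a real recognition model), and `measure_latency` is an illustrative helper, not part of any library. It runs a few untimed warm-up calls, then reports median and 95th-percentile latency, because for real-time use the slow tail often matters as much as the average.

```python
import math
import statistics
import time
from typing import Callable, Dict, List


def measure_latency(infer: Callable[[object], object],
                    sample: object,
                    warmup: int = 3,
                    runs: int = 50) -> Dict[str, float]:
    """Time repeated single-input inference calls; return latency stats in ms.

    `infer` stands in for any model's predict/forward call (hypothetical here).
    """
    # Untimed warm-up calls let caches and lazy initialization settle first.
    for _ in range(warmup):
        infer(sample)

    timings_ms: List[float] = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(sample)
        timings_ms.append((time.perf_counter() - start) * 1000.0)

    timings_ms.sort()
    # Nearest-rank 95th percentile: at least 95% of runs were this fast or faster.
    p95_index = max(0, math.ceil(0.95 * runs) - 1)
    return {
        "median_ms": statistics.median(timings_ms),
        "p95_ms": timings_ms[p95_index],
        "mean_ms": statistics.fmean(timings_ms),
    }


def toy_model(image: List[float]) -> float:
    """Hypothetical stand-in model: one linear 'layer' over a flattened image."""
    weights = [0.001 * (i % 7) for i in range(len(image))]
    return sum(w * x for w, x in zip(weights, image))


if __name__ == "__main__":
    image = [float(i % 255) for i in range(64 * 64)]  # fake 64x64 grayscale frame
    print(measure_latency(toy_model, image))
```

A larger model (more weights per call) would show up directly as a higher median; the same harness can compare an original model against an accelerated one on the same hardware.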

Latency and energy consumption are tightly related, and both depend heavily on the model’s architectural complexity: the more complex the model, the higher the latency. For a given model, bringing latency down means supplying more compute power, which increases energy consumption and, in turn, costs. But cost is not the only consideration. Some applications, such as the imaging systems in autonomous cars, require real-time inference; if latency is too high, the model cannot be used for them at all.

Latency and Costs Need to Improve in Order to Advance Deep Learning

DL models require a combination of specialized hardware and software frameworks to meet their performance requirements. Today, most DL models are trained and run on graphics processing units (GPUs), which spread the computation across thousands of small cores working in parallel.

A number of other DL hardware accelerators are on the market or under development, including Google’s tensor processing unit (TPU); Intel’s Goya, Movidius, Nervana, and Mobileye chips; and offerings from AWS, Cerebras, Graphcore, Groq, Gyrfalcon, Horizon Robotics, Mythic, Xilinx, Nvidia, and AMD.

Deep learning engineers can also use software compression techniques to speed up inference. Two of the most popular are weight pruning and quantization, which cut latency effectively but often reduce accuracy in exchange. Ideally, an accelerator should reduce latency without sacrificing accuracy.
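To show where the accuracy cost of these techniques comes from, here is a minimal, framework-free sketch of two textbook variants: unstructured magnitude pruning (zero out the smallest weights) and symmetric per-tensor int8 quantization (store each weight as a small integer times a shared scale). The function names are hypothetical, and production frameworks ship their own, more elaborate implementations; this is only an illustration of the general idea.

```python
from typing import List, Tuple


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    k = int(len(weights) * sparsity)  # number of weights to remove
    # Indices sorted from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:k])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w ~= scale * q, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float weights."""
    return [scale * v for v in q]


if __name__ == "__main__":
    w = [0.8, -0.05, 0.3, -0.6, 0.01, 0.12]
    print(magnitude_prune(w, 0.5))  # → [0.8, 0.0, 0.3, -0.6, 0.0, 0.0]
    q, scale = quantize_int8(w)
    err = max(abs(a - b) for a, b in zip(w, dequantize(q, scale)))
    print(q, scale, err)  # rounding error is at most scale / 2 per weight
```

Both transforms make the model cheaper to run (fewer nonzero weights, smaller integer arithmetic), but the zeroed weights and rounding error change the model's outputs, which is the accuracy trade-off described above.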

AutoNAC: The New Accelerator

To that end, we created a new acceleration method called Automated Neural Architecture Construction (AutoNAC). AutoNAC uses a constrained optimization process that leverages information embedded in the original model to reduce latency while preserving accuracy. It creates a new model, made up of a collection of smaller, related models, whose overall functionality closely approximates that of the original. The new model is built from a fresh architecture space optimized for the target hardware.

AutoNAC is a data-dependent algorithmic accelerator that complements existing compression techniques such as pruning and quantization. It takes as input a trained deep neural network, a dataset, and access to the target inference platform. AutoNAC then redesigns the network to derive an optimized architecture whose latency is 2 to 10 times lower than the original’s, without compromising prediction accuracy. These optimizations can immediately enable real-time applications and considerably reduce operating costs for cloud deployments.

Learn more about AutoNAC in our white paper or book a demo with one of our experts.