The annual Computer Vision and Pattern Recognition (CVPR) Conference, organized by the IEEE/CVF, is regarded as one of the most important conferences in the field. From academia to industry, many AI teams choose to unveil breakthroughs during the event.

In addition to the main conference, CVPR organizes several co-located workshops and short courses. This year, close to a hundred workshops are packed into just two days, and you might be wondering which ones to attend.

The Deci team handpicked the following workshops to ensure that you don’t miss out on the must-attend ones.

1. Embedded Vision Workshop

What is it about and why attend:

Embedded vision combines efficient learning models with fast computer vision and pattern recognition algorithms. It is an active sub-field that tackles many areas of robotics and intelligent systems that are growing in popularity and usage today. However, it also poses challenges. The Embedded Vision Workshop, now in its 19th edition since 2005, brings participants together to discuss and address the difficulty of comprehending complex visual scenes within the strict computational limits that real-time applications impose on embedded devices.

Who are the speakers:

- Dr. Lars Ebbesson, NORCE

- Mai Xu, Beihang University

- Rahul Sukthankar, Google Research

2. Workshop on Neural Architecture Search

What is it about and why attend:

Neural Architecture Search (NAS) automates the design of deep neural network architectures, achieving results that outperform manually designed models in many computer vision tasks. The first goal of the Workshop on Neural Architecture Search is to gather the latest research in the areas of automatic architecture search, optimization, and related techniques. The second goal is to benchmark lightweight NAS in a systematic and realistic way. In addition, there are three competition tracks where participants can propose novel solutions to advance the state of the art.

Who are the speakers:

- Frank Hutter, University of Freiburg and the Bosch Center for Artificial Intelligence

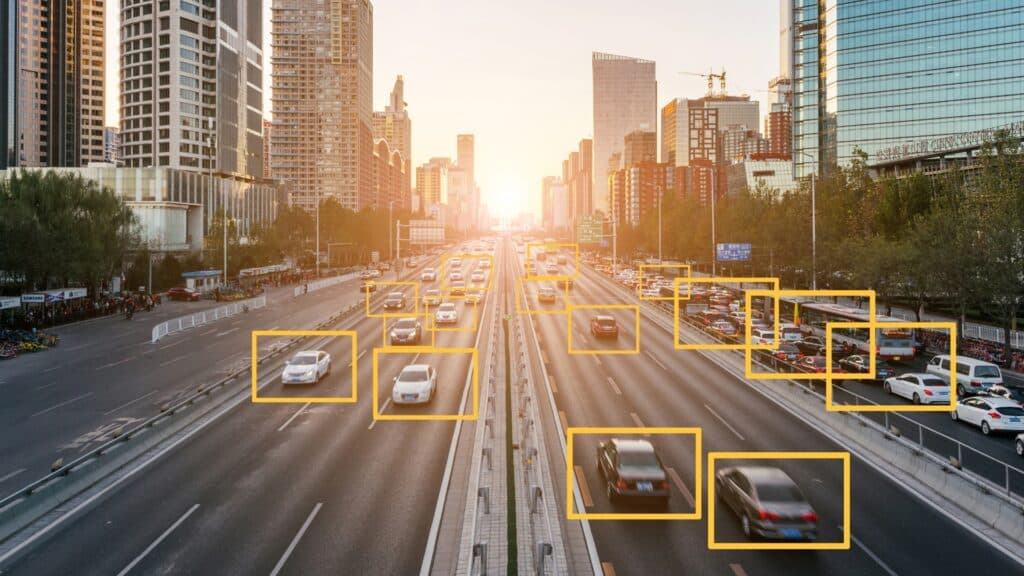

3. Workshop on Autonomous Driving

What is it about and why attend:

Autonomous driving is not only one of the most promising applications of computer vision but also a fast-evolving field. The CVPR 2023 Workshop on Autonomous Driving (WAD) aims to discuss the latest advances in perception for autonomous driving. A full-day event, the workshop puts the spotlight on the current state of the art, limitations, and future directions in the autonomous driving space.

There are papers on a variety of topics, including vision-based advanced driving assistance systems, deep learning and image analysis techniques, in-vehicle technology, and more. The workshop also features three challenges from Waymo and Argoverse, as well as one with the BDD100K dataset.

Who are the speakers:

- Sanja Fidler, University of Toronto/NVIDIA

- Hang Zhao, Tsinghua University

- Andreas Wendel, Kodiak Robotics

- Chelsea Finn, Stanford University/Google

- Ashok Elluswamy, Tesla

- Chen Wu, Waymo

- Jiyang Gao, Momenta

4. End-to-End Autonomous Driving: Perception, Prediction, Planning, and Simulation

What is it about and why attend:

From 2D and 3D perception, prediction, and planning to scene simulation, a variety of computer vision capabilities enable the successful implementation of autonomous driving. Currently, however, the seamless integration of these functions is far from reality.

The End-to-End Autonomous Driving: Perception, Prediction, Planning, and Simulation workshop seeks to tackle this issue by providing a platform that encourages holistic system-aware understanding and facilitates collaboration among these different sub-fields. It aims to pave the way for future advancements in autonomous driving. Topics include 3D object detection, HD map construction, environment simulation, and more.

Who are the speakers:

- Angjoo Kanazawa, University of California, Berkeley

- Philipp Krähenbühl, University of Texas at Austin

- Jia Deng, Princeton University

- Matthias Niessner, Technical University of Munich

- Kashyap Chitta, Max Planck Institute/University of Tübingen

- Alex Kendall, Wayve

- Pei Sun, Waymo

- Nikolai Smolyanskiy, NVIDIA

- Bolei Zhou, University of California, Los Angeles

- Raquel Urtasun, University of Toronto & Waabi

- Daniel Cremers, Technical University of Munich

5. Vision-based InduStrial InspectiON

What is it about and why attend:

Working on a vision-based industrial inspection project? Through a series of keynote talks, technical presentations, and data challenges, the VISION workshop aims to create a space for sharing innovations and challenges in the industrial inspection computer-vision use case. Its goals are to facilitate collaboration among researchers from diverse research domains and foster connections between recent research advancements and current requirements in industrial applications.

Who are the speakers:

- Dr. Bianca Maria Colosimo, Politecnico di Milano

- Dr. Tzyy-Shuh Chang, OG Technologies

- Dr. Jianjun Shi, Georgia Institute of Technology

- Dr. Leonid Sigal, University of British Columbia

6. Computer Vision in the Built Environment

What is it about and why attend:

The Computer Vision in the Built Environment workshop connects the domains of Architecture, Engineering, and Construction (AEC) with that of Computer Vision by creating a shared platform for collaboration and identifying common research areas. Its focus is to examine the current semantic state of built environments and the transformations they undergo over time. The goal is for participants to gain a deeper understanding of AEC-FM (Facility Management) and the various real-world problems that, if addressed, could significantly impact the industry and enhance the overall quality of life worldwide.

Who are the speakers:

- Avideh Zakhor, UC Berkeley

- Pingbo Tang, CMU

- Manmohan Chandraker, UC San Diego

- Mani Golparvar-Fard, UIUC

- Despoina Paschalidou, Stanford

- Fernanda Leite, UT Austin

7. Generative Models for Computer Vision

What is it about and why attend:

The Generative Models for Computer Vision workshop explores the potential benefits of incorporating advancements in generative image modeling into visual recognition tasks. It aims to address the question of how visual recognition can leverage recent progress in generative modeling techniques such as generative adversarial networks, auto-regressive models, neural fields, and diffusion models.

Despite these advancements, the adoption of generative models for visual recognition tasks has been limited. By bringing together experts from the fields of image synthesis and computer vision, the workshop aims to foster discussions and advancements at the intersection of these two subfields.

Who are the speakers:

- Phillip Isola, MIT

- Angjoo Kanazawa, UC Berkeley

- Yi Ma, UC Berkeley

- Gordon Wetzstein, Stanford University

- Shubham Tulsiani, CMU

- Ben Mildenhall, Google Research

- Angela Dai, TUM

- Björn Ommer, LMU

- Andrea Tagliasacchi, SFU

- Alan Yuille, JHU

8. Workshop on Open-Domain Reasoning Under Multi-Modal Settings

What is it about and why attend:

The connection between vision and language (V+L) is now an integral part of AI, with direct use cases in robotics, graphics, cybersecurity, and HCI. Because the link between vision and language is much more complex than simple images, the Open-Domain Reasoning Under Multi-Modal Settings workshop provides a platform for advancing multimodal V+L topics, with special emphasis on reasoning capabilities. Featuring expert talks and a panel discussion, it also aims to discuss trends, challenges, and the differing perspectives of researchers from the related domains of NLP, vision, machine learning, and robotics.

Who are the speakers:

- Kristen Grauman, University of Texas at Austin

- Jiajun Wu, Stanford University

- Alane Suhr, Allen Institute for AI

- Jean-Baptiste Alayrac, DeepMind

- Angel Xuan Chang, Simon Fraser University

9. Retail Vision Workshop

What is it about and why attend:

Computer vision and machine learning advancements have significantly disrupted the retail industry, transforming both traditional brick-and-mortar and online shopping experiences. But in both the physical and digital realms, numerous obstacles remain. These challenges include accurately detecting products in crowded store displays and effectively integrating textual captions with product search and recognition. The primary objective of the Retail Vision Workshop is to showcase the progress made in tackling these difficulties and to foster a community dedicated to retail computer vision.

Who are the speakers:

- Raffay Hamid, Amazon

- Antonino Furnari, University of Catania

- Emanuele Frontoni, VRAI Lab – University of Macerata

- Weilin Huang, Alibaba

- Cristina Mata, Stony Brook University

10. Vision for All Seasons: Adverse Weather and Lighting Conditions

What is it about and why attend:

Numerous outdoor applications, including autonomous cars and surveillance systems, must function effectively even in challenging conditions. However, adverse weather and lighting conditions such as fog, rain, snow, low light, nighttime, glare, and shadows pose visibility challenges for the sensors that drive automated systems. Even the most advanced algorithms experience significant performance degradation in such conditions.

The main goal of the Vision for All Seasons: Adverse Weather and Lighting Conditions workshop is to encourage research focused on developing resilient vision algorithms capable of handling adverse weather and lighting conditions effectively.

Who are the speakers:

- Daniel Cremers, TU Munich

- Judy Hoffman, Georgia Tech

- Felix Heide, Princeton University

- Adam Kortylewski, MPI for Informatics

- Werner Ritter, Mercedes-Benz AG

- Patrick Pérez, Valeo AI

- Robby Tan, NUS

- Eren Erdal Aksoy, Halmstad University

- Tim Barfoot, University of Toronto

11. New Frontiers in Visual Language Reasoning: Compositionality, Prompts, and Causality

What is it about and why attend:

Visual Language Pre-training (VLP) models have significantly transformed some fundamental principles of visual language reasoning (VLR). But several challenges still hinder these models from emulating human-like “thinking,” including:

- How to reason about the world by breaking it into parts (compositionality)

- How to generalize to novel concepts given only a glimpse of demonstrations in context (prompts)

- How to debias visual language reasoning by imagining what would have happened in counterfactual scenarios (causality)

The New Frontiers in Visual Language Reasoning: Compositionality, Prompts, and Causality workshop offers a valuable platform to explore the latest technological advancements in these three areas. Additionally, it features two multi-modal reasoning challenges centered around cross-modal math-word calculation and proving problems.

Who are the speakers:

- Elias Bareinboim, Columbia University

- Ranjay Krishna, University of Washington

- Hanwang Zhang, Nanyang Technological University

- Roi Reichart, Technion

- Zhiyuan Liu, Tsinghua University

- Ziwei Liu, Nanyang Technological University

- Zeynep Akata, EML Tübingen

- Anna Rohrbach, UC Berkeley

- Alan Yuille, Johns Hopkins University

12. International Workshop on Computer Vision for Physiological Measurement

What is it about and why attend:

The field of capturing physiological signals from the human face and body through cameras has gained significant momentum in the past ten years. It holds immense potential in various domains, including health monitoring, the development of imaging techniques to assess vital signs, and the analysis of facial expressions, emotions, and other contextual information from images and videos.

The International Workshop on Computer Vision for Physiological Measurement brings together researchers actively involved in this field, as well as individuals who can benefit from or contribute to it. It also targets practitioners interested in related applications, ranging from biometric security to affective computing.

Who are the speakers:

- Dina Katabi, MIT

- Ioannis Pavlidis, University of Houston

- Jun Luo, Nanyang Technological University

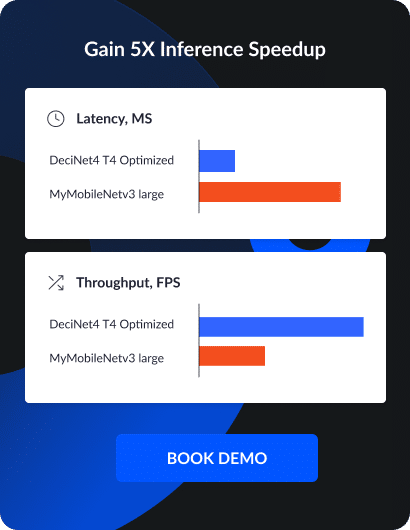

Did any of the workshops spark your interest? You can see the full list of sessions on the CVPR website. Make sure to also drop by the Deci booth #1517, especially if you want to learn more about NAS and how it can help you build better deep learning models.