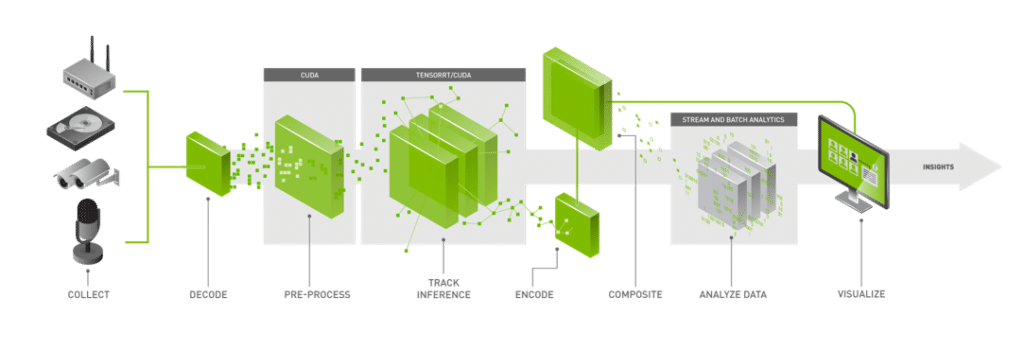

Intelligent video analytics has become an integral part of a wide range of important applications. Whether reducing congestion at traffic intersections, monitoring security cameras for threats, or tracking anomalies in industrial settings, the ability to process and analyze video in real time is now essential. While these videos provide invaluable insights, processing them also poses significant technical challenges.

A primary challenge is moving data through the application efficiently, in the appropriate formats and on the available hardware accelerators. At the same time, the application must apply the right models and collect analytics.

These are exactly the challenges NVIDIA had in mind when it introduced the DeepStream SDK™. DeepStream is a complete streaming analytics toolkit for AI-based media processing applications. It is designed to work with a wide variety of computer vision models, including object detection, image classification, and instance segmentation.

If you’re a developer and not sure whether NVIDIA DeepStream is right for your application, this blog is for you. We start with an overview of GStreamer, the open source framework that DeepStream extends. We then discuss DeepStream, including some of its most popular plugins, and illustrate how they can be linked together.

A bit about GStreamer

GStreamer is a well-known open source framework for multimedia processing. You can use GStreamer to build complex cross-platform applications for multimedia format conversions, audio filtering, video streaming, recording, editing, and more.

The beauty of the framework lies in its high-level API, which hides much of the complexity of working with multimedia data. This API has four main components:

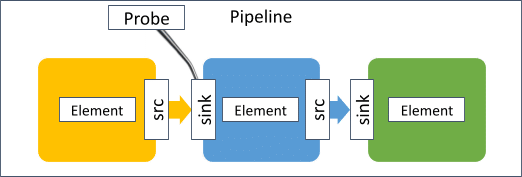

1. Elements

Elements are the basic building blocks of a GStreamer application. Some represent a source of data (e.g., a filesrc element that reads from a file “into” the application); these are called source elements. Others represent a destination for data (such as an autoaudiosink that feeds into a computer’s sound card); these are known as sink elements. Finally, elements may transform data (e.g., a videoconvert element that converts RGBA video into NV12 format).

2. Pads

GStreamer elements interface with each other through pads. Every element has at least one pad. A source element has one or more source pads, and a sink element has one or more sink pads. An element that transforms or multiplexes data has pads of both types. Linking two elements means connecting a source pad of one element to a sink pad of the next, and data flows downstream from source pads to sink pads.

3. Pipelines

A collection of elements chained together is called a pipeline. Usually, a GStreamer-based application contains a single pipeline with one or more inputs and outputs. Within the pipeline, GStreamer intelligently manages thread scheduling, minimal-copy data flow, event handling, and more.

4. Probes

Probes are callbacks that you register on a pad to run your own logic on the data passing through it. They let developers extend the capabilities of their pipeline: you can use them to collect analytics, react to certain events, or alter a stream dynamically based on certain parameters.
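To make these four components concrete, here is a minimal sketch using GStreamer’s Python bindings (PyGObject). It assumes GStreamer 1.x and PyGObject are installed. It uses videotestsrc and fakesink so that no input file or display is needed. The function names `build_description` and `run` are hypothetical. The pipeline mirrors the videoconvert example above: caps filters force RGBA on the converter’s input and NV12 on its output. A buffer probe registered on the converter’s source pad counts the buffers that pass through.

```python
"""Minimal GStreamer pipeline with a buffer probe (illustrative sketch).

Assumes GStreamer 1.x and PyGObject (the `gi` module) are installed.
"""


def build_description(num_buffers: int = 100) -> str:
    """Return a gst-launch style description: source -> transform -> sink.

    The caps filters force RGBA on the converter's sink pad and NV12 on
    its source pad, so videoconvert performs the RGBA -> NV12 conversion.
    """
    return (
        f"videotestsrc num-buffers={num_buffers} "
        "! video/x-raw,format=RGBA "
        "! videoconvert name=conv "
        "! video/x-raw,format=NV12 "
        "! fakesink"
    )


def run(num_buffers: int = 100) -> int:
    """Build and run the pipeline; return how many buffers the probe saw."""
    # Imported here so build_description() works without GStreamer installed.
    import gi

    gi.require_version("Gst", "1.0")
    from gi.repository import Gst

    Gst.init(None)
    # Pipeline: parse_launch creates and links all elements in one call.
    pipeline = Gst.parse_launch(build_description(num_buffers))

    # Pads: fetch the converter's static "src" pad (its output side).
    conv = pipeline.get_by_name("conv")
    src_pad = conv.get_static_pad("src")

    seen = {"buffers": 0}

    def count_buffers(pad, info, counter):
        # Probe: called for every buffer that leaves the converter.
        counter["buffers"] += 1
        return Gst.PadProbeReturn.OK  # let the buffer continue downstream

    src_pad.add_probe(Gst.PadProbeType.BUFFER, count_buffers, seen)

    # Start streaming and block until end-of-stream or an error.
    pipeline.set_state(Gst.State.PLAYING)
    bus = pipeline.get_bus()
    msg = bus.timed_pop_filtered(
        Gst.CLOCK_TIME_NONE, Gst.MessageType.EOS | Gst.MessageType.ERROR
    )
    pipeline.set_state(Gst.State.NULL)
    if msg is not None and msg.type == Gst.MessageType.ERROR:
        err, _debug = msg.parse_error()
        raise RuntimeError(err.message)
    return seen["buffers"]
```

Calling `run()` starts the pipeline and blocks until end-of-stream or an error. It then returns the number of buffers the probe observed. Because `gi` is imported inside `run()`, the description string can be inspected without GStreamer installed.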

A final note: GStreamer provides an interface that you can implement to create your own element types, packaged as “plugins.” Essentially, if no existing element performs the transformation or data handling you need, you can implement one!

If you code in Python, DeepStream provides Python bindings for customizing your applications and adding new features to them. You can also use the DeepStream 6.0 Graph Composer to build your pipeline visually. Keep in mind, however, that some of the more advanced GStreamer features might not be available in Graph Composer. DeepStream also provides Docker images that contain the entire SDK for quick deployment on Jetson devices or other NVIDIA GPU environments.

What you can do with DeepStream

With this background on GStreamer, it’s easier to understand what the DeepStream SDK really is: a collection of GStreamer plugins that NVIDIA implemented using low-level libraries optimized for its hardware.

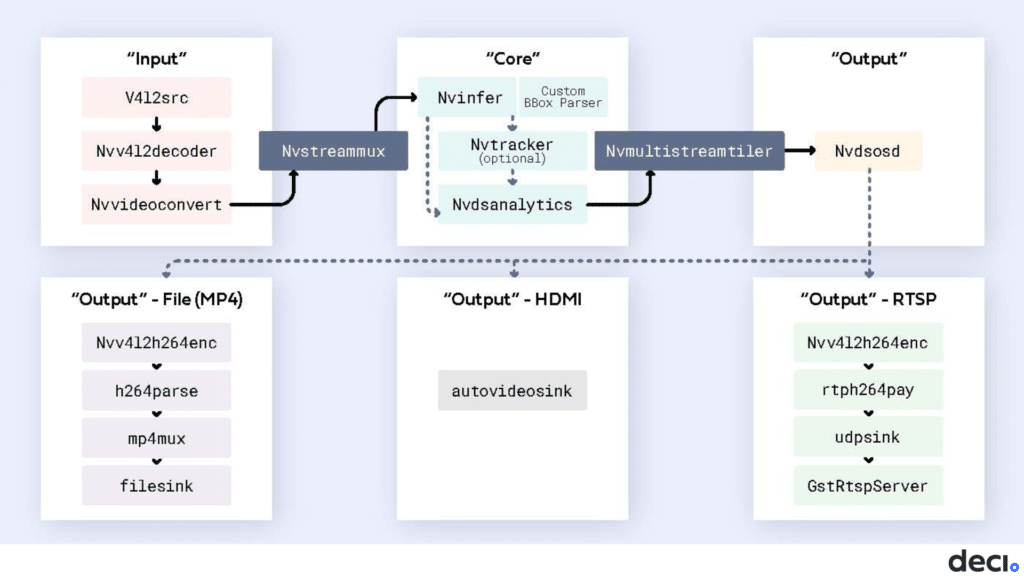

If you understood the previous sentence, you’re on your way to putting together an efficient NVIDIA-based video processing DeepStream application. Here are a few examples of useful plugins that NVIDIA prepared and packaged as part of the DeepStream SDK:

1. Nvv4l2decoder

This plugin’s inputs are generally encoded camera streams (H264, H265, JPEG, MJPEG). Its output is a stream of NV12 buffers. These are typically batched together by nvstreammux and then passed to the inference engine, nvinfer. This plugin is based on the GStreamer v4l2videodec plugin, but it allows you to use hardware-accelerated decoding on Jetson devices.

2. Nvstreammux

The main objective of the nvstreammux plugin is to form batches of frames from one or more input streams. These batches feed into the pipeline’s inference engine, the nvinfer plugin.

The nvstreammux plugin is also in charge of rescaling the input to the desired output size. For example, it can downscale a 720p (1280×720) frame for a 640×640 model. You should carefully configure which hardware performs the scaling: CPU, GPU, or VIC (the Video Image Compositor on Jetson devices).
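The 720p-to-640×640 example hides a design choice: the frame can either be stretched to the new size, or scaled uniformly and then padded. The plain-Python sketch below computes both geometries. The aspect-preserving case is what nvstreammux’s `enable-padding` property is for (padding with black borders). The names `Fit`, `fit_frame`, and `to_source_coords` are hypothetical. The code models the arithmetic only, not the plugin’s implementation, and it assumes the scaled content sits at the top-left corner of the padded output.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Fit:
    scale_x: float  # horizontal scale factor applied to the source frame
    scale_y: float  # vertical scale factor applied to the source frame
    out_w: int      # width of the scaled image content (before padding)
    out_h: int      # height of the scaled image content (before padding)
    pad_w: int      # total horizontal padding needed to reach dst_w
    pad_h: int      # total vertical padding needed to reach dst_h


def fit_frame(src_w: int, src_h: int, dst_w: int, dst_h: int,
              keep_aspect: bool) -> Fit:
    """Compute how a src_w x src_h frame maps onto a dst_w x dst_h output."""
    if not keep_aspect:
        # Stretch: each axis is scaled independently, so no padding is needed.
        return Fit(dst_w / src_w, dst_h / src_h, dst_w, dst_h, 0, 0)
    # Keep aspect ratio: use the smaller factor so the whole frame fits.
    s = min(dst_w / src_w, dst_h / src_h)
    out_w, out_h = round(src_w * s), round(src_h * s)
    return Fit(s, s, out_w, out_h, dst_w - out_w, dst_h - out_h)


def to_source_coords(x: float, y: float, fit: Fit) -> Tuple[float, float]:
    """Map a point in the scaled image back to source-frame pixels.

    Assumes (hypothetically) that the scaled content starts at the
    output's top-left corner, i.e. any padding is on the right/bottom.
    """
    return x / fit.scale_x, y / fit.scale_y
```

For the 720p example, `fit_frame(1280, 720, 640, 640, keep_aspect=True)` yields a 640×360 image plus 280 rows of padding. By contrast, `keep_aspect=False` scales the width by 0.5 but the height by about 0.89, which distorts object shapes. Which option suits you may depend on how your model was trained.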

3. Nvinfer

The nvinfer plugin lies at the heart of an AI video processing pipeline. It performs the actual inference on the input data, using NVIDIA TensorRT as its backend. If the system has multiple GPUs, nvinfer lets you choose which one to use.

It also lets you run models on the Deep Learning Accelerators (DLAs) available on some Jetson devices. What’s more, nvinfer can transform the batch of input buffers before it is fed into the model, for example by converting color formats and normalizing pixel values.
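In practice, nvinfer is configured mainly through a text file passed via its `config-file-path` property. The sketch below uses Python’s `configparser` to render an illustrative `[property]` group. It covers device selection (`gpu-id`, `enable-dla`, `use-dla-core`) and preprocessing (`net-scale-factor`, `offsets`, `model-color-format`). It also reproduces nvinfer’s documented per-channel normalization, y = net-scale-factor × (x − offset). The helper names and default values are assumptions. A working config also needs model-specific keys (model files, class count, and so on) that are deliberately left out.

```python
import configparser
import io
from typing import List, Sequence


def nvinfer_property_group(gpu_id: int = 0, use_dla: bool = False,
                           dla_core: int = 0,
                           net_scale_factor: float = 1.0 / 255.0,
                           offsets: Sequence[float] = (0.0, 0.0, 0.0),
                           model_color_format: int = 0) -> str:
    """Render an illustrative [property] group for an nvinfer config file.

    Only device-selection and preprocessing keys are shown; model-specific
    keys that a real config requires are intentionally omitted.
    """
    cfg = configparser.ConfigParser()
    cfg["property"] = {
        "gpu-id": str(gpu_id),                          # GPU used for inference
        "enable-dla": "1" if use_dla else "0",          # run on a DLA instead
        "use-dla-core": str(dla_core),                  # which DLA core to use
        "net-scale-factor": repr(net_scale_factor),     # multiplier in y = s*(x-m)
        "offsets": ";".join(str(o) for o in offsets),   # per-channel means m
        "model-color-format": str(model_color_format),  # 0=RGB, 1=BGR, 2=GRAY
    }
    buf = io.StringIO()
    # DeepStream sample configs use key=value without surrounding spaces.
    cfg.write(buf, space_around_delimiters=False)
    return buf.getvalue()


def normalize(pixels: Sequence[float], offsets: Sequence[float],
              net_scale_factor: float) -> List[float]:
    """Apply y = net_scale_factor * (x - offset) channel by channel."""
    return [net_scale_factor * (x - m) for x, m in zip(pixels, offsets)]
```

With the default `net-scale-factor` of 1/255 and zero offsets, pixel values in 0 to 255 map to roughly 0 to 1. Models trained with mean subtraction would instead set per-channel `offsets`.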

4. Nvdsanalytics

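This plugin lets you perform or accumulate analytics on the metadata that nvinfer attaches to the image buffers. Examples include region-of-interest (ROI) filtering, line crossing, overcrowding detection, and direction detection.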
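To illustrate what “analytics on metadata” can mean, here is a plain-Python sketch of an ROI rule. It counts the objects of a given class whose bounding-box bottom-center falls inside a polygon. It does not use nvdsanalytics or its API; the plugin is driven by its own config file and attaches its results as metadata. The `Box` type, the bottom-center anchor point, and the function names are hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, Sequence, Tuple

Point = Tuple[float, float]


@dataclass(frozen=True)
class Box:
    """Hypothetical stand-in for a detection's bounding box (pixels)."""
    left: float
    top: float
    width: float
    height: float
    label: str


def point_in_polygon(p: Point, polygon: Sequence[Point]) -> bool:
    """Ray casting: toggle 'inside' for each edge crossed by a ray to the right."""
    x, y = p
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can be crossed
        # (this also guarantees y2 != y1 in the division below).
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def count_in_roi(boxes: Iterable[Box], roi: Sequence[Point], label: str) -> int:
    """Count boxes with the given label whose bottom-center lies in the ROI."""
    count = 0
    for b in boxes:
        anchor = (b.left + b.width / 2, b.top + b.height)  # bottom-center
        if b.label == label and point_in_polygon(anchor, roi):
            count += 1
    return count
```

Usage: with a square ROI `[(0, 0), (100, 0), (100, 100), (0, 100)]`, a car box at `Box(40, 20, 20, 30, "car")` is counted because its anchor is `(50, 50)`. A car box outside the square, or a box with a different label, is not counted.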

5. Nvmultistreamtiler

Given a batch of buffers, this plugin composites all the frames into a single 2D tiled image. The tiler uses the unique ID assigned to each input stream to place that stream’s buffer in a specific “tile” of the 2D image.
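Placing a stream’s buffer in its tile comes down to grid arithmetic. The sketch below assumes a row-major layout with equal-sized tiles: source 0 sits in the top-left tile, and each row fills left to right. The grid and output size correspond to nvmultistreamtiler’s `rows`, `columns`, `width`, and `height` properties. The function names and the near-square grid heuristic are hypothetical.

```python
import math
from typing import Tuple


def grid_for(num_sources: int) -> Tuple[int, int]:
    """Pick a near-square (rows, columns) grid with room for every source."""
    if num_sources < 1:
        raise ValueError("need at least one source")
    columns = math.ceil(math.sqrt(num_sources))
    rows = math.ceil(num_sources / columns)
    return rows, columns


def tile_rect(source_id: int, rows: int, columns: int,
              out_w: int, out_h: int) -> Tuple[int, int, int, int]:
    """Return (left, top, width, height) of a source's tile, row-major order."""
    if not 0 <= source_id < rows * columns:
        raise ValueError("source_id does not fit in the grid")
    tile_w, tile_h = out_w // columns, out_h // rows
    row, col = divmod(source_id, columns)  # row-major placement (assumed)
    return col * tile_w, row * tile_h, tile_w, tile_h
```

For example, with four sources on a 2×2 grid and a 1280×720 output, `tile_rect(3, 2, 2, 1280, 720)` gives the bottom-right tile, `(640, 360, 640, 360)`.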

6. Nvdsosd

This plugin draws the bounding boxes, text, and other DeepStream metadata that need to be rendered onto the frames. Surprisingly, this drawing and rendering can quickly become a pipeline’s bottleneck.
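To see how these plugins link together, the sketch below assembles a gst-launch-style description for one or more raw H.264 elementary-stream files. Each file gets its own filesrc ! h264parse ! nvv4l2decoder branch feeding a request pad (`mux.sink_0`, `mux.sink_1`, and so on) on nvstreammux. The batch then flows through nvinfer, nvdsanalytics, nvmultistreamtiler, nvvideoconvert, and nvdsosd. File and config names, resolutions, the 40 ms push timeout, and the final `fakesink` are placeholder assumptions. DeepStream’s sample apps likewise place nvvideoconvert before the OSD to convert frames into a format it can draw on.

```python
import math
from typing import Sequence


def deepstream_description(h264_files: Sequence[str],
                           infer_config: str = "config_infer.txt",
                           analytics_config: str = "config_analytics.txt",
                           width: int = 1280, height: int = 720) -> str:
    """Build a gst-launch-1.0 style description linking the plugins above.

    All file names are placeholders and must not contain spaces.
    """
    n = len(h264_files)
    if n == 0:
        raise ValueError("need at least one input file")
    columns = math.ceil(math.sqrt(n))  # near-square tile grid
    rows = math.ceil(n / columns)

    main_chain = " ! ".join([
        # Batch all decoded streams; batched-push-timeout is in microseconds.
        f"nvstreammux name=mux batch-size={n} width={width} height={height}"
        " batched-push-timeout=40000",
        f"nvinfer config-file-path={infer_config}",       # TensorRT inference
        f"nvdsanalytics config-file={analytics_config}",  # analytics on metadata
        f"nvmultistreamtiler rows={rows} columns={columns}"
        f" width={width} height={height}",                 # 2D mosaic of streams
        "nvvideoconvert",                                  # format the OSD accepts
        "nvdsosd",                                         # draw boxes and text
        "fakesink",                                        # placeholder sink
    ])
    # One decode branch per file, each linked to its own muxer request pad.
    branches = [
        f"filesrc location={path} ! h264parse ! nvv4l2decoder ! mux.sink_{i}"
        for i, path in enumerate(h264_files)
    ]
    return " ".join([main_chain, *branches])
```

The resulting string can be passed to `Gst.parse_launch` from the Python bindings, or to the `gst-launch-1.0` command-line tool. Running it requires the DeepStream SDK and valid nvinfer and nvdsanalytics config files. On a machine with a display, you would typically replace `fakesink` with a rendering sink.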

At this point you should have the background and understanding you need to implement your first DeepStream video processing application. Congratulations!

But what if your DeepStream app doesn’t perform as well as expected? What if the number of frames processed per second doesn’t match your model’s benchmark?
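A sensible first check is to measure the frame rate your pipeline actually achieves, for example from a buffer probe on the pad just before the sink. Here is a minimal, framework-independent sketch of a frame counter; the class and method names are hypothetical. With batched buffers, a probe would pass the number of frames in the batch to `tick()`. You would then call `fps_and_reset()` periodically (say, from a timer) to log the rate. The clock is injectable so the arithmetic can be checked without a running pipeline.

```python
import threading
import time
from typing import Callable


class FpsMeter:
    """Counts frames and reports the average rate since the last report."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._lock = threading.Lock()  # probes run on streaming threads
        self._start = clock()
        self._frames = 0

    def tick(self, frames: int = 1) -> None:
        """Call from a probe; pass the number of frames in the buffer/batch."""
        with self._lock:
            self._frames += frames

    def fps_and_reset(self) -> float:
        """Return frames per second since the previous call, then start over."""
        with self._lock:
            now = self._clock()
            elapsed = now - self._start
            rate = self._frames / elapsed if elapsed > 0 else 0.0
            self._start, self._frames = now, 0
            return rate
```

The lock is there because probe callbacks run on GStreamer streaming threads, while the periodic report typically runs on a different thread.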

This is where the interesting part begins. Now you can profile your pipeline to understand where time is being spent and which resources are under- or over-utilized. If that sounds relevant to you, continue to our next blog, “How to Identify the Bottlenecks in your DeepStream Pipeline.”