How do you identify your pipeline bottlenecks before you reach production? Are the assumptions you’re making hurting your successful deployment? This blog features the highlights from our AMA session on productizing AI models—with questions submitted by the community and answers from our deep learning experts.

Taking Deep Learning Models to Production is Tough

Deep learning creates value when it’s productized and can be used by people and businesses. However, transitioning deep learning models from research to production-ready applications is a challenge for AI teams of any size. According to Gartner, about 80% of AI projects never reach deployment.

Data scientists and deep learning engineers often face an extensive model development process. The initial stages include data preparation, architecture selection, and model training. But once those steps are done, there’s still a long way to go before a model is productized and the application is up and running.

Our first AMA (Ask Me Anything) session with Deci’s deep learning experts, Sefi Kligler, VP of AI, and Lotem Fridman, Head of Technology, was about this very topic of productizing deep learning models. Moderated by Ofer Baratz, Deep Learning Product Manager, you can watch the video for the full AMA or keep reading for the highlights (edited for brevity and clarity) on common challenges and practical tips around deep learning development and deployment.

Q&A on Model Development Stage

Ofer: How do you make a deep learning model light enough, but with sufficiently high performance to run on a mobile phone? What changes should you make within a deep neural network?

Sefi: It really depends on what you’re looking for. If you’re asking which type of models are the best fit for mobile phones, the best answer is simply to try. Take a ResNet, MobileNet, EfficientNet, or DenseNet. Run them on a mobile phone and see which ones get the best performance. But if you’re looking at the layers, layers of grouped convolutions, specifically depth wise separable convolutions, tend to be the best fit for mobile phones. It’s not a coincidence that you see them in MobileNet.

Ofer: Is there a task that’s better suited for mobile phones than others?

Sefi: It’s the opposite. There are tests that you’ll struggle with running on mobile phones. For instance, analyzing a large image without the ability to decrease the resolution is very memory-consuming. In many cases, mobile phones heat up or don’t have enough memory. That’s why, it’s common to see only object detection, pose estimation, or very simple segmentations run on phones. More than those is usually more difficult.

Ofer: Which one is better: compress a large model to increase speed or train a new lighter model?

Sefi: It’s not simple, because you can do either. In fact, they’re very similar to each other. But in Deci, we usually go to the approach of training the smallest model first because it’s cheaper to compute instead of training the larger model and then finding out how to make it smaller. A small model also trains faster. There are papers claiming that the other way can provide better results, but you should consider the compute power necessary to develop each model. There’s always a tradeoff between the compute power needed and the results.

Ofer: How would you choose your model and architecture, and would hardware be one of the factors for choosing this architecture? Do you have any tips for deploying the same model on multiple hardware types?

Lotem: Deploying the same model on multiple hardware types is not something that we encourage you to do. But the best practice here is to build a concrete deployment pipeline for each hardware. If you want to deploy on CPU, then you should build a pipeline that tests, optimizes, and more for CPU. The same goes for GPU. We really recommend having a specific model for the target hardware in a manner that fits and performs as expected.

Sefi: Let’s say you have a model for ten different types of Samsung phones or ten different iPhones. The problem with that is, to get the optimal model, you need a different model for each mobile phone. Otherwise, the best practice is to take the oldest phone or the phone with the lowest compute power out of the series, try to fit the model to it, and hope it works for the others. But you should consider that you could get much better performance if you develop and optimize a different model or architecture for every phone.

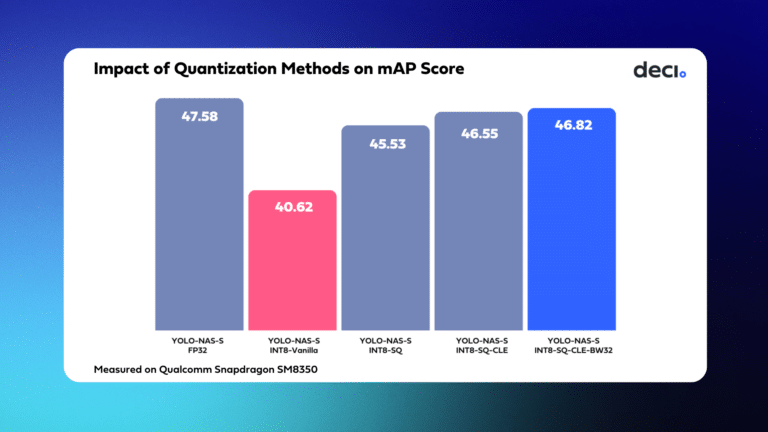

Ofer: Does model accuracy change with respect to hardware?

Sefi: While it’s true that accuracy is set by the training and weights of the model and not necessarily affected by the hardware, accuracy can still change due to compilation and quantization. For example, when using TFLite for mobile phones or TensorRT via GPU, the compiler itself could cause a degradation in accuracy, but it’s not due to the hardware.

Q&A on the Optimization Stage

Ofer: Can you have model acceleration as part of CI/CD or continuous training?

Lotem: You can. As opposed to a continuous deployment pipeline without an optimization phase, the major difference in adding the optimization component depends on what type of hardware you’re trying to deploy. Obviously, if you’re trying to deploy to a cloud-based virtual machine (VM), it will be simple. Deci has tools that do it in different manners and everything should work as expected.

But if you’re talking about deployment for edge devices, custom hardware, or anything that requires a more complex implementation to optimize, it depends on the actual scenario. In general, though, we recommend investing in the resources of optimization as a pre-deployment step before adding it as a candidate for continuous integration.

Only after running tests on the environment or validation set and knowing that you’re getting the correct performance and accuracy, should you deploy. It’s because optimization, especially quantization, can lead to some accuracy loss if not handled correctly.

Q&A on the Deployment Stage

Ofer: What are the most common challenges in taking deep learning models to production?

Lotem: In the past few years, edge devices are becoming more common. There’s now an abundance of different hardware types with their corresponding installations, operations support, and deployment SDKs. It takes a lot of time to master as opposed to working in a cloud environment, where there’s a very structured way of dockerizing everything. In terms of specific challenges, the common ones are compiling on particular edge hardware, reaching target performance, and optimizing after deployment.

Ofer: How would you describe the challenges in cloud environments?

Lotem: Cloud providers always build new tools and solutions that solve deployments for deep learning models. However, what can create friction are instances when personas who aren’t data scientists need to wrap all the data science code. In particular, the network overhead and management of the actual transmission can sometimes be a big challenge. On top of that, even if everything is working as expected from the communication standpoint, GPU utilization can still be low. Usually, this requires a complex skillset to solve.

Ofer: When GPU utilization is very low or even idle, why is it happening and how can you improve it in production?

Lotem: Often, the first assumption you make is it’s one model for one GPU, and this is not necessarily the case. What usually happens is you deploy multiple networks and increase throughput by running either replicas or different models on the same GPU. You then need to manage all the resources around the GPU on the VM or in the machine, and make sure that there are enough CPU cores and memory to load and offload the data from the GPU.

So, to improve GPU utilization, deploying multiple models should be taken into account. You must also consider setting up a monitoring tool that can properly profile the behavior of the end-to-end application and then analyze what can be improved in terms of GPU usage.

Ofer: What are the most common bottlenecks in the inference pipeline?

Lotem: Let’s first define what an inference pipeline is. It’s comprised of a few different steps including pre-processing, networking, inference, post-processing, and networking again, and this can differ depending on the device and VM.

First, batch size. Most devices, GPUs specifically, are built to work in large batches. So, you would want to use batching techniques to get significant improvement as opposed to running requests as they come.

Second, serialization. Python as a language is great at some things, but efficiency is not one of them. If you’ll get different requests and want to utilize the hardware with multiple processes, it’ll be best to run code that’s not necessarily Python but able to utilize the hardware properly. There are cases where it makes more sense to write everything in C++ or some other language that requires a lot of manual handling of complex code.

Third, pre-processing and post-processing. In small models, for instance, these processes can take more time, sometimes a lot more time than the actual inference time. Object detection is one. It can sometimes even deliver output that’s huge if there’s non-max suppression and a lot of data overhead involved. So, handling pre-processing and post-processing steps is crucial if you want to get the optimal performance out of the entire pipeline.

Lastly, profiling. Writing proper code and using proper tuning to know where the bottlenecks are coming from is very important if you want to know how to make the best use of your time.

We recommend identifying the major bottleneck in the inference pipeline as the initial step. This will be the most painful point or resource-consuming area that’s hurting your performance. Solve that, and then move forward from there.

Ofer: In terms of silos between teams that can lead to deployment issues, how do you improve the collaboration and coordination between data science and engineering?

Sefi: The problem that we tend to see a lot is that there are two separate teams. One is working on the data science or training of the model, which is then delivered to another team that’s in charge of taking it to production. If they didn’t communicate before the handover, we see a lot of cases where the model is tremendous and can never be run in production.

To address this situation which usually happens in larger AI teams, we try to encourage the data science team to do the handover and start to communicate with the engineers at an earlier stage—not only after training the model and the project is finished. There are also tools out there by Deci that can help you understand very fast, with very low effort, how your model performs before you even train it or take it to production. You can also understand whether this model will reach production or not.

Enjoyed this AMA? Check out some of our related videos including “How to Deploy Deep Learning Models to Production” and “An End-to-End Walkthrough for Deploying Deep Learning Models on Jetson” if you’re using NVIDIA Jetson devices.

Transform Your AI Models into Production-Ready Applications with Deci’s Platform

Productizing your deep learning models just got faster and easier. Deci’s end-to-end deep learning development platform enables AI developers to build, optimize, and deploy computer vision models on any hardware and environment with outstanding accuracy and runtime performance. You can use our Neural Architecture Search-based AutoNAC engine to generate custom hardware-aware architectures, or use pre-trained and optimized DeciNets models generated by AutoNAC.

The platform also includes lots of great tools that can support data scientists and deep learning engineers across the development phases of any deep learning project. The result? Improved the collaboration and coordination between data science and engineering teams.

- Hardware Aware Model Zoo contains pre-trained and optimized models that can be compared and simplify model selection.

- Benchmark HW allows you to see benchmarks that can help you find the best hardware for your application.

- SuperGradients is an open-source library that features proven recipes for training or finetuning CV models.

- Optimization tools enable you to compile and quantize your models with a click of a button and get results in minutes.

- Deployment tools, such as Infery, provide you with a unified model inference API to deploy with just three lines of code.

Book a demo and productize your deep learning models today.