When starting a deep learning project, selecting the right model can be tough. Beyond the problem and use case, you need to weigh many considerations about the structure of the neural architecture itself. This is often a tedious, time-consuming process that involves a lot of trial and error.

We discussed this and more at our recent Ask Me Anything (AMA) session. Ofri Masad, Head of AI, and Amos Gropp, Deep Learning Research Engineer, answered questions on model selection sent by the community. Ariela Karmel, Content Marketing Manager, served as the moderator.

Here are the highlights (edited for brevity and clarity) from the AMA. They include tips on how to approach model selection for various computer vision (CV) tasks, as well as best practices for increasing the chance that your selected architecture successfully reaches production. You can also watch the video.

Choosing Which SOTA Model to Use

Ariela: In an object detection project, which model is best to use, a YOLOv5 model or SSD?

Ofri: There’s no single answer. There are several model families, including the older SSDs, Faster R-CNN, and the YOLO series, all of which are great options. But at the end of the day, the choice depends very heavily on your use case. Here are some general considerations:

- Your goal – Is it real-time object detection, or something else?

- Model size

- Accuracy

- Latency

- What type of data do you have?

- Has someone already solved your specific use case, on your data or on data that looks similar?

Our strong advice, though, is to understand your deployment setting or production environment at a very early stage. For example, if you know that your model will run on a Jetson Xavier NX, you probably want to benchmark candidate models on it early. Don’t wait until the last moment, after you’ve trained your model repeatedly. That is how people usually end up with a model whose accuracy they love but whose latency underperforms in the deployment setting.
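To make the “benchmark early” advice concrete, here is a minimal, framework-agnostic latency benchmark sketch. It assumes you can wrap one forward pass of your model, running on the target device, in a zero-argument callable. `benchmark_latency` and `run_inference` are hypothetical names, not part of any Deci tool. For asynchronous GPU runtimes, the callable must block until results are ready. Otherwise, the timings only measure how long it takes to launch the work.

```python
import math
import statistics
import time
from typing import Callable


def benchmark_latency(run_inference: Callable[[], object],
                      warmup: int = 10,
                      iterations: int = 100) -> dict:
    """Time a zero-argument inference callable and summarize latency in milliseconds."""
    # Warm-up runs let caches, lazy initialization, and clock frequencies settle
    for _ in range(warmup):
        run_inference()

    timings_ms = []
    for _ in range(iterations):
        start = time.perf_counter()
        run_inference()
        timings_ms.append((time.perf_counter() - start) * 1000.0)

    timings_ms.sort()
    # Nearest-rank 95th percentile: tail latency matters for real-time use
    p95_index = max(0, math.ceil(0.95 * len(timings_ms)) - 1)
    return {
        "mean_ms": statistics.fmean(timings_ms),
        "median_ms": statistics.median(timings_ms),
        "p95_ms": timings_ms[p95_index],
    }


if __name__ == "__main__":
    # Stand-in workload; replace with a call into your real model on the target device
    def dummy_model():
        return sum(i * i for i in range(10_000))

    print(benchmark_latency(dummy_model))
```

Running the same harness for each candidate on the actual device gives comparable numbers before you invest in repeated training runs.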

You can use models from the Deci Model Zoo, which are already in ONNX format, and get benchmarks for them on any of the GPU, CPU, and Jetson devices we support.

Ariela: I need to run on a CPU. What type of model is best suited?

Amos: There are many factors to consider, including the type of CPU, how many cores you plan to use, and whether you care more about latency or throughput. The answer differs greatly from case to case. But generally, when you plan to use new hardware, whether it’s a CPU, Jetson, or GPU, you must first consider the specifics of your hardware, what you want to do, the latency cost, and more. This also helps you identify operations that the hardware doesn’t support. For instance, not every operation you can run on a CPU or GPU can also run on a Jetson.
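One way to act on this early is to compare the operators your model uses against the operators the target runtime supports. The sketch below assumes you already have both lists. The model’s operators might come from, for example, the `op_type` of each node in an ONNX graph. The supported set would come from your runtime’s documentation. The operator names in the example are made up for illustration and do not describe any real device.

```python
from __future__ import annotations

from collections.abc import Iterable


def unsupported_ops(model_ops: Iterable[str], supported_ops: set[str]) -> list[str]:
    """Return the distinct operators used by a model that the target runtime lacks."""
    # dict.fromkeys de-duplicates while keeping first-appearance (graph) order
    return [op for op in dict.fromkeys(model_ops) if op not in supported_ops]


if __name__ == "__main__":
    # Hypothetical operator lists, for illustration only
    model_ops = ["Conv", "Relu", "Conv", "GridSample", "Resize", "Einsum"]
    target_supported = {"Conv", "Relu", "Resize", "Add"}
    print(unsupported_ops(model_ops, target_supported))  # → ['GridSample', 'Einsum']
```

A non-empty result is a signal to check whether the runtime falls back to a slower path for those operators, or whether the architecture needs to change.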

Community: When should I consider using Transformers?

Ofri: Transformers are becoming increasingly relevant in CV. For now, at least, Transformers will generally be a bit slower but more accurate, so it depends on your use case. If you’re aiming for very high accuracy and latency isn’t a big issue (for example, you’re not trying to run in real time), then Transformers might be a good solution.

That said, some papers show Transformers to be very fast and accurate at the same time. Even so, in object detection, for example, where a few Transformer-based models exist, we think convolutional neural nets still win on latency.

What we suggest is to compare Transformers with convolutional networks and choose whichever offers the best latency-versus-accuracy trade-off for your specific use case.
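Once each candidate has been measured on your data and your target hardware, comparing Transformers and CNNs “in terms of latency versus accuracy” can be made mechanical. The sketch below does two things. It keeps the Pareto-optimal candidates, meaning those that no other candidate beats on both accuracy and latency. It also picks the most accurate candidate within a latency budget. The candidate names and numbers are placeholders, not real benchmark results.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    accuracy: float    # e.g. top-1 or mAP on your own validation set
    latency_ms: float  # measured on the target hardware


def pareto_front(candidates: list[Candidate]) -> list[Candidate]:
    """Keep candidates that no other candidate beats on both accuracy and latency."""
    front = []
    for c in candidates:
        dominated = any(
            o.accuracy >= c.accuracy and o.latency_ms <= c.latency_ms
            and (o.accuracy > c.accuracy or o.latency_ms < c.latency_ms)
            for o in candidates
        )
        if not dominated:
            front.append(c)
    return sorted(front, key=lambda c: c.latency_ms)


def best_within_budget(candidates: list[Candidate], budget_ms: float) -> Candidate | None:
    """Most accurate candidate whose measured latency fits the budget, if any."""
    feasible = [c for c in candidates if c.latency_ms <= budget_ms]
    return max(feasible, key=lambda c: c.accuracy, default=None)


if __name__ == "__main__":
    # Placeholder measurements, for illustration only
    models = [
        Candidate("cnn_small", 0.70, 5.0),
        Candidate("cnn_large", 0.78, 15.0),
        Candidate("vit_base", 0.80, 30.0),
        Candidate("vit_tiny", 0.69, 12.0),
    ]
    print([c.name for c in pareto_front(models)])  # → ['cnn_small', 'cnn_large', 'vit_base']
    print(best_within_budget(models, 20.0).name)   # → cnn_large
```

With a looser budget, the same code would return the Transformer. The right answer depends on the use case rather than on the model family.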

Ariela: Why should you use CNNs when Vision Transformers provide better results?

Amos: Both are great networks, and we’ve seen research trends that take ideas from CNNs and bring them to Vision Transformers, and vice versa. Papers like ConvNeXt and COVNET are some examples. Keep in mind, though, that the two work very differently. So once you choose one of them as your design space, don’t mix them together, because that makes the search more complicated.

Ariela: When should I use deep learning versus classic machine learning algorithms?

Ofri: Just because deep learning models exist doesn’t mean we must use them in every case. If a classic CV algorithm gives you the results you need, that’s great. If your task is covered by a known algorithm that works well and gives you the right results, that’s great as well.

In general, though, for more complex tasks such as object detection, segmentation, and classification, CNNs are much more accurate than classical CV algorithms; usually, it’s not even close. So if your task requires a deeper understanding of what’s in the image, you will probably need a CNN.

Model Selection Techniques

Ariela: How much effort should you put into model selection versus model optimization?

Ofri: It depends on the use case, but we recommend starting with good model selection, because it makes all the difference. Spend more of your effort on selecting the actual architecture at the beginning of your work. If you’re not using the right tool, all the work around it will be difficult and might not even yield the results you’re looking for.

For instance, if your network isn’t fast enough, you can’t fix that by training it differently. You need to choose the right network for your deployment setting and use case. Then, in later stages, you can go deeper into fine-tuning the parameters and the rest.
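A quick way to see why training can’t fix a slow network is that a layer’s compute is fixed by its shape, not by its weights. For a 2D convolution with a square K × K kernel, the multiply-accumulate count (MACs) is H_out × W_out × C_out × (C_in / groups) × K × K. The sketch below computes it. Keep in mind that MACs are only a rough proxy for latency. Real latency also depends on memory bandwidth and on how well the hardware runs each operator.

```python
def conv2d_output_size(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a 2D convolution along one axis (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def conv2d_macs(height: int, width: int, c_in: int, c_out: int, kernel: int,
                stride: int = 1, padding: int = 0, groups: int = 1) -> int:
    """Multiply-accumulate operations for one square-kernel conv layer, batch size 1."""
    h_out = conv2d_output_size(height, kernel, stride, padding)
    w_out = conv2d_output_size(width, kernel, stride, padding)
    # Each output element needs (c_in / groups) * kernel * kernel multiply-adds
    return h_out * w_out * c_out * (c_in // groups) * kernel * kernel


if __name__ == "__main__":
    # A 7x7, stride-2 stem on a 224x224 RGB image (ResNet-style shapes)
    print(conv2d_macs(224, 224, 3, 64, kernel=7, stride=2, padding=3))  # → 118013952
    # Standard vs depthwise 3x3 conv on a 56x56x128 feature map
    standard = conv2d_macs(56, 56, 128, 128, kernel=3, padding=1)
    depthwise = conv2d_macs(56, 56, 128, 128, kernel=3, padding=1, groups=128)
    print(standard // depthwise)  # → 128
```

No amount of retraining changes these numbers. Only a different architecture changes them, for example through fewer channels, smaller kernels, depthwise layers, or a lower input resolution.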

Ariela: Should I use neural architecture search for model selection and what framework should I use?

Amos: The short answer is: of course. Neural architecture search (NAS) is a great idea that automates the process of finding a model. Currently, however, it’s mainly used by big companies such as Google, NVIDIA, and Facebook, because NAS requires a lot of computing power. Moreover, there isn’t a good platform for using NAS right now. Most papers also lack a good benchmark for real-world use of neural architecture search.
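To illustrate why NAS is compute-hungry, here is a toy, hardware-aware random search. It samples architectures from a small search space, discards any whose estimated latency exceeds a budget, and keeps the best-scoring survivor. Random search is only the simplest search strategy; published NAS methods also use evolutionary, reinforcement-learning, and gradient-based search. The search space, `toy_latency`, and `toy_accuracy` below are hypothetical stand-ins. In a real search, every call to `evaluate` means training and validating a network, which is where the cost comes from.

```python
from __future__ import annotations

import random
from typing import Callable, Optional

# Hypothetical search space: each architecture is one choice per dimension
SEARCH_SPACE = {
    "depth": [2, 3, 4, 6],
    "width": [32, 64, 128],
    "kernel": [3, 5],
}


def sample_architecture(rng: random.Random) -> dict:
    return {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}


def random_search(evaluate: Callable[[dict], float],
                  estimate_latency: Callable[[dict], float],
                  latency_budget: float,
                  trials: int = 50,
                  seed: int = 0) -> tuple[Optional[dict], float]:
    rng = random.Random(seed)
    best_arch, best_score = None, float("-inf")
    for _ in range(trials):
        arch = sample_architecture(rng)
        # Hardware-aware filter: skip the expensive evaluation if too slow
        if estimate_latency(arch) > latency_budget:
            continue
        score = evaluate(arch)  # real NAS: train and validate this candidate
        if score > best_score:
            best_arch, best_score = arch, score
    return best_arch, best_score


def toy_latency(arch: dict) -> float:
    # Hypothetical proxy: cost grows with depth, width, and kernel area
    return arch["depth"] * arch["width"] * arch["kernel"] ** 2 / 1000


def toy_accuracy(arch: dict) -> float:
    # Hypothetical stand-in for "train, then measure validation accuracy"
    return 1 - 1 / (arch["depth"] * arch["width"])


if __name__ == "__main__":
    arch, score = random_search(toy_accuracy, toy_latency, latency_budget=5.0)
    print(arch, round(score, 4))
```

Replace `toy_accuracy` with a full training run and the 50 trials become 50 trainings. That is the computing power the answer above refers to.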

Community: Can pruning replace the model selection process?

Ofri: No. Pruning can be a great option for optimizing your model after training, but it’s not a substitute for choosing the right model. Pruning can only remove, not add. So, for example, if your model is not built to detect small objects or to segment fine details, pruning won’t help with that at all.
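The point that “pruning can only remove” is easy to see in the simplest variant, unstructured magnitude pruning. It is sketched below on a flat list of weights, using a hypothetical helper rather than any specific library’s API. The helper zeroes the smallest-magnitude fraction of the existing weights. It never creates new weights or changes what the architecture can represent.

```python
from __future__ import annotations


def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the `sparsity` fraction of weights with the smallest absolute value."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n_prune = int(round(sparsity * len(weights)))
    # Indices ordered from smallest to largest magnitude
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_prune = set(order[:n_prune])
    # Same length as the input: pruning removes information, it never adds any
    return [0.0 if i in to_prune else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    print(magnitude_prune([0.9, -0.05, 0.3, -0.7, 0.01, 0.2], sparsity=0.5))
    # → [0.9, 0.0, 0.3, -0.7, 0.0, 0.0]
```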

You might want to start with a huge model and then shrink it, but that also works only up to a certain point. If you start with a huge model, your training process will be very long, and you might not even get the best possible results.

Another thing to consider is that pruning doesn’t behave the same way on all hardware. Some hardware can’t take advantage of pruning, so you might prune your network and see almost no effect on latency. Moreover, if you’ve reached the point where you need to prune your network dramatically, perhaps it was too big to begin with. You could have found a network that was easier and faster to train, and so on.
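Whether pruning reduces latency depends on whether the runtime can exploit it. A dense kernel still does the work for zeroed weights. Removing whole channels, by contrast, shrinks the tensor that any hardware runs. The sketch below contrasts the two on a tiny weight matrix, using hypothetical helper names. It is a simplification: some hardware and libraries do accelerate specific sparsity patterns, such as NVIDIA’s 2:4 structured sparsity on Ampere GPUs.

```python
from typing import List

Matrix = List[List[float]]  # rows = output channels, columns = input features


def unstructured_prune(weight: Matrix, threshold: float) -> Matrix:
    """Zero individual weights below the threshold; the dense shape is unchanged."""
    return [[0.0 if abs(w) < threshold else w for w in row] for row in weight]


def structured_prune_rows(weight: Matrix, keep: int) -> Matrix:
    """Drop whole rows (output channels) with the smallest L1 norm; the shape shrinks."""
    by_norm = sorted(range(len(weight)),
                     key=lambda r: sum(abs(w) for w in weight[r]),
                     reverse=True)
    kept = sorted(by_norm[:keep])  # keep the surviving rows in their original order
    return [list(weight[r]) for r in kept]


def dense_macs(weight: Matrix) -> int:
    """MACs for a dense matrix-vector product: every stored entry is multiplied, zero or not."""
    return len(weight) * len(weight[0]) if weight else 0


if __name__ == "__main__":
    w = [[0.5, -0.1, 0.02],
         [0.01, 0.03, -0.02],
         [-0.8, 0.4, 0.6],
         [0.05, -0.04, 0.01]]
    print(dense_macs(w), dense_macs(unstructured_prune(w, 0.1)))  # → 12 12
    print(dense_macs(structured_prune_rows(w, keep=2)))           # → 6
```

In the unstructured case, most entries end up zero, yet a dense kernel still performs all 12 multiply-adds. Removing whole channels yields a smaller dense layer that is cheaper on any hardware, at the cost of a coarser cut.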

Liked this AMA? Check out some of our related videos including “How to Select the Right CNN Architecture for Your Application,” “Can You Achieve GPU Performance When Running CNNs on a CPU?” and “How to Find the Correct Deep Learning Model for Your Task.”

Automate Model Selection with Deci’s Deep Learning Development Platform

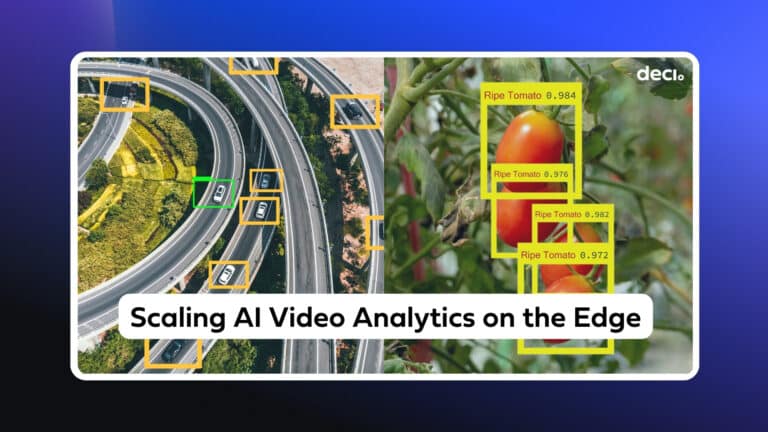

Selecting the right model for your use case just got faster and easier. Deci’s end-to-end deep learning development platform enables AI developers to build, optimize, and deploy CV models on any hardware and environment with outstanding accuracy and runtime performance. You can use our Neural Architecture Search-based AutoNAC engine to generate custom hardware-aware architectures, or use pre-trained and optimized DeciNets models generated by AutoNAC.

The platform also includes many great tools that support data scientists and deep learning engineers across every development phase of a deep learning project. The result? Improved collaboration and coordination between data science and engineering teams.

- Hardware Aware Model Zoo contains pre-trained, optimized models that you can compare to simplify model selection.

- Benchmark HW lets you view benchmarks that help you find the best hardware for your application.

- SuperGradients is an open-source library that features proven recipes for training or fine-tuning CV models.

- Optimization tools enable you to compile and quantize your models with a click of a button and get results in minutes.

- Deployment tools, such as Infery, provide you with a unified model inference API to deploy with just three lines of code.

Book a demo and select the right deep learning model for your use case today.