As deep learning has succeeded across many domains, the community has come to value open-source libraries and tools for training neural networks. Thanks to these accessible, comprehensive resources, researchers, data scientists, and engineers can experiment with deep learning models far more easily.

This blog post surveys some of the popular PyTorch-based deep learning libraries and tools used by the community and introduces SuperGradients, Deci’s new free, open-source training library.

A Quick Word About the Rise of PyTorch-based Models

Although PyTorch is still used less than TensorFlow overall, interest in it is growing. Kaggle’s State of Data Science and Machine Learning 2021 survey shows strong year-over-year growth in the use of the PyTorch framework.

PyTorch’s growth and acceptance in the community reflect the preference of researchers, developers, and engineers for simplicity and performance. This adoption has fostered an organic, self-sustaining ecosystem of PyTorch-based libraries, packages, and tools that aim to simplify model development and training. Built collaboratively and in the open, this ecosystem seeks to support and accelerate AI development.

Neural Network Training Libraries and Tools Overview

PyTorch Image Models (timm)

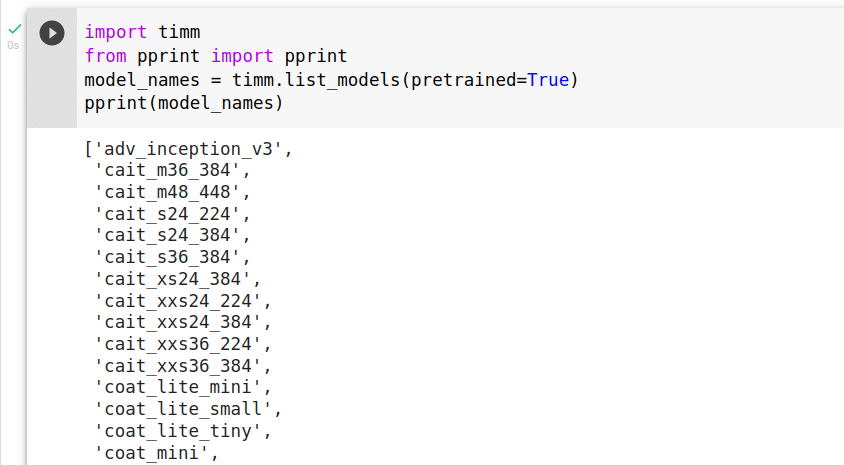

‘timm’ is a PyTorch-based neural network training library created by Ross Wightman and is focused on state-of-the-art (SOTA) image classification.

Pros:

- Beyond a collection of over 300 pre-trained SOTA image classification models, it provides data loaders, optimizers, and learning-rate schedulers for training and fine-tuning models on custom datasets.

- It supports loading pre-trained models, listing the models that have pre-trained weights, and searching for model architectures by wildcard.

- The library’s GitHub repository includes training, validation, inference, and checkpoint-cleaning scripts that help users reproduce results and fine-tune models on custom datasets.

Cons:

- It currently supports only classification models and does not support other computer vision tasks such as semantic segmentation, instance segmentation, and object detection.

- Adding custom components, such as new optimizers or loss functions, is difficult.

- Only a few training recipes are documented; most models are not accompanied by the recipes used to train them.
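The wildcard search mentioned above is exposed in timm through `timm.list_models()`, which accepts a shell-style filter such as `'*resnet*'` and a `pretrained` flag, while `timm.create_model()` builds a model by name. The sketch below does not call timm. It only mimics the filtering behavior with the standard-library `fnmatch` module over a hypothetical, hard-coded catalogue, so it runs without timm installed; the model names and weight flags are illustrative assumptions.

```python
from fnmatch import fnmatchcase
from typing import Dict, List

# Hypothetical catalogue: model name -> whether pre-trained weights exist.
# Names and flags are illustrative only, not timm's actual registry.
CATALOGUE: Dict[str, bool] = {
    "resnet18": True,
    "resnet50": True,
    "resnet200": False,
    "efficientnet_b0": True,
    "vit_base_patch16_224": True,
}


def list_models(pattern: str = "*", pretrained: bool = False) -> List[str]:
    """Return sorted names matching a shell-style wildcard such as '*resnet*'.

    With pretrained=True, only models flagged as having weights are kept.
    """
    return sorted(
        name
        for name, has_weights in CATALOGUE.items()
        if fnmatchcase(name, pattern) and (has_weights or not pretrained)
    )


print(list_models("*resnet*"))                   # ['resnet18', 'resnet200', 'resnet50']
print(list_models("*resnet*", pretrained=True))  # ['resnet18', 'resnet50']
```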

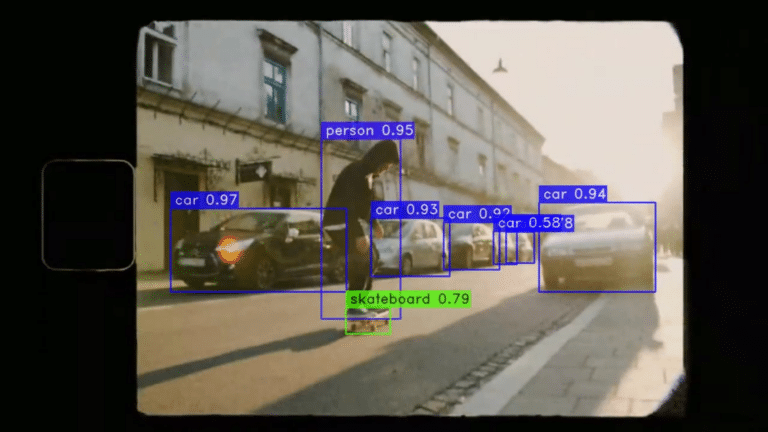

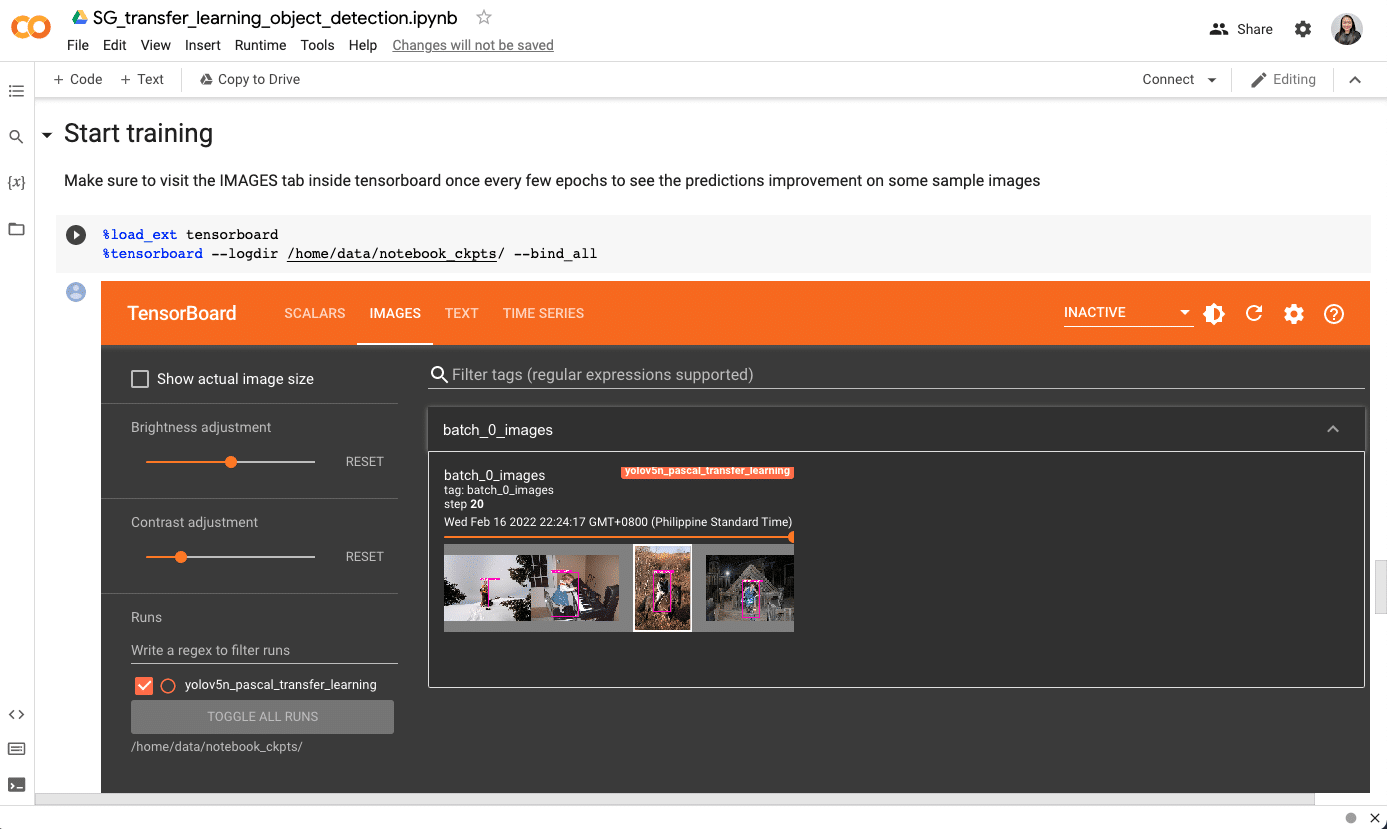

Ultralytics’ YOLOv5

YOLOv5 is a family of object detection architectures and models from Ultralytics. According to Ultralytics, its open-source repository represents the company’s research into future vision AI methods and incorporates lessons learned and best practices developed during research and development.

Pros:

- The PyTorch-based codebase lets users load models pre-trained on the COCO dataset.

- The repository contains scripts to train models and reproduce results on the COCO dataset.

- The codebase supports training and fine-tuning on custom data.

- Pre-trained YOLOv5 variants of different sizes are available on PyTorch Hub.

Cons:

- It supports only the YOLOv5 family of object detection models.

- Its complex, tightly coupled code makes it very difficult to add custom components such as optimizers and loss functions.

- It relies on ‘magic numbers’, constant values for strides and augmentation scales that cannot be modified without affecting the results, and the rationale behind them is not documented.
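Stride constants show why such values cannot simply be edited. Assuming the three output strides 8, 16, and 32 used by the standard YOLOv5 models, the sketch below shows how they fix the size of each prediction grid and why a square input must be a multiple of the largest stride. `grid_sizes` and `round_up` are hypothetical helpers written for illustration, not code from the YOLOv5 repository.

```python
from typing import List, Sequence

# Assumed output strides of the three detection layers (P3, P4, P5).
STRIDES = (8, 16, 32)


def grid_sizes(img_size: int, strides: Sequence[int] = STRIDES) -> List[int]:
    """Side length of the prediction grid at each stride for a square input."""
    max_stride = max(strides)
    if img_size % max_stride != 0:
        # The deepest feature map would not tile the image evenly.
        raise ValueError(f"img_size={img_size} is not a multiple of {max_stride}")
    return [img_size // s for s in strides]


def round_up(img_size: int, multiple: int = max(STRIDES)) -> int:
    """Round img_size up to the nearest multiple (ceiling division)."""
    return -(-img_size // multiple) * multiple


print(grid_sizes(640))  # [80, 40, 20]
print(round_up(600))    # 608
```

Changing any stride changes the grid each detection layer predicts on, so weights and settings tuned for one set of strides no longer line up with the new grids.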

Detectron2

Detectron2 is a PyTorch-based neural network training library by Facebook AI Research that provides users with access to SOTA detection and segmentation algorithms.

Pros:

- It is designed to be modular, flexible, and extensible, and it supports efficient training on a single GPU or multiple GPUs.

- Detectron2, the successor of Detectron and maskrcnn-benchmark, includes SOTA algorithms such as DensePose, panoptic feature pyramid networks, and numerous variants of the pioneering Mask R-CNN.

- It is one of the tools published in the PyTorch ecosystem.

Cons:

- It does not offer access to all SOTA models.

- It is restricted to detection and segmentation and does not support other computer vision tasks such as classification.

- Its modular yet highly abstract design makes it very difficult to modify the deep learning model.
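Much of Detectron2’s extensibility comes from registries: a component (a class or builder function) is registered under a name, for example with the `@BACKBONE_REGISTRY.register()` decorator, and the config selects it by that name. The sketch below re-creates the pattern in plain Python; `Registry`, `ToyBackbone`, and the nested config dict are hypothetical stand-ins, not Detectron2 code.

```python
from typing import Callable, Dict


class Registry:
    """Minimal name-to-class registry (illustrative; not Detectron2's implementation)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._classes: Dict[str, type] = {}

    def register(self) -> Callable[[type], type]:
        """Return a class decorator that records the class under its own name."""
        def decorator(cls: type) -> type:
            if cls.__name__ in self._classes:
                raise KeyError(f"{cls.__name__!r} is already registered in {self.name!r}")
            self._classes[cls.__name__] = cls
            return cls
        return decorator

    def get(self, name: str) -> type:
        if name not in self._classes:
            raise KeyError(f"no class named {name!r} in registry {self.name!r}")
        return self._classes[name]


BACKBONE_REGISTRY = Registry("BACKBONE")


@BACKBONE_REGISTRY.register()
class ToyBackbone:
    """Hypothetical backbone; a real one would be a torch.nn.Module."""

    def __init__(self, depth: int = 18) -> None:
        self.depth = depth


# The config names the component, so a newly registered class can be
# selected without editing the code that builds the model.
config = {"MODEL": {"BACKBONE": {"NAME": "ToyBackbone", "DEPTH": 50}}}
backbone_cfg = config["MODEL"]["BACKBONE"]
backbone = BACKBONE_REGISTRY.get(backbone_cfg["NAME"])(depth=backbone_cfg["DEPTH"])
```

The same indirection that makes components pluggable also makes changes harder to trace, since the class that runs is chosen by a string in the config rather than by an explicit import.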

MMDetection

MMDetection (‘mmdetection’) is a PyTorch-based object detection toolbox developed as part of the OpenMMLab project.

Pros:

- It provides access to SOTA object detection models such as Faster R-CNN, DETR, VFNet, and others.

- The toolbox’s main features include a modular design, out-of-the-box support for multiple detection frameworks, and high efficiency.

- It also includes scripts for visualization, model conversion, benchmarking, and hyperparameter optimization, among other tasks.

Cons:

- It is restricted to object detection models and some instance segmentation models.

- It does not support other computer vision problems such as classification.
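MMDetection’s modular design is largely driven by its config system: a config file lists parent configs in `_base_`, overrides only the fields it changes, and can set `_delete_=True` on a dict to replace the inherited dict instead of merging into it. The function below is a simplified, hypothetical re-creation of that merge rule in plain Python, not the MMCV/MMEngine implementation; the model and optimizer fields are illustrative.

```python
import copy
from typing import Any, Dict


def merge_config(base: Dict[str, Any], override: Dict[str, Any]) -> Dict[str, Any]:
    """Return a new dict with `override` merged into a deep copy of `base`.

    Nested dicts are merged key by key; any other value replaces the inherited
    one. A dict carrying `_delete_=True` replaces the inherited dict outright.
    """
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict):
            value = dict(value)  # shallow copy so the caller's dict is not mutated
            replace = value.pop("_delete_", False)
            if not replace and isinstance(merged.get(key), dict):
                merged[key] = merge_config(merged[key], value)
                continue
        merged[key] = copy.deepcopy(value)
    return merged


# Hypothetical parent config, in the spirit of a `_base_` file.
base_cfg = {
    "model": {"type": "FasterRCNN", "backbone": {"type": "ResNet", "depth": 50}},
    "optimizer": {"type": "SGD", "lr": 0.02, "momentum": 0.9},
}

# Hypothetical child config: change one nested field, replace the optimizer.
child_cfg = {
    "model": {"backbone": {"depth": 101}},
    "optimizer": {"_delete_": True, "type": "AdamW", "lr": 0.0001},
}

cfg = merge_config(base_cfg, child_cfg)
print(cfg["model"]["backbone"])  # {'type': 'ResNet', 'depth': 101}
print(cfg["optimizer"])          # {'type': 'AdamW', 'lr': 0.0001}
```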

fast.ai

fast.ai is a PyTorch-based neural network training library for practitioners and researchers. Its design aims to make users productive quickly while keeping the library open to customization based on their preferences.

Pros:

- It seeks to offer both high-level components that are easy to use without sacrificing flexibility or performance and low-level components that can be combined to create novel approaches.

- Its layered API lets users work with the high-level API, and rewrite parts of it or add custom behavior to suit their needs, without having to learn the low-level API.

Cons:

- It offers only a narrow selection of classification and segmentation models.

- Although its low-level components can be combined to create novel approaches, making changes and adding custom features is not easy.
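fast.ai lets users add behavior to its training loop through callbacks, objects whose methods (such as `before_fit` or `after_batch`) are invoked at fixed points in the loop, instead of rewriting the loop itself. The sketch below illustrates that idea in plain Python; `TrainLoop`, `RecordLoss`, and the toy `step` function are hypothetical and far simpler than fastai’s own `Learner` and `Callback` classes.

```python
from typing import Callable, List, Sequence


class Callback:
    """Base callback: subclasses override only the events they need."""

    def before_fit(self, loop: "TrainLoop") -> None:
        pass

    def after_batch(self, loop: "TrainLoop") -> None:
        pass

    def after_epoch(self, loop: "TrainLoop") -> None:
        pass


class TrainLoop:
    """A fixed training loop whose behavior is extended through callbacks."""

    def __init__(self, step: Callable[[float], float], callbacks: Sequence[Callback] = ()) -> None:
        self.step = step  # hypothetical: maps one batch to a loss value
        self.callbacks = list(callbacks)
        self.epoch = 0
        self.loss = float("nan")

    def _fire(self, event: str) -> None:
        # Call the method named `event` on every callback, in order.
        for cb in self.callbacks:
            getattr(cb, event)(self)

    def fit(self, n_epochs: int, batches: Sequence[float]) -> None:
        self._fire("before_fit")
        for epoch in range(n_epochs):
            self.epoch = epoch
            for batch in batches:
                self.loss = self.step(batch)
                self._fire("after_batch")
            self._fire("after_epoch")


class RecordLoss(Callback):
    """Hypothetical callback that records the loss after every batch."""

    def before_fit(self, loop: TrainLoop) -> None:
        self.losses: List[float] = []

    def after_batch(self, loop: TrainLoop) -> None:
        self.losses.append(loop.loss)


recorder = RecordLoss()
loop = TrainLoop(step=lambda batch: batch * 0.5, callbacks=[recorder])
loop.fit(n_epochs=2, batches=[1.0, 2.0])
print(recorder.losses)  # [0.5, 1.0, 0.5, 1.0]
```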

PyTorch Lightning

PyTorch Lightning is a lightweight wrapper around PyTorch that aims to ease the software engineering involved in training models; it sits at a more fundamental layer of the model training ecosystem than the libraries above. It restructures PyTorch code around three design principles: maximal flexibility, modularity, and self-contained models.

Pros:

- It enables users to develop scalable deep learning models with a hardware-agnostic approach.

- It decouples research code from engineering code, which makes deep learning models easier to experiment with and reproduce.

- It has been developed to enable high-performance AI research.

- It is one of the tools published in the PyTorch ecosystem.

- It encourages users to write reusable, shareable code that can run on CPUs, multiple GPUs, or TPUs, and in 16-bit precision, without any changes.

Cons:

- It is only a wrapper that simplifies PyTorch code for scalable, high-performance deep learning; it does not offer any models to load and experiment with.

- It lacks documentation on ready-to-use training recipes for deep learning models.
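Lightning expresses the research/engineering split by putting research code in a `LightningModule` subclass (with hooks such as `training_step` and `configure_optimizers`) and engineering concerns such as devices and precision in `Trainer` arguments. The plain-Python sketch below does not use Lightning. `LinearModel`, `MiniTrainer`, and `apply_gradient` are hypothetical names chosen to show the same division of responsibilities on a one-parameter regression, so the example runs without PyTorch installed.

```python
from typing import List, Tuple

Batch = Tuple[float, float]  # (input x, target y)


class LinearModel:
    """'Research' code: the model, its loss, and how to update it. No loop, no run settings."""

    def __init__(self) -> None:
        self.w = 0.0  # the single weight of y_hat = w * x

    def training_step(self, batch: Batch) -> Tuple[float, float]:
        """Return (squared-error loss, d(loss)/dw) for one batch."""
        x, y = batch
        error = self.w * x - y
        return error ** 2, 2.0 * error * x

    def apply_gradient(self, grad: float, lr: float) -> None:
        self.w -= lr * grad  # plain gradient-descent update


class MiniTrainer:
    """'Engineering' code: owns the loop and run settings, not the model's math."""

    def __init__(self, max_epochs: int, lr: float) -> None:
        self.max_epochs = max_epochs
        self.lr = lr

    def fit(self, model: LinearModel, data: List[Batch]) -> float:
        """Run the loop and return the loss of the last batch."""
        loss = float("nan")
        for _ in range(self.max_epochs):
            for batch in data:
                loss, grad = model.training_step(batch)
                model.apply_gradient(grad, self.lr)
        return loss


model = LinearModel()
final_loss = MiniTrainer(max_epochs=200, lr=0.05).fit(model, [(1.0, 2.0), (2.0, 4.0)])
print(round(model.w, 6))  # 2.0
```

Replacing `MiniTrainer` with one that logs metrics or schedules the learning rate requires no edits to `LinearModel`, which is the kind of decoupling of research from engineering that Lightning advocates.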

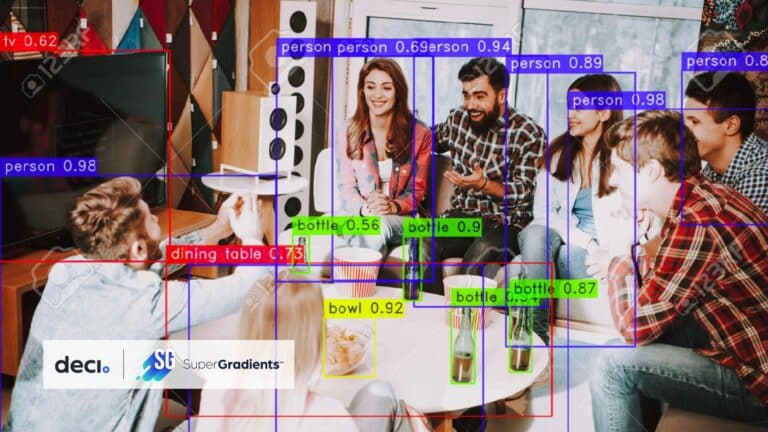

SuperGradients

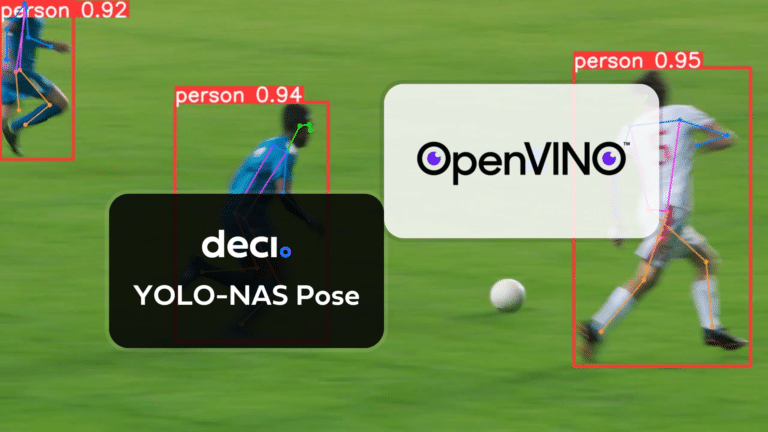

SuperGradients is Deci’s new free, open-source neural network training library. It lets users train models from scratch or fine-tune pre-trained models using SOTA techniques developed by Deci’s deep learning experts. Designed after studying the limitations of existing tools and libraries, SuperGradients aims to be a one-stop shop for SOTA computer vision models.

The library follows Deci’s mission of enabling AI developers to focus on what they do best: solving the world’s most complex problems. SuperGradients therefore offers a high level of abstraction that makes SOTA models easy to use, while still letting users customize and tweak their approach to suit their needs.

SuperGradients lets AI developers and engineers focus on research and training through simplified configuration mechanisms that can be customized to specific engineering requirements.
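This post does not show SuperGradients’ configuration API, so the sketch below is only a generic, hypothetical illustration of a recipe-style mechanism: a nested dict of defaults plus command-line style `key.path=value` overrides. The recipe keys and the `apply_overrides` helper are assumptions made for illustration, not SuperGradients’ schema or functions.

```python
import ast
import copy
from typing import Any, Dict, Iterable


def apply_overrides(recipe: Dict[str, Any], overrides: Iterable[str]) -> Dict[str, Any]:
    """Return a copy of `recipe` with "dotted.path=value" overrides applied.

    Values are parsed as Python literals when possible (numbers, booleans,
    lists); anything else, such as an optimizer name, is kept as a string.
    """
    result = copy.deepcopy(recipe)
    for item in overrides:
        path, sep, raw = item.partition("=")
        if not sep:
            raise ValueError(f"override {item!r} must look like key.path=value")
        *parents, leaf = path.split(".")
        node = result
        for key in parents:
            node = node.setdefault(key, {})  # create missing sections on the way
        try:
            node[leaf] = ast.literal_eval(raw)
        except (ValueError, SyntaxError):
            node[leaf] = raw
    return result


# Hypothetical default recipe; the keys are illustrative only.
default_recipe = {
    "training": {"epochs": 300, "lr": 0.1, "optimizer": "SGD"},
    "data": {"batch_size": 64},
}

custom = apply_overrides(
    default_recipe, ["training.lr=0.01", "training.optimizer=Adam", "data.batch_size=32"]
)
print(custom)
```

Keeping the defaults in one recipe and expressing each experiment as a short list of overrides makes it easy to see exactly what changed between runs.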

Pros:

- This PyTorch-based library supports the most common computer vision tasks: classification, object detection, and segmentation.

- It is easy to use, and it gives advanced users ways to configure and tweak parameters related to the training data, the model architecture, and other parts of the training process.

- All of its models are production-ready (for example, conversion to TensorRT has been verified) and can be integrated easily with inference servers, and even more easily with Deci’s Infery.

- It includes well-documented training recipes for SOTA models for different computer vision problems.

- It is integrated with professional DLOps tools such as ClearML, WandB, and DagsHub.

Cons:

- At the moment, SuperGradients offers only 25 pre-trained models.

- The SuperGradients community is still in its early days in terms of contributions, issue activity, and mutual support.

Summary

Many open-source deep learning libraries and tools can help researchers, data scientists, and engineers train their models effectively. With so many options, however, it is crucial to do the necessary due diligence and select the library or tool that best fits the specific problem and requirements.

This blog post has examined in detail some of the best-known PyTorch-based neural network training libraries and toolboxes and aimed to list their pros and cons objectively. We hope it serves as a useful resource for selecting the most appropriate deep learning library.