Deci has just released a new efficient frontier of semantic segmentation models that outperform known segmentation models in both accuracy and latency.

These new models, dubbed DeciSegs, were generated with Deci’s AutoNAC engine, a neural architecture search algorithm that designs accurate, fast, hardware-aware model architectures.

Data scientists use the AutoNAC engine to easily design custom model architectures that meet their specific performance requirements on their target hardware. With this new way of developing models, teams reduce development risks and achieve higher model accuracy and speed in less time.

DeciSegs – Delivering the Best Accuracy vs Latency Trade-Off for Semantic Segmentation

In deep learning, accuracy and latency are two important considerations that often involve a trade-off. While achieving high accuracy is desirable, it may come at the cost of increased latency or slower inference times, especially when dealing with large and complex models. Conversely, optimizing for low latency may result in sacrificing accuracy to some extent. Finding the right balance between accuracy and latency is crucial in many real-world applications, especially in autonomous vehicles.

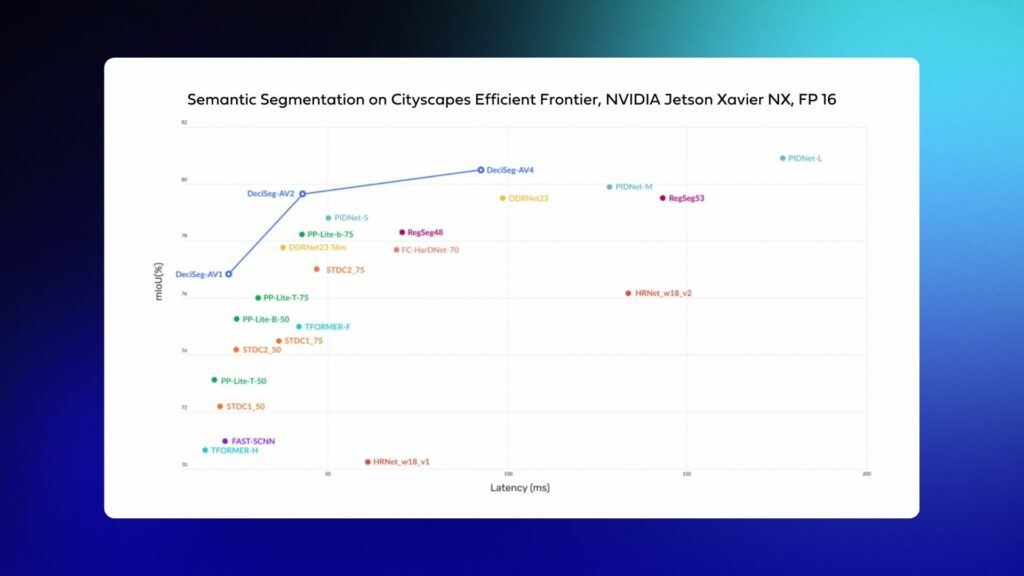

Deci used its AutoNAC engine, a neural architecture search technology, to develop a family of models called DeciSegs, which demonstrate the best accuracy-to-latency trade-off among known segmentation models. The resulting DeciSeg models can substantially improve the performance of real-world applications that require both high accuracy and low latency.

The accompanying chart compares state-of-the-art models such as PP-LiteSeg, FC-HarDNet, STDC, DDRNet, and PIDNet with the AutoNAC-generated DeciSeg models in terms of both accuracy and latency.

For example, one of the DeciSegs is 2.31x faster and 0.84 points more accurate than DDRNet23.

All the models presented were trained on the Cityscapes dataset and compiled to FP16 with NVIDIA TensorRT on the NVIDIA Jetson Xavier NX device.
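To make the idea of an efficient frontier concrete, here is a minimal sketch of how one could pick out the non-dominated models from a set of (latency, accuracy) measurements and compare one model against a baseline. The `ModelPoint` type, the helper names, and all the numbers are hypothetical placeholders for illustration; they are not the measurements behind the chart, and this is not how AutoNAC itself works.

```python
from typing import NamedTuple


class ModelPoint(NamedTuple):
    name: str
    latency_ms: float  # lower is better
    accuracy: float    # higher is better


def efficient_frontier(points: list[ModelPoint]) -> list[ModelPoint]:
    """Return the models that no other model beats on both latency and accuracy."""
    frontier = []
    best_accuracy = float("-inf")
    # Sort fastest first; on a latency tie, the more accurate model comes first.
    for p in sorted(points, key=lambda p: (p.latency_ms, -p.accuracy)):
        # A slower model earns its place only if it is strictly more accurate.
        if p.accuracy > best_accuracy:
            frontier.append(p)
            best_accuracy = p.accuracy
    return frontier


def compare(candidate: ModelPoint, baseline: ModelPoint) -> tuple[float, float]:
    """Return (speedup factor, accuracy gain in points) of candidate over baseline."""
    speedup = baseline.latency_ms / candidate.latency_ms
    gain = candidate.accuracy - baseline.accuracy
    return speedup, gain


# Hypothetical placeholder numbers, NOT real benchmark results.
models = [
    ModelPoint("model_a", latency_ms=10.0, accuracy=70.0),
    ModelPoint("model_b", latency_ms=20.0, accuracy=75.0),
    ModelPoint("model_c", latency_ms=25.0, accuracy=74.0),  # dominated by model_b
    ModelPoint("model_d", latency_ms=40.0, accuracy=78.0),
]

frontier_names = [p.name for p in efficient_frontier(models)]
speedup, gain = compare(models[1], models[3])
print(frontier_names)
print(f"{speedup:.2f}x faster, {gain:+.2f} accuracy points")
```

A statement such as "2.31x faster" corresponds to the latency ratio computed in `compare`, while the accuracy difference is a simple subtraction of the two scores.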

To learn more about the AutoNAC engine and how you can harness it to simplify and accelerate your deep learning development process, visit this page.

Cityscapes Dataset

The Cityscapes dataset is a valuable resource for autonomous vehicle research and development, providing large-scale, high-quality images of urban environments with detailed annotations for objects and regions. The dataset consists of high-resolution urban street scenes captured in different cities under diverse weather and lighting conditions. Its complexity and diversity challenge autonomous driving algorithms to perform well in real-world scenarios, helping researchers create more robust and reliable autonomous vehicles that can navigate safely in complex urban environments. Ultimately, the Cityscapes dataset plays a crucial role in advancing autonomous vehicle technologies, bringing us closer to a future with safer and more efficient transportation systems.

Semantic Segmentation & Autonomous Vehicles

Semantic segmentation is a fundamental task within the computer vision domain, and is frequently applied in various fields such as industrial and medical applications, and especially in the context of autonomous driving. It refers to the process of classifying every pixel in an image or video into one of several predefined categories, such as road, sky, building, and car. In the automotive industry, this type of information is used by autonomous vehicles to make informed decisions, such as navigating around obstacles or recognizing traffic signs.
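As a rough illustration of per-pixel classification, the sketch below turns per-pixel class scores into a label map by taking the highest-scoring class at each pixel, then scores the result with mean intersection-over-union (mIoU), a metric commonly used for semantic segmentation. The tiny 2x2 "image", the score values, and the function names are made up for the example; a real model would produce the scores, and real pipelines would typically use tensors rather than nested lists.

```python
CLASSES = ["road", "sky", "building", "car"]


def predict_label_map(scores):
    """scores[row][col] is a list of per-class scores; return the argmax class per pixel."""
    return [
        [max(range(len(pixel)), key=pixel.__getitem__) for pixel in row]
        for row in scores
    ]


def mean_iou(pred, target, num_classes):
    """Mean intersection-over-union over the classes present in pred or target."""
    intersection = [0] * num_classes
    union = [0] * num_classes
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            if p == t:
                intersection[p] += 1
                union[p] += 1
            else:
                # A mismatched pixel enlarges the union of both classes involved.
                union[p] += 1
                union[t] += 1
    ious = [i / u for i, u in zip(intersection, union) if u > 0]
    return sum(ious) / len(ious) if ious else 0.0


# Made-up scores for a 2x2 image (one score per class, per pixel).
scores = [
    [[0.7, 0.1, 0.1, 0.1], [0.1, 0.8, 0.05, 0.05]],
    [[0.6, 0.1, 0.2, 0.1], [0.2, 0.1, 0.3, 0.4]],
]
target = [[0, 1], [0, 2]]  # ground truth: the bottom-right pixel is "building"

pred = predict_label_map(scores)
miou = mean_iou(pred, target, num_classes=len(CLASSES))
print([[CLASSES[c] for c in row] for row in pred])
print(f"mIoU = {miou:.2f}")
```

Here the bottom-right pixel is predicted as "car" while the ground truth is "building", so both of those classes get an IoU of zero and pull the mean down.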

Autonomous Vehicles & Edge Devices

Edge devices are compact and power-efficient computing devices that can be placed directly at the source of data, in this case, an autonomous vehicle. They are designed to perform complex computational tasks on-device, eliminating the need for continuous data transfer to a remote server. High throughput edge devices are critical for real-time autonomous driving with deep learning. Edge devices such as the NVIDIA Jetson Xavier NX need to process large amounts of sensor data from cameras, lidar, radar, and other sources quickly and accurately to make split-second decisions. This requires efficient deep learning algorithms that can operate on the limited computational resources of these devices without sacrificing performance. Additionally, optimizing data pipelines and minimizing data movement can help reduce latency and improve overall throughput. Without high throughput, autonomous vehicles may not be able to react quickly enough to changing road conditions, potentially endangering passengers and other drivers on the road.
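Latency and throughput on a target device are usually measured rather than estimated. The following is a minimal, framework-agnostic sketch of such a measurement: it times a single-input inference callable after a few warm-up runs and reports mean latency, an approximate 95th-percentile latency, and frames per second. The `benchmark` helper and the `fake_model` stand-in are hypothetical; on a GPU device, the callable would also need to wait for the device to finish before the timer stops.

```python
import statistics
import time


def benchmark(infer, sample, warmup=10, runs=100):
    """Time a single-input inference callable; return latency and throughput stats."""
    for _ in range(warmup):  # warm-up runs are excluded from the timing
        infer(sample)

    latencies_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(sample)
        latencies_ms.append((time.perf_counter() - start) * 1000.0)

    mean_ms = statistics.mean(latencies_ms)
    return {
        "mean_ms": mean_ms,
        # Approximate 95th percentile via a simple index into the sorted timings.
        "p95_ms": sorted(latencies_ms)[int(0.95 * (runs - 1))],
        # With one input per call, throughput is the inverse of mean latency.
        "fps": 1000.0 / mean_ms,
    }


def fake_model(frame):
    """Hypothetical stand-in for a real model: sums the frame's pixel values."""
    return sum(frame)


stats = benchmark(fake_model, sample=list(range(10_000)))
print(f"mean {stats['mean_ms']:.3f} ms, p95 {stats['p95_ms']:.3f} ms, {stats['fps']:.0f} FPS")
```

Reporting a tail percentile alongside the mean is a deliberate choice: for real-time driving, occasional slow frames matter as much as the average.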

Train Semantic Segmentation Models with SuperGradients

SuperGradients is Deci’s open-source “all-in-one” deep learning training library for PyTorch-based computer vision models. It supports the most commonly used computer vision tasks, including object detection, image classification, and semantic segmentation, with just one training script.

With SuperGradients, you can either train models from scratch or use our pre-trained models (classification, detection, segmentation). This saves you the time of searching for the right model across various sources and papers, the overhead of integration and implementation, and extra training iterations.

SuperGradients also includes classes, functions, and methods that have already been tested and used by many others, much as you would rely on PyTorch or NumPy rather than implementing everything yourself. You can use our recipes to easily reproduce our reported SOTA results on your machine and save time on hyperparameter tuning while still reaching those scores.

Last but not least, all of our models were tested and validated for compilation into production frameworks, and they come with the documentation, code examples, notebooks, and user guides you need to do it yourself; no other repo takes production readiness into consideration.

Please refer to the Semantic Segmentation Quickstart notebook for a comprehensive example covering the following stages: Experiment setup, Dataset definition, Architecture definition, Training setup, Training and Evaluation, Prediction, and Conversion to ONNX.

Deci’s State-of-the-Art Foundation Models

The DeciSegs model family represents just one part of Deci’s extensive range of foundation models. Within this collection, you’ll find the YOLO-NAS hyper-performant object detection models and the YOLO-NAS Pose models, designed for hyper-efficient pose estimation. Each family within Deci’s foundation models is meticulously crafted using the AutoNAC engine. It is tailored to excel in specific computer vision tasks and optimized for given hardware and dataset characteristics.

Upon choosing a foundation or custom model, you can train or fine-tune it using your own data via Deci’s SuperGradients PyTorch training library, as noted above. To further boost your model’s efficiency and inference speed, Deci’s Infery SDK is an invaluable tool.

Discover more about Deci’s foundation models and the Infery SDK, and see how they can transform your AI initiatives, by booking a demo with us.