A new version of Deci’s platform delivers enhanced tools for model design, all‑new inference acceleration capabilities, and seamless ways to deploy your models.

At Deci, we never stop moving forward. Through constant innovation and iteration, we strive to give our customers ever more powerful tools to develop their deep learning models and deploy them to production. We’re excited to announce the latest version of our deep learning development platform, which gives AI developers new ways to build and deploy deep learning-based applications.

With generative AI now arriving in mainstream applications, it’s clearer than ever that, to grow and survive in this new world, companies must build AI into their products. The pressure is on. Although AI has been introduced into many verticals, building AI algorithms and taking them to production remains highly manual, time-consuming, and complex, with many rounds of trial and error. In fact, according to Gartner, 85% of AI projects fail to reach production. Organizations that use AI to empower their data science teams and their AI development processes will win the future.

With Deci’s platform, data scientists and machine learning engineers can harness powerful AI algorithms to design production-grade deep neural networks with ease. We’re creating more time and space for AI developers to focus on solving bigger problems and building even better deep learning applications.

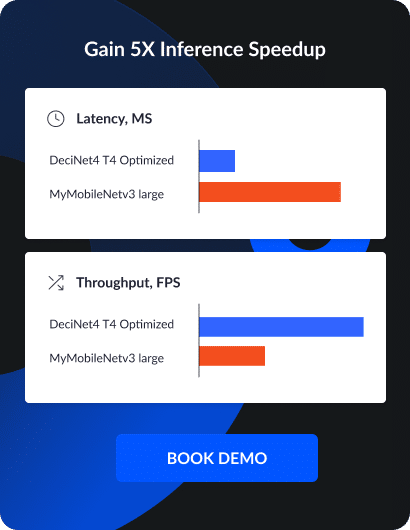

Teams that use Deci are building better-performing models in less time and lowering dev costs by 30% on average, while also cutting inference compute costs by up to 80%.

So What’s New at Deci?

Deep Learning Model Development Just Got Much Simpler

Enhancements to the AutoNAC Engine

The latest improvements to AutoNAC, Deci’s proprietary Neural Architecture Search engine, enable teams to use it across more tasks and to generate faster, more efficient architectures in even less time.

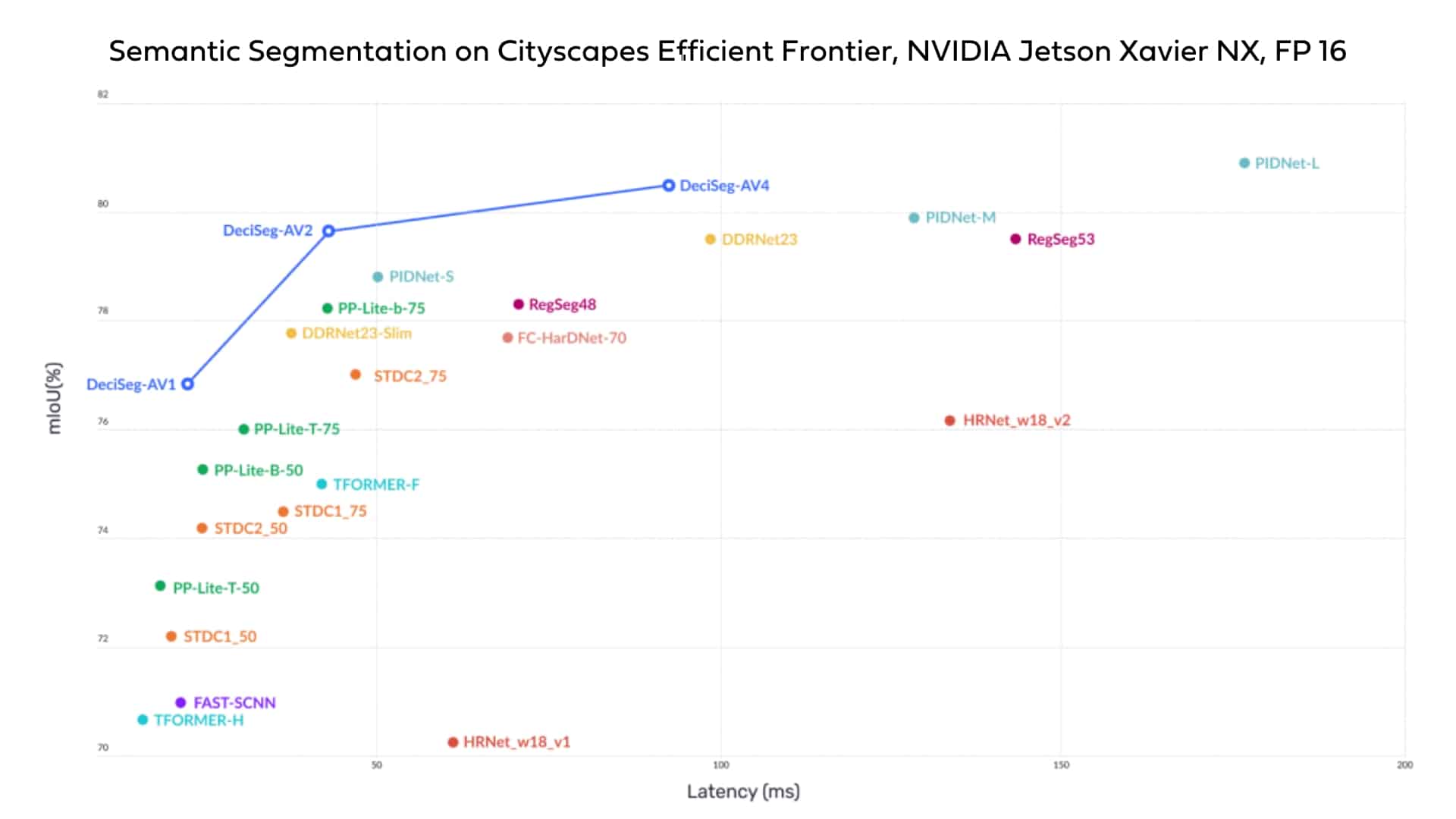

The enhanced AutoNAC engine has already generated a series of breakthrough semantic segmentation models. These new, production-ready models outperform all other known image-to-image models, delivering better speed and accuracy than networks such as DDRNet, STDC, and PP-LiteSeg. This is a testament to the power of AutoNAC. For example, one of the DeciSeg models is 2.31x faster than DDRNet23 and 0.84 points more accurate. Read the blog to learn more.

AutoNAC gives data scientists and machine learning engineers the superpowers they need to deliver powerful models to production with less time and effort. No more spending months on countless iterations to find architectures and tweak them by hand. It is now possible to go from data to production-ready models in days.

Experiment Tracking Capabilities

With TensorBoard now integrated into the Deci platform, AI practitioners can visualize and track their training processes. Training logs are uploaded to the platform automatically for each experiment, so the team can visualize and track experiments and easily compare different architecture candidates.

Take Your Inference Acceleration Capabilities to the Next Level

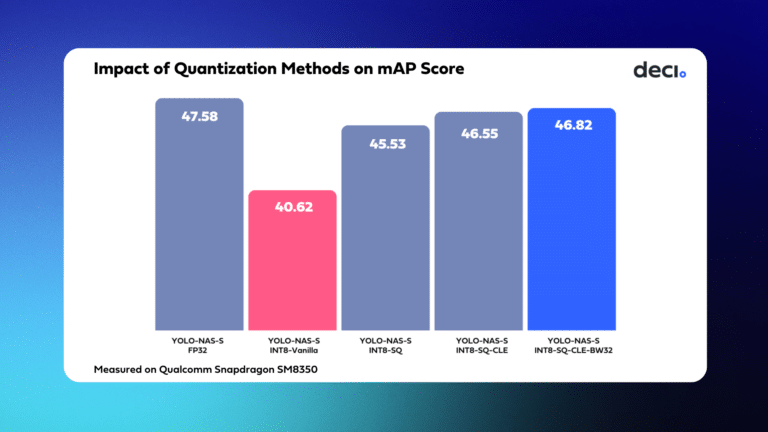

Support for INT8 Selective Quantization

Get even more speed out of your models, with no accuracy degradation, by performing INT8 selective quantization and quantization-aware training. Read the blog to learn more.
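The idea behind selective quantization is that not every layer tolerates INT8 equally well, so sensitive layers stay in higher precision while the rest are quantized. The sketch below illustrates that idea under stated assumptions; it is not Deci’s implementation. It uses symmetric per-tensor INT8 quantization and a crude proxy for sensitivity (mean relative weight-reconstruction error), whereas production tools typically measure each layer’s impact on model accuracy with calibration data. The function names and the `tolerance` threshold are hypothetical, and quantization-aware training is not shown.

```python
from typing import Dict, List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization into the signed range [-127, 127]."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [max(-127, min(127, round(v / scale))) for v in values], scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map INT8 codes back to approximate floating-point values."""
    return [x * scale for x in q]


def mean_relative_error(original: List[float], restored: List[float]) -> float:
    """Average |x - x_hat| / |x| over non-zero weights (a crude sensitivity proxy)."""
    pairs = [(x, y) for x, y in zip(original, restored) if x != 0]
    if not pairs:
        return 0.0
    return sum(abs(x - y) / abs(x) for x, y in pairs) / len(pairs)


def select_layers_to_quantize(layers: Dict[str, List[float]], tolerance: float) -> Dict[str, bool]:
    """Per-layer plan: True = run in INT8, False = keep in higher precision."""
    plan = {}
    for name, weights in layers.items():
        q, scale = quantize_int8(weights)
        error = mean_relative_error(weights, dequantize(q, scale))
        plan[name] = error <= tolerance
    return plan


if __name__ == "__main__":
    # Hypothetical toy layers: "head" has one large outlier that forces its
    # small weights to round to zero, so it is flagged as INT8-sensitive.
    layers = {
        "conv1": [0.1, -0.2, 0.3, 0.05],
        "head": [1000.0, 0.001, -0.002, 0.0015],
    }
    print(select_layers_to_quantize(layers, tolerance=0.05))
    # {'conv1': True, 'head': False}
```

The outlier-heavy layer is the classic case where a single per-tensor scale wastes most of the INT8 range, which is why leaving such layers unquantized can preserve accuracy while the rest of the network still gets the INT8 speedup.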

Inference Pipeline Optimization

Boost performance and maximize the utilization of your inference hardware by running asynchronous inference with Infery, Deci’s inference engine.
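To show why asynchronous execution improves hardware utilization, here is a minimal sketch of a pipelined inference loop in which preprocessing of the next input overlaps with inference on the current one, so the accelerator is not left idle while data is prepared. This is not Infery’s API: `preprocess`, `infer`, and `run_pipelined` are hypothetical stand-ins, and `time.sleep` simulates work (such as I/O or GPU calls) that releases Python’s GIL so the background thread can make progress.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import List


def preprocess(raw: int) -> int:
    """Hypothetical stand-in for decoding/resizing an input."""
    time.sleep(0.01)
    return raw * 2


def infer(batch: int) -> int:
    """Hypothetical stand-in for a model call on the accelerator."""
    time.sleep(0.01)
    return batch + 1


def run_pipelined(inputs: List[int]) -> List[int]:
    """Overlap preprocessing of item i+1 with inference on item i."""
    results: List[int] = []
    if not inputs:
        return results
    with ThreadPoolExecutor(max_workers=1) as pool:
        next_future = pool.submit(preprocess, inputs[0])
        for i in range(len(inputs)):
            ready = next_future.result()  # wait for the prepared input
            if i + 1 < len(inputs):
                # Start preparing the next input in the background...
                next_future = pool.submit(preprocess, inputs[i + 1])
            # ...while the current input runs through the model.
            results.append(infer(ready))
    return results


if __name__ == "__main__":
    start = time.perf_counter()
    print(run_pipelined([1, 2, 3]))  # [3, 5, 7]
    print(f"elapsed: {time.perf_counter() - start:.3f}s")
```

Results come back in input order, and with N inputs the total time approaches roughly N inference steps plus one preprocessing step rather than the sum of both for every input.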

Generative AI Optimization

Easily compile and quantize generative AI vision and language models to enhance applications including chatbots, image and video synthesis, personalized marketing, medical imaging analysis, virtual assistants, and more.

Expanded Support for New Edge and Cloud Hardware

Benchmark and optimize models across a variety of hardware devices using our online hardware fleet. Our latest additions include support for NVIDIA’s Jetson Orin family, NVIDIA A100 and A10G GPUs, and Intel Sapphire Rapids CPUs.

Out-of-the-box Framework Support

Enjoy extended deployment possibilities with out-of-the-box support for mobile (iPhone, Android) and browsers, among other platforms. Easily convert PyTorch models into popular production frameworks such as TensorRT, OpenVINO, TFJS, TFLite, and Core ML.

Curious About How Developers are Using Deci?

Teams around the world are using Deci. Here’s what they are saying:

Pallav Vyas, Senior Manager, Document AI/ML at Adobe

“By using Deci’s platform, we significantly shortened our time to market and transitioned inference workloads from cloud to edge devices. As a result, we improved the user experience and dramatically reduced our spending on cloud inference cost.”

Amir Bar, Head of SW and Algorithm at Applied Materials

“Applied Materials is at the forefront of materials engineering solutions and leverages AI to deliver best-in-class products. We have been working with Deci on optimizing the performance of our AI model and managed to reduce its GPU inference time by 33%. This was done on an architecture that was already optimized. We will continue using the Deci platform to build more powerful AI models to increase our inspection and production capacity with better accuracy and higher throughput.”

Lior Hakim, Co-Founder & CTO at HourOne

“Our advanced text-to-video solution is powered by proprietary and complex generative AI algorithms. Deci allows us to reduce our cloud computing cost and improve our user experience with faster time to video by accelerating our models’ inference performance and maximizing GPU utilization on the cloud.”

Interested in stepping up your deep learning development process and building models using practices favored by the world’s leading AI teams? Watch our product event for a demo of our latest and greatest features.