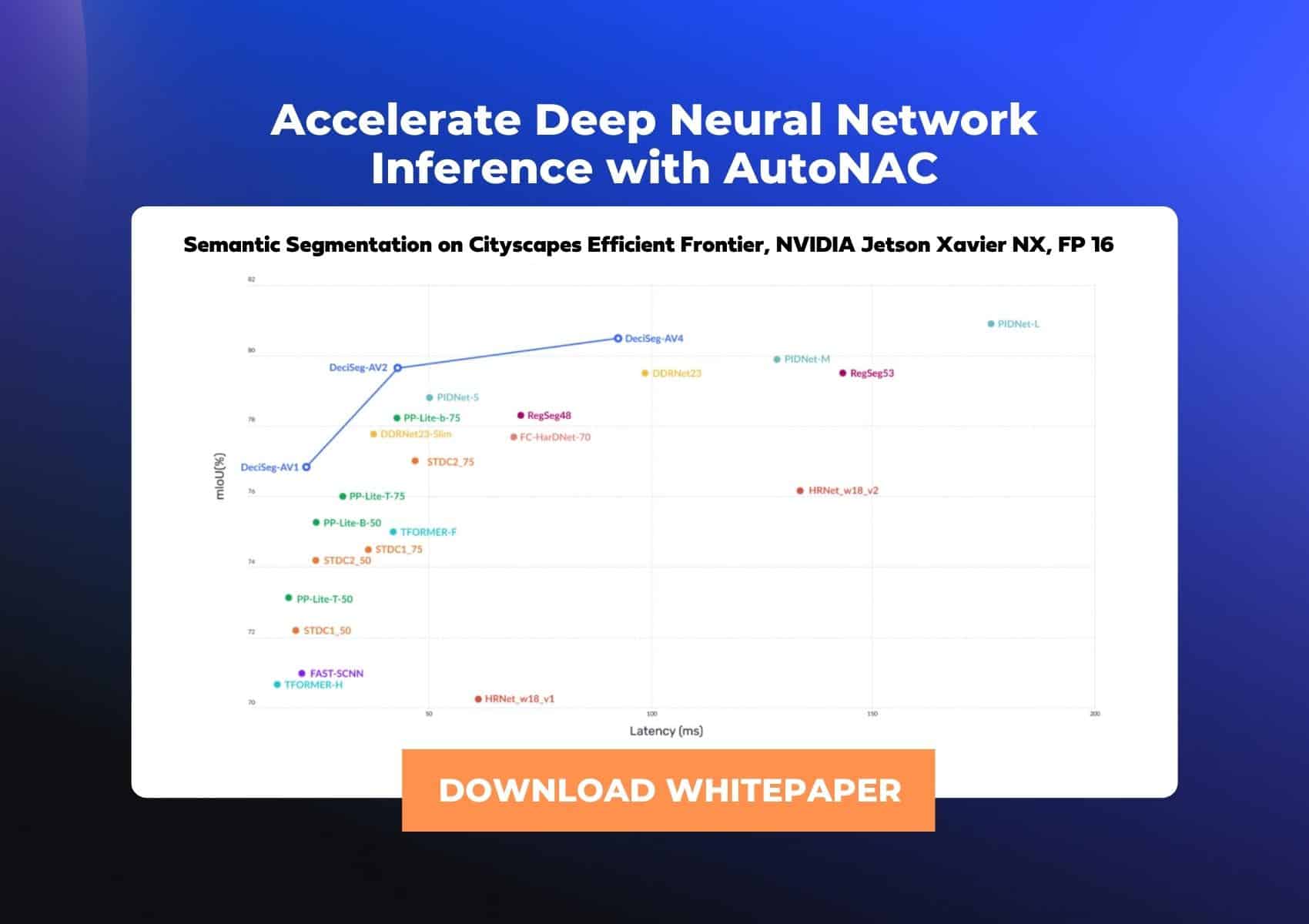

Accelerate Deep Neural Network Inference on Any Hardware while Preserving Accuracy with AutoNAC

Complex neural models tend to become bloated during development, which slows them down in production. AutoNAC technology helps deep learning engineers speed up inference and improve efficiency while preserving accuracy.

In this 20-page white paper, you will learn about:

- Technical issues that create an inference barrier

- Methods typically used to improve deep learning performance (e.g., pruning, quantization, and neural architecture search (NAS))

- The deep learning inference stack

- Ways to achieve real-time deep learning inference

The white paper was authored by Ran El-Yaniv, PhD, who is Deci’s Chief Scientist, a professor at the Technion – Israel Institute of Technology, and a former research scientist at Google.

Complete the form to get immediate access to the technical white paper.