An open-source library for training PyTorch-based computer vision models. Developed by Deci’s deep learning experts for the benefit of the AI community.

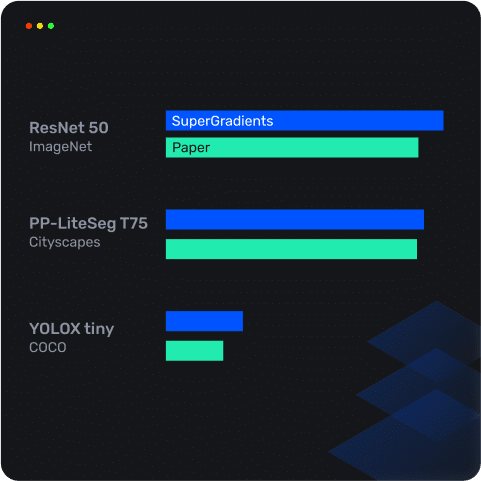

Get proven training recipes that deliver SOTA accuracy results with one line of code.

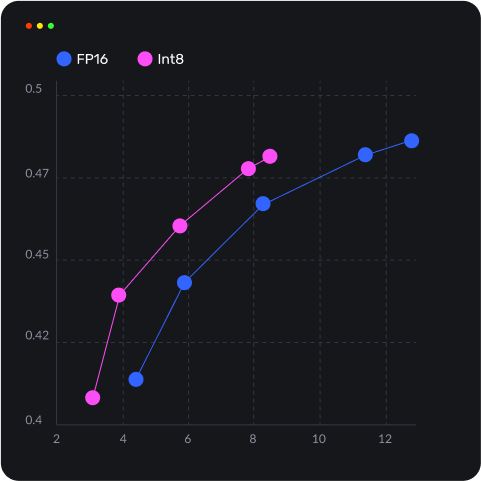

Boost your model’s inference performance without compromising on accuracy by quantizing your model to INT8 during the training process with one line of code.
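
To make the INT8 idea concrete, here is a minimal sketch of symmetric per-tensor quantization in plain Python. It is not the SuperGradients API; the function names (`quantize_int8`, `dequantize`, `fake_quantize`) are hypothetical. Quantization-aware training typically inserts this quantize-then-dequantize step into the forward pass so the model learns weights that tolerate the rounding error.

```python
def quantize_int8(values):
    """Map floats to integer codes in [-127, 127] with one shared scale."""
    max_abs = max(abs(v) for v in values)
    # Fall back to a scale of 1.0 for an all-zero input to avoid dividing by zero.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float values from integer codes."""
    return [c * scale for c in codes]


def fake_quantize(values):
    """Quantize then dequantize: the values the network 'sees' during QAT."""
    codes, scale = quantize_int8(values)
    return dequantize(codes, scale)


weights = [0.42, -1.27, 0.003, 0.9]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
```

Each restored value differs from the original by at most half the scale, which is the rounding error the training process has to absorb.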

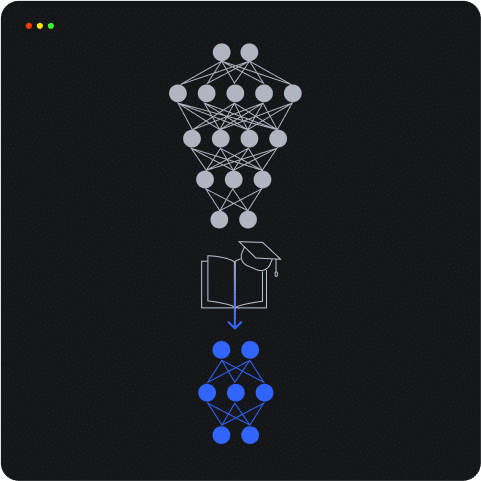

Easily boost smaller models’ accuracy by leveraging pre-trained larger models with a few lines of code.
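
As an illustration of how a larger pre-trained model can guide a smaller one, the sketch below implements a common knowledge-distillation loss: a weighted sum of the usual cross-entropy on the label and a temperature-softened KL term between teacher and student outputs. This is an assumption about the general technique, not SuperGradients' own implementation; `distillation_loss`, `temperature`, and `alpha` are hypothetical names.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with optional temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label,
                      temperature=4.0, alpha=0.5):
    """alpha * CE(student, label) + (1 - alpha) * T^2 * KL(teacher || student)."""
    # Hard-label term: cross-entropy of the student against the true class.
    ce = -math.log(softmax(student_logits)[label])
    # Soft-label term: KL divergence between temperature-softened distributions.
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(t * math.log(t / s) for t, s in zip(p_teacher, p_student) if t > 0)
    # T^2 keeps the soft term's gradient magnitude comparable across temperatures.
    return alpha * ce + (1 - alpha) * temperature ** 2 * kl
```

When the student's logits equal the teacher's, the KL term vanishes and only the weighted cross-entropy remains.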

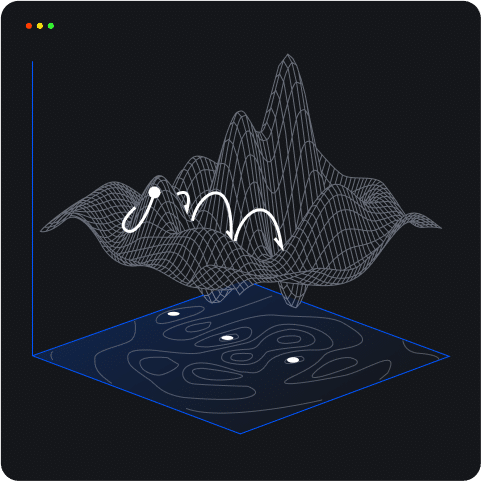

Easily apply training techniques such as LR scheduling, batch accumulation, exponential moving average (EMA), and easy-to-integrate training callbacks across all tasks.
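
The three techniques named above can each be sketched in a few lines of plain Python. These are generic formulations under stated assumptions (cosine decay with linear warmup, a fixed EMA decay, and equally sized micro-batches), not the library's own code; all function names are hypothetical.

```python
import math


def cosine_lr(step, total_steps, base_lr, warmup_steps=0, min_lr=0.0):
    """Learning rate at `step`: linear warmup followed by cosine decay."""
    if warmup_steps > 0 and step < warmup_steps:
        return base_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + math.cos(math.pi * progress))


def ema_update(ema_weights, weights, decay=0.999):
    """Blend current weights into a slowly moving average copy."""
    return [decay * e + (1 - decay) * w for e, w in zip(ema_weights, weights)]


def accumulated_gradient(micro_batch_grads):
    """Average gradients from several micro-batches before one optimizer step."""
    n = len(micro_batch_grads)
    return [sum(g) / n for g in zip(*micro_batch_grads)]
```

Averaging over micro-batches this way emulates a larger batch size when a full batch does not fit in GPU memory.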

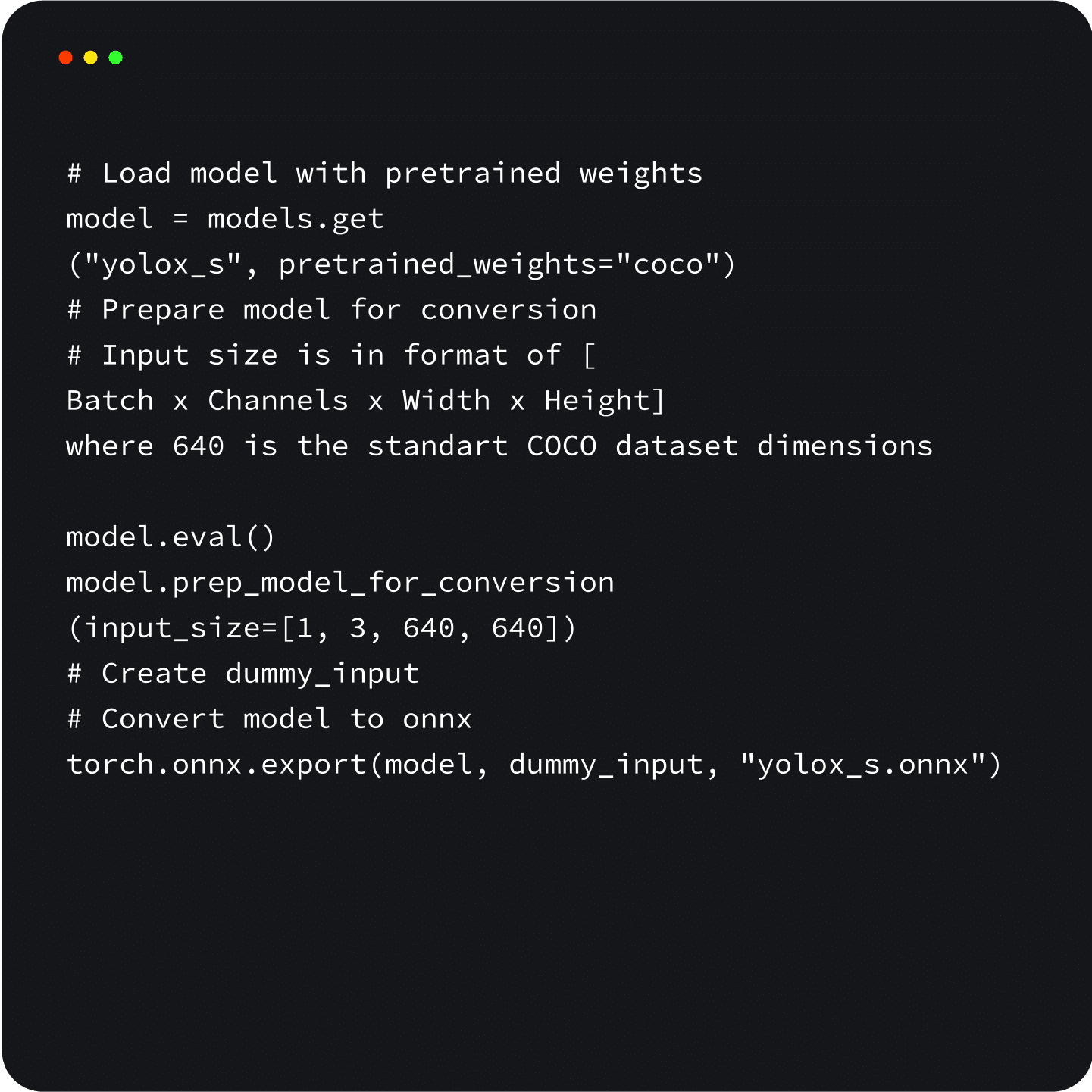

Easily load and fine-tune production-ready, pre-trained SOTA models that incorporate best practices and validated hyper-parameters for achieving best-in-class accuracy.

Easily plug in your own PyTorch data loaders. SuperGradients is also compatible with PyTorch datasets, losses, and metrics.

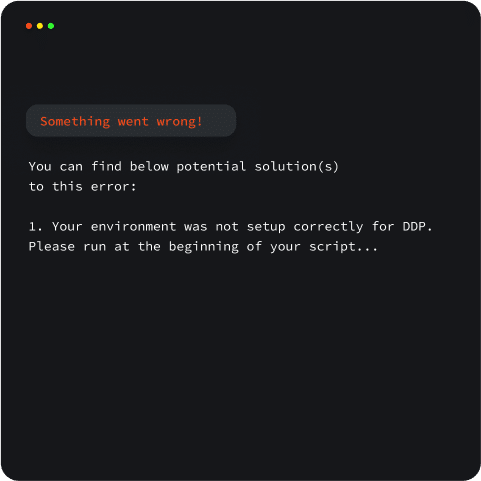

Save time with better insight into the most common training errors. Quickly understand how to overcome them with suggested remediation tips.

Integrate any custom dataset, loss, or metric into SuperGradients.

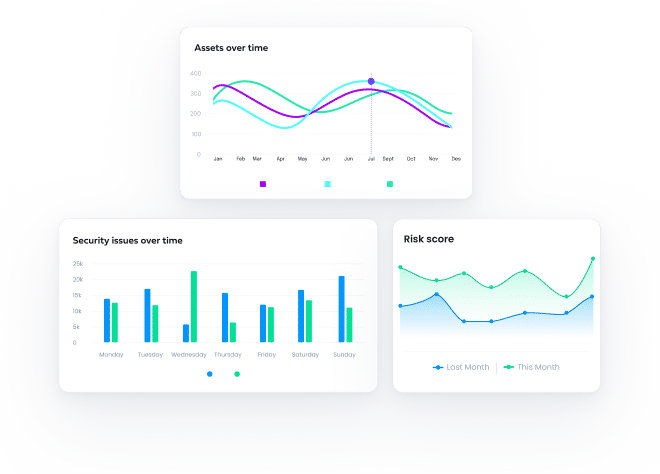

Monitor your training and save precious training time and resources with automated alerts.

Speed up your model training process by using multiple GPUs at once.

| Model | Dataset | Resolution | Paper Top-1 Accuracy | SG Top-1 Accuracy |
|---|---|---|---|---|
| ViT base | ImageNet21K | 224×224 | 84.15 | |
| ViT large | ImageNet21K | 224×224 | 85.64 | |
| EfficientNet B0 | ImageNet | 224×224 | 77.62 | 93.49 |
| RegNetY200 | ImageNet | 224×224 | 70.88 | 89.35 |
| RegNetY400 | ImageNet | 224×224 | 74.74 | 91.46 |
| RegNetY600 | ImageNet | 224×224 | 76.18 | 92.34 |
| RegNetY800 | ImageNet | 224×224 | 77.07 | 93.26 |
| ResNet18 | ImageNet | 224×224 | 70.6 | 89.64 |
| ResNet34 | ImageNet | 224×224 | 74.13 | 91.7 |
| ResNet50 | ImageNet | 224×224 | 76.3 | 93 |
| MobileNetV3_large-150 epochs | ImageNet | 224×224 | 73.79 | 91.54 |
| MobileNetV3_large-300 epochs | ImageNet | 224×224 | 74.52 | 91.92 |
| MobileNetV3_small | ImageNet | 224×224 | 67.45 | 87.47 |
| MobileNetV2_w1 | ImageNet | 224×224 | 73.08 | 91.1 |

| Model | Dataset | Resolution | Paper mAPval 0.5:0.95 | SG mAPval 0.5:0.95 |
|---|---|---|---|---|
| SSD lite MobileNet v2 | COCO | 320×320 | 21.5 | |
| SSD lite MobileNet v1 | COCO | 320×320 | 24.3 | |
| YOLOX Nano | COCO | 640×640 | 25.8 | 26.77 |
| YOLOX Tiny | COCO | 640×640 | 32.8 | 37.18 |
| YOLOX Small | COCO | 640×640 | 40.5 | 40.47 |
| YOLOX Medium | COCO | 640×640 | 46.9 | 46.4 |
| YOLOX Large | COCO | 640×640 | 46.9 | 49.25 |
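
For readers unfamiliar with the "mAP 0.5:0.95" column header: COCO-style mAP averages precision over ten intersection-over-union (IoU) thresholds from 0.50 to 0.95 in steps of 0.05. The sketch below shows only the IoU computation and the threshold list, not a full mAP evaluator; the helper names are hypothetical.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# The ten thresholds 0.50, 0.55, ..., 0.95 behind the "0.5:0.95" notation.
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def thresholds_matched(pred_box, true_box):
    """Thresholds at which a prediction would count as a true positive."""
    iou = box_iou(pred_box, true_box)
    return [t for t in IOU_THRESHOLDS if iou >= t]
```

A prediction with IoU 0.8 against its ground-truth box counts as a match at the seven thresholds from 0.50 to 0.80 and as a miss at the remaining three.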

| Model | Dataset | Resolution | Paper mIoU | SG mIoU |
|---|---|---|---|---|
| PP-LiteSeg B50 | Cityscapes | 512×1024 | 75.3 | 76.48 |
| PP-LiteSeg B75 | Cityscapes | 768×1536 | 78.2 | 78.52 |
| PP-LiteSeg T50 | Cityscapes | 512×1024 | 73.1 | 74.92 |
| PP-LiteSeg T75 | Cityscapes | 768×1536 | 76 | 77.56 |
| DDRNet23 Slim | Cityscapes | 1024×2048 | 77.3 | 78.01 |
| DDRNet23 | Cityscapes | 1024×2048 | 79.1 | 80.26 |
| STDC1-Seg50 | Cityscapes | 512×1024 | 72.2 | 75.11 |
| STDC1-Seg75 | Cityscapes | 768×1536 | 74.5 | 77.8 |
| STDC2-Seg50 | Cityscapes | 512×1024 | 74.2 | 76.44 |
| STDC2-Seg75 | Cityscapes | 768×1536 | 77 | 78.93 |
| RegSeg (exp48) | Cityscapes | 1024×2048 | 78.3 | 78.15 |
| Larger RegSeg (exp53) | Cityscapes | 1024×2048 | 79.5 | 79.2 |
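
The mIoU metric in the table above is the per-class intersection-over-union averaged over classes. A minimal sketch on flat lists of class ids follows; it is a simplification, since benchmark evaluation typically accumulates counts over the whole dataset and skips ignored labels. `mean_iou` is a hypothetical helper.

```python
def mean_iou(pred, target, num_classes):
    """Mean IoU over classes, for flat sequences of per-pixel class ids."""
    ious = []
    for c in range(num_classes):
        inter = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union > 0:  # skip classes absent from both prediction and target
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0


pred = [0, 0, 1, 1]
target = [0, 1, 1, 1]
score = mean_iou(pred, target, num_classes=2)
```

In this toy case class 0 has IoU 1/2 and class 1 has IoU 2/3, so `score` is their mean, 7/12.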

Find open source computer vision model architectures for your needs.

Join the Deep Learning Daily community to ask questions, learn from other practitioners and grow your professional network.

ResNet (residual neural network) is an artificial neural network architecture. It is a gateless, or open-gated, variant of the HighwayNet, the first working very deep feedforward neural network with hundreds of layers. In this walkthrough, Harpreet Sahota, DevRel Manager at Deci, demonstrates ResNet in action. You can check out the notebook and follow along!

EfficientNet is a convolutional neural network architecture and scaling method that uniformly scales network depth, width, and input resolution using a single compound coefficient. Join Harpreet Sahota, DevRel Manager at Deci, for a walkthrough of EfficientNet in action. You can check out the notebook and follow along!
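
The compound coefficient can be illustrated with the scaling rule from the EfficientNet paper, where depth, width, and resolution grow as powers of fixed bases (1.2, 1.1, and 1.15) chosen so that compute roughly doubles per unit of the coefficient. This is a sketch of the idealized formula only; the released B1 to B7 variants use rounded, hand-specified values, and the function names here are hypothetical.

```python
# Base multipliers reported in the EfficientNet paper for depth, width, resolution.
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15


def compound_scale(phi):
    """Depth, width, and resolution multipliers for compound coefficient phi."""
    return {
        "depth": ALPHA ** phi,
        "width": BETA ** phi,
        "resolution": GAMMA ** phi,
    }


def flops_multiplier(phi):
    """Approximate compute growth: FLOPs scale with depth * width^2 * resolution^2."""
    return (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi
```

With `phi = 1`, `flops_multiplier` returns roughly 1.92, close to the intended factor of 2.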

RegNet is a highly flexible network design space defined by a quantized linear function. It can be specified and scaled for high efficiency or high accuracy. Join Harpreet Sahota, DevRel Manager at Deci, for a walkthrough of RegNet in action, using it to determine whether an image is Santa or not. You can check out the notebook and follow along!
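
The "quantized linear function" can be sketched as follows, based on the parameterization in the RegNet paper: per-block widths start from a linear ramp, are snapped to powers of a width multiplier, and are then rounded to a multiple of 8. The parameter values in the example are illustrative only, and `regnet_widths` is a hypothetical helper, not a SuperGradients function.

```python
import math


def regnet_widths(depth, w0, wa, wm, divisor=8):
    """Per-block widths from a linear ramp quantized to w0 * wm**k."""
    widths = []
    for j in range(depth):
        u = w0 + wa * j                               # linear width for block j
        s = round(math.log(u / w0) / math.log(wm))    # nearest power of wm
        w = w0 * wm ** s                              # quantized width
        widths.append(int(round(w / divisor) * divisor))
    return widths


# Illustrative parameters (assumed for this example).
widths = regnet_widths(depth=13, w0=24, wa=36.44, wm=2.49)
stage_widths = sorted(set(widths))
```

Blocks that share a quantized width form one stage, so a handful of parameters determines both the number of stages and how many blocks each contains.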

Deci is ISO 27001 certified.

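```python
from transformers import AutoFeatureExtractor, AutoModelForImageClassification

# Load the preprocessing pipeline that matches the checkpoint.
extractor = AutoFeatureExtractor.from_pretrained("microsoft/resnet-50")
# Load the pre-trained ResNet-50 image classification model.
model = AutoModelForImageClassification.from_pretrained("microsoft/resnet-50")
```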