The size and autoregressive nature of today’s large language models (LLMs) pose significant challenges for fast LLM inference. Substantial computational and memory demands profoundly affect latency and cost. Achieving rapid, cost-efficient inference requires the development of smaller, memory-efficient models alongside the implementation of advanced runtime optimization techniques.

Watch the webinar for an in-depth exploration into the forefront of model design and optimization techniques. Discover strategies to accelerate LLM inference speed without sacrificing quality or escalating operational expenses.

What you’ll learn:

- Explore efficient modeling techniques: Dive into modeling techniques that enhance LLM efficiency while maintaining quality, including grouped query attention (GQA) and variable GQA.

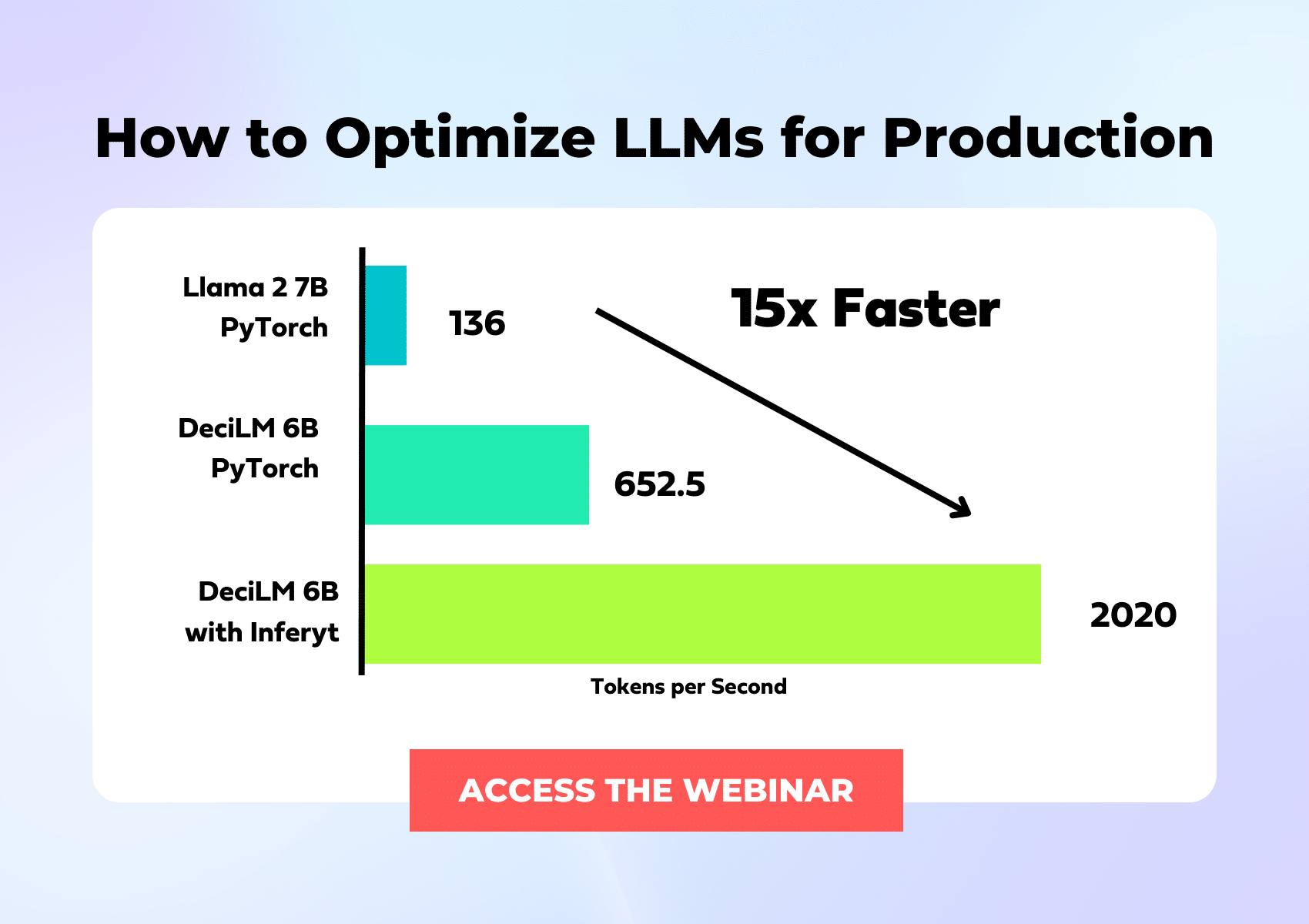

- Understand recent LLMs: Discover why recent LLMs, such as Llama 2 7B and DeciLM 6B outperform older and significantly larger LLMs.

- Uncover advanced optimization techniques: Learn about advanced runtime optimization strategies like selective quantization, CUDA kernels, optimized batch search, and dynamic batching.

Fill out the form to access the webinar!