This pose estimation model detects individuals while simultaneously estimating their poses, making it well suited to real-time applications on edge devices across industries.

TEL AVIV, Israel, November 7, 2023 — Deci, the deep learning company harnessing AI to build AI, announced today the launch of YOLO-NAS Pose, a groundbreaking pose estimation model generated with Deci’s cutting-edge Automated Neural Architecture Construction (AutoNAC) engine, the most advanced Neural Architecture Search (NAS)-based technology on the market. This revolutionary model is redefining capabilities in the technical pose estimation domain, demonstrating unparalleled accuracy and latency performance.

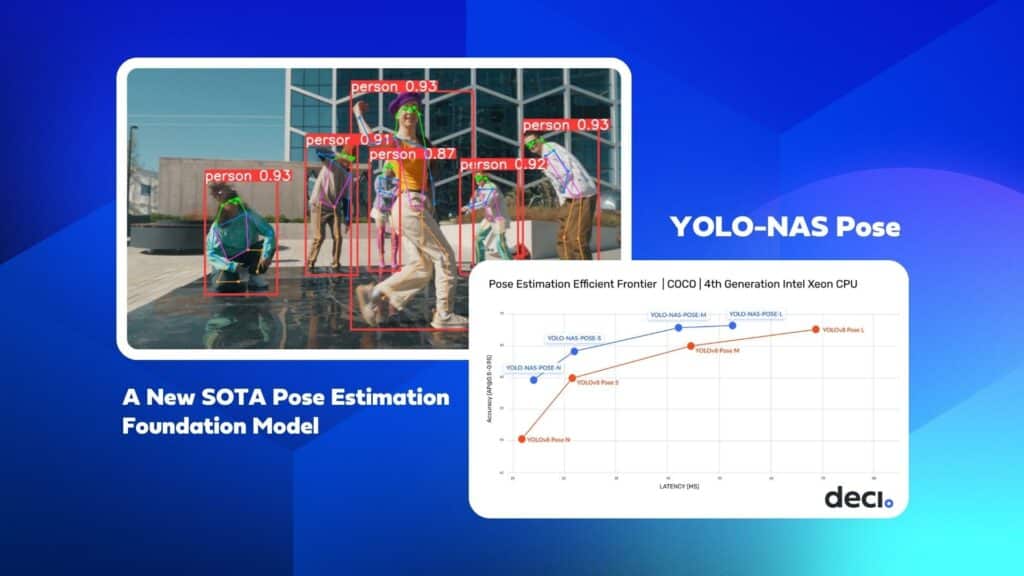

Pose estimation, a computer vision technique enabling the precise determination of human or object positions in space, is used across a broad spectrum of sectors, with applications including monitoring patient movements in healthcare, evaluating athletic performance, and crafting intuitive human-computer interfaces that enhance the capabilities of robotic systems. With superior latency-accuracy performance that eclipses other state-of-the-art models in the space, including YOLOv8 Pose, YOLO-NAS Pose holds the potential to transform industries, including healthcare, security, and beyond.

With four size variants (Nano, Small, Medium, and Large), YOLO-NAS Pose addresses a wide range of computational needs. All four variants deliver significantly higher accuracy with similar or lower latency compared to the equivalent YOLOv8 Pose variants. When comparing across variants, a significant boost in speed is evident. For example, the YOLO-NAS Pose M variant has 38% lower latency and 0.27 higher AP than YOLOv8 Pose L, measured on Intel Gen 4 Xeon CPUs. YOLO-NAS Pose efficiently detects objects while concurrently estimating their poses, making it a strong choice for applications requiring real-time insights.

“We’re excited to introduce YOLO-NAS Pose, a testament to our commitment to pushing the boundaries of AI for practical, real-world applications. Our AutoNAC technology is the powerhouse behind this advancement, allowing us to consistently craft models that not only achieve new performance heights but also unlock transformative use cases. We believe this model will be a vital asset to developers in the field of pose estimation and look forward to seeing the innovative ways in which it will be employed.”

Yonatan Geifman, CEO and Co-founder of Deci

The YOLO-NAS Pose model architecture is available under an open-source license. The pre-trained weights are available for non-commercial use.

Deci’s new model follows the success of YOLO-NAS, an object detection model released earlier this year that garnered widespread acclaim and took the developer community by storm, with applications of the technology shared widely. Alongside YOLO-NAS, Deci’s AutoNAC has generated some of the world’s most efficient computer vision and Generative AI models, such as DeciCoder, DeciLM 6B, DeciDiffusion, and many others. In the case of YOLO-NAS Pose, AutoNAC created this foundation model featuring an innovative head design, optimized for dual objectives: locating individuals and estimating their poses.

To learn more about YOLO-NAS Pose or to request a demo, visit https://deci.ai/.

This announcement was originally published on Cision PRWeb.