The DeciLM-7B-instruct text generation model offers top-notch accuracy with higher throughput than other models in its class, which makes it well suited to building intelligent, versatile chatbot applications. By combining it with LangChain’s document loading, retrieval, and memory tools, we can create chat applications that handle queries across different documents and give users comprehensive, accurate responses. This article walks you through using DeciLM-7B-instruct and LangChain to build a working chatbot that lets you chat with multiple PDF files of your choice.

By the end of this article, you will:

- Understand the capabilities and functionalities of LangChain in chatbot development.

- Learn how to load and process PDF documents for textual data extraction using LangChain.

- Understand the challenges encountered when chunking large documents like multi-page PDFs or books.

- Grasp the concept of text embeddings and their role in information retrieval.

- Develop a retrieval-based question-answering system that can extract relevant information from documents.

- Recognize the benefits of using multiple documents as a knowledge base for chatting with PDFs.

First, I will walk through each step and detail the components we will use to build the application. You can follow along with this notebook.

We start by importing the required packages:

```python
import time

import gradio as gr  # chat UI (used in the Gradio section)
import PyPDF2  # pdf reader
import torch
from io import BytesIO
from pypdf import PdfReader
from langchain.prompts import PromptTemplate  # for custom prompt specification
from langchain.text_splitter import RecursiveCharacterTextSplitter  # splitter for chunks
from langchain.embeddings import HuggingFaceEmbeddings  # embeddings
from langchain.vectorstores import FAISS  # vector store database
from langchain.chains import RetrievalQA  # qa and retriever chain
from langchain.memory import ConversationBufferMemory  # for model's memory on past conversations
from langchain.document_loaders import PyPDFDirectoryLoader  # loader for files from a directory
from langchain.llms.huggingface_pipeline import HuggingFacePipeline  # pipeline
from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline, BitsAndBytesConfig
```

Let’s first instantiate the DeciLM-7B-instruct LLM.

Model configuration:

```python
# Transformer model configuration: 4-bit quantization massively
# reduces the model's memory footprint, at the cost of some accuracy.
quant_config = BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_use_double_quant=True,
    bnb_4bit_quant_type="nf4",
    bnb_4bit_compute_dtype=torch.bfloat16,
)

model_id = "Deci/DeciLM-7B-instruct"  # model repo id

tokenizer = AutoTokenizer.from_pretrained(model_id)
model = AutoModelForCausalLM.from_pretrained(
    model_id,
    trust_remote_code=True,
    device_map="auto",  # place the weights on the available GPU(s)
    quantization_config=quant_config,
)

# create a text-generation pipeline
pipe = pipeline(
    "text-generation",
    model=model,
    tokenizer=tokenizer,
    do_sample=True,  # temperature is only applied when sampling is enabled
    temperature=1e-3,  # near-zero temperature: almost deterministic output
    return_full_text=False,  # return only the newly generated text
    max_new_tokens=2048,
)

llm = HuggingFacePipeline(pipeline=pipe)
```

We pass a 4-bit quantization_config to the model to lower its precision, which massively reduces its memory footprint at the cost of some accuracy.

How to load and process PDF documents for textual data extraction using LangChain

LLMs are ‘text-prediction’ agents, so they depend heavily on the data they receive as input and, more importantly, on its quality. Loading data for text extraction is therefore a crucial step in building a question-answering chatbot: a language model needs a good knowledge base to generate correct, accurate responses.

An essential part of loading the data is structuring it so that the language model returns high-quality, precise responses. Suppose we have a PDF with hundreds or thousands of pages: scanning through such a document by hand is daunting.

A chatbot that can do that in seconds is valuable. However, we cannot pass the entire PDF to the language model. LLMs limit how much text they can receive at once (the maximum number of tokens); DeciLM-7B-instruct, for instance, supports an 8k-token sequence length. To address this, we give the model only the parts of the document most relevant to the query, which also improves the precision and speed of answers.

We need to:

- Load the PDFs with Document loaders from LangChain.

- Split the files into smaller chunks (chunking).

- Convert each chunk into a vector representation (embedding) that captures its semantic meaning, so that similar texts end up close together.

- Store the vector representations of the chunks in a vector store database for quick retrieval.

LangChain provides methods to load and process these files efficiently. Let’s walk through each step.

Step 1: Loading multiple PDF files with LangChain

We’ll use the ArxivLoader from LangChain to load the Deep Unlearning paper and also load a few of the papers mentioned in the references:

- Towards Unbounded Machine Unlearning

- Subspace based Federated Unlearning

- Certifiable Machine Unlearning for Linear Models

- Opening the Black Box of Deep Neural Networks via Information

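```python
# ArxivLoader requires the arxiv and pymupdf packages
from langchain.document_loaders import ArxivLoader
from langchain.document_loaders.merge import MergedDataLoader

# arXiv IDs of the papers listed above
doc_to_query = ["2312.00761", "2302.09880", "2302.12448", "2106.15093", "1703.00810"]

# one ArxivLoader per paper
docs_to_merge = []
for doc in doc_to_query:
    loader = ArxivLoader(query=doc)
    docs_to_merge.append(loader)

# merge the loaders so a single load() call returns every document
all_loaders = MergedDataLoader(loaders=docs_to_merge)
all_docs = all_loaders.load()
```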

The loader returns a list of Document objects. Each document contains the page content and its metadata (for arXiv papers, details such as the title, authors, and publication date).

Step 2: Splitting the documents into smaller chunks

Once we have extracted the text of each loaded paper, we split it into smaller chunks. Splitting documents into chunks has several benefits:

- It reduces the amount of text we feed to the LLM as its knowledge base. This boosts the LLM’s ability to follow instructions, reduces generation costs, and helps us get faster responses.

- It keeps us clear of maximum-token errors. Every model has a context window that limits how many tokens (words or sub-words) it can receive; DeciLM-7B-instruct’s is 8k tokens, and we cannot feed it more. Splitting makes it possible to build a knowledge base from documents far longer than that.

- It helps the model give precise answers, because retrieval can narrow the information source down to a few small, relevant chunks instead of a few huge ones.

LangChain provides several text splitters (document transformers). We will use the RecursiveCharacterTextSplitter. It takes the list of documents we loaded above and splits the text on the first separator in its list (by default a blank line, then a newline, then a space, and finally individual characters); if any resulting chunk is still too large, it moves on to the next separator. We can set the chunk size (the maximum size of each chunk, measured in characters by default) and the chunk overlap (how many characters consecutive chunks share, which helps preserve context across chunk boundaries).

```python
text_splitter = RecursiveCharacterTextSplitter(chunk_size=1024, chunk_overlap=16)
splits = text_splitter.split_documents(all_docs)
print(f"We have {len(splits)} chunks in memory")
```
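To make the separator fallback concrete, here is a simplified pure-Python sketch of recursive character splitting. It is an illustration, not LangChain’s implementation: the function name recursive_split is hypothetical, chunk size is measured in characters, and chunk overlap is left out to keep it short.

```python
# Simplified sketch of recursive character splitting (not LangChain's code).
# Assumptions: sizes are in characters; no chunk overlap is added.

def recursive_split(text, chunk_size, separators=("\n\n", "\n", " ", "")):
    """Split text into pieces of at most chunk_size characters, trying
    coarse separators first and falling back to finer ones."""
    if len(text) <= chunk_size:
        return [text] if text else []
    sep, *rest = separators
    if sep == "":
        # Last resort: hard-cut the text into fixed-size pieces.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]
    chunks, current = [], ""
    for piece in text.split(sep):
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= chunk_size:
            current = candidate  # keep merging small pieces into one chunk
            continue
        if current:
            chunks.append(current)  # the current chunk is full
        if len(piece) <= chunk_size:
            current = piece
        else:
            # This piece alone is too big: recurse with finer separators.
            chunks.extend(recursive_split(piece, chunk_size, tuple(rest)))
            current = ""
    if current:
        chunks.append(current)
    return chunks


if __name__ == "__main__":
    sample = "Deep unlearning removes a class.\n\nIt must keep accuracy on the rest of the data."
    for chunk in recursive_split(sample, 20):
        print(repr(chunk))
```

Small pieces are merged back together until adding the next one would exceed the chunk size, so the splitter keeps paragraphs intact where it can and only falls back to sentences, words, or raw characters when it must.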

What to consider while chunking:

When it comes to chunking data, think about these factors:

- Document shape and density: If you need intact text or passages, larger chunks and variable chunking that preserves sentence structure can produce better results.

- User queries: Larger chunks and overlapping strategies help preserve context and semantic richness for queries that target specific information.

- Model limits: LLMs and embedding models each have practical limits on chunk size, so choose a chunk size that works for every model in your pipeline. For instance, if you use different models for summarization and embeddings, pick a size that suits both. Overly large chunks may also contain irrelevant information that significantly degrades retrieval.
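A quick back-of-the-envelope check helps with the last point. The sketch below assumes roughly 4 characters per token (a common rule of thumb for English text, not an exact figure), a 512-token input limit for the BAAI/bge-large-en-v1.5 embedding model, the 8k-token window of DeciLM-7B-instruct, and a hypothetical 200-token allowance for the prompt template. Chat history also consumes tokens and is not counted here; use the real tokenizer for exact numbers.

```python
# Rough context-budget check (heuristic sketch, not exact token counts).

CHARS_PER_TOKEN = 4      # rule-of-thumb assumption for English text
CONTEXT_WINDOW = 8192    # DeciLM-7B-instruct: 8k-token sequence length
EMBED_MAX_TOKENS = 512   # assumed input limit of BAAI/bge-large-en-v1.5


def approx_tokens(n_chars):
    """Estimate tokens from characters, rounding up."""
    return -(-n_chars // CHARS_PER_TOKEN)


def fits(chunk_size, k, max_new_tokens, prompt_overhead=200):
    """Return (fits_embedder, fits_llm, estimated_total_tokens) for
    k retrieved chunks of chunk_size characters plus generated output."""
    chunk_tokens = approx_tokens(chunk_size)
    total = k * chunk_tokens + prompt_overhead + max_new_tokens
    return chunk_tokens <= EMBED_MAX_TOKENS, total <= CONTEXT_WINDOW, total


embed_ok, llm_ok, total = fits(chunk_size=1024, k=8, max_new_tokens=2048)
print(embed_ok, llm_ok, total)  # True True 4296
```

With 1,024-character chunks, 8 retrieved chunks, and 2,048 new tokens, the estimate comes to about 4,300 tokens, inside both limits; 4,096-character chunks would overflow the embedding model and the context window.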

The advantage of loading multiple PDF files is that it gives the model a broad knowledge base; thus, it can provide more relevant and precise answers.

Step 3: Embedding the chunks and creating a vector store database

With the text chunks ready, we need to make them searchable. Models cannot compare human-readable text directly, so we convert each chunk into a vector, a numerical representation. These embeddings also capture some of the text’s meaning, placing semantically similar texts close together in the embedding space.

There are various embedding models, trained to translate human-readable text into machine-readable vectors. We will use a Hugging Face embedding model, specifically BAAI/bge-large-en-v1.5.

You can also explore alternative embedding models, such as those offered by OpenAI, although these are not available free of charge.

```python
embeddings_model_id = "BAAI/bge-large-en-v1.5"
embeddings_model = HuggingFaceEmbeddings(model_name=embeddings_model_id,
                                         model_kwargs={"device": "cuda"})  # run on the GPU
```
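To make “close together in the embedding space” concrete, the sketch below compares hypothetical 3-dimensional vectors with cosine similarity, a common measure for comparing embeddings. Real bge-large-en-v1.5 embeddings have 1,024 dimensions, and the numbers here are made up for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy "embeddings" for three short texts.
unlearning = [0.9, 0.1, 0.0]   # "machine unlearning"
forgetting = [0.8, 0.2, 0.1]   # "forgetting training data"
cooking = [0.0, 0.1, 0.9]      # "a pasta recipe"

print(cosine_similarity(unlearning, forgetting))  # close to 1: related topics
print(cosine_similarity(unlearning, cooking))     # close to 0: unrelated
```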

We must store the chunks in a database with the specified embedding model for easier and quicker retrieval. We will pass the embedding model and the chunks into the vector store. Vector stores streamline the process of storing embeddings and conducting similarity searches among these embeddings, simplifying the management and retrieval of high-dimensional data representations.

The vector store is simply responsible for storing and performing similarity checks between our query and the stored embeddings. Similarity search involves quantifying the proximity or relatedness between two or more embeddings.

LangChain provides several vector store databases. We will use FAISS, which uses the Facebook AI Similarity Search (FAISS) library.

```python
# create vector db for similarity search
vectorstore_db = FAISS.from_documents(splits, embeddings_model)
```
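Under the hood, the similarity search a vector store performs can be pictured as the brute-force sketch below. By default, LangChain’s FAISS wrapper builds an exact (flat) L2 index, so ranking stored vectors by Euclidean distance to the query embedding is a fair mental model; FAISS does this in optimized native code and reports squared distances, which gives the same ranking. The function name top_k and the 2-dimensional vectors are illustrative assumptions.

```python
import heapq
import math


def l2_distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def top_k(query, vectors, k):
    """Indices of the k stored vectors closest to query, nearest first."""
    return heapq.nsmallest(k, range(len(vectors)),
                           key=lambda i: l2_distance(query, vectors[i]))


# Hypothetical stored chunk embeddings.
store = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0], [0.5, 0.0]]
print(top_k([0.0, 0.1], store, 2))  # [0, 3]
```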

Creating a question-answering chain

Now that we have our text chunks stored as embeddings in the vector store, we need to create a way to retrieve those documents when we ask the model a question. The solution is to set up a retrieval question answering chain.

LangChain provides a couple of chains for retrieving documents. In our case, we will use the RetrievalQA chain, which combines a retriever with a question-answering chain (here, chain_type="stuff"). It fetches documents from the retriever and then uses the question-answering chain to answer the question based on those documents.

We will use the “stuff” document chain type. It will take a list of the documents and insert them all into a prompt, which is then passed to an LLM.
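A minimal sketch of the “stuff” strategy, assuming a plain Python string template: all retrieved chunks are joined into one context string and substituted into the prompt. The helper stuff_prompt and the shortened template are hypothetical; LangChain’s chain also separates documents with a blank line by default, but handles formatting through its own prompt objects.

```python
# Hypothetical, shortened prompt in the DeciLM-7B-instruct format.
TEMPLATE = """### System:
You are an AI assistant that follows instructions extremely well.
### User:
Use only the following information to answer user queries:
Context= {context}
Question= {question}
### Assistant:
"""


def stuff_prompt(chunks, question, template=TEMPLATE, separator="\n\n"):
    """'Stuff' every retrieved chunk into a single prompt string."""
    context = separator.join(chunks)
    return template.format(context=context, question=question)


print(stuff_prompt(["Chunk about unlearning.", "Chunk about forgetting."],
                   "What is deep unlearning?"))
```

Because every chunk goes into a single prompt, this strategy only works while the combined chunks fit in the model’s context window, which is one more reason to keep chunks small.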

Step 1: Specifying a retriever

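We will use the FAISS vector store as the retriever, with maximal marginal relevance (MMR) search (search_type="mmr"). Rather than returning only the chunks most similar to the query, MMR returns 8 chunks that are relevant to the query while also differing from one another, which cuts down on near-duplicate context.

```python
# MMR search: returns k=8 chunks that are similar to the question's
# embedding while avoiding near-duplicates of each other
retriever = vectorstore_db.as_retriever(search_type="mmr",
                                        search_kwargs={"k": 8})
```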

We can test the outputs from the retriever by passing in a query:

```python
retrieved_relevant_docs = retriever.get_relevant_documents(
    "What are the core concepts of unlearning in deep learning as presented in these papers, and how do the approaches differ or align?"
)

for doc in retrieved_relevant_docs:
    print(doc.page_content)
    print('\n')
```
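The sketch below shows the idea behind the mmr search type in plain Python. At each step it greedily picks the candidate with the best trade-off between relevance to the query and similarity to the chunks already picked, weighted by lambda_mult. As far as we know, LangChain’s FAISS retriever applies this kind of re-ranking to an initial set of fetch_k candidates (20 by default) with lambda_mult=0.5; the function names and the toy vectors here are illustrative assumptions.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def mmr_score(i, query, candidates, selected, lambda_mult):
    """Relevance to the query minus redundancy with already-selected items."""
    relevance = cosine(query, candidates[i])
    redundancy = max((cosine(candidates[i], candidates[j]) for j in selected), default=0.0)
    return lambda_mult * relevance - (1 - lambda_mult) * redundancy


def mmr(query, candidates, k, lambda_mult=0.5):
    """Greedy maximal marginal relevance; returns indices into candidates."""
    selected = []
    remaining = list(range(len(candidates)))
    while remaining and len(selected) < k:
        best = max(remaining,
                   key=lambda i: mmr_score(i, query, candidates, selected, lambda_mult))
        selected.append(best)
        remaining.remove(best)
    return selected


query = [0.8, 0.6]
candidates = [[1.0, 0.0], [0.99, 0.14], [0.0, 1.0]]  # 0 and 1 are near-duplicates
print(mmr(query, candidates, 2))  # [1, 2]
```

With these toy vectors, plain similarity search would return the two near-duplicates (indices 1 and 0), while MMR swaps the second one for the less similar but non-redundant candidate at index 2.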

Step 2: Creating a prompt

Language models are heavily influenced by the prompts they receive. The way we query or prompt the model can lead to varied outputs. By crafting a good prompt, we make it much more likely that the model’s answers align with our desired results.

LangChain provides prompt templates that let us pass instructions to our model. We will use the PromptTemplate class to pass in our query, the context, and the history of previous chats. The history helps the model account for earlier chats in case of any follow-up questions.

```python
custom_prompt_template = """
### System:
You are an AI assistant that follows instructions extremely well. Help as much as you can.
### User:
You are a research assistant for an artificial intelligence student. Use only the following information to answer user queries:
Context= {context}
History = {history}
Question= {question}
### Assistant:
"""

prompt = PromptTemplate(template=custom_prompt_template,
                        input_variables=["question", "context", "history"])
```

Step 3: Implementing chain memory

LLMs are, by default, stateless, meaning each incoming query is processed independently of other interactions. The only thing that exists for a stateless agent is the current input.

For our chatbot, remembering previous interactions is essential. So, we will allow the DeciLM-7B-instruct LLM to remember previous interactions with the user.

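We will use LangChain’s ConversationBufferMemory, which keeps the full conversation and injects it into the prompt. Its memory_key must match the {history} variable in our prompt, and input_key tells it which chain input holds the user’s question.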

```python
memory = ConversationBufferMemory(input_key="question",
                                  memory_key="history",
                                  return_messages=True)
```
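Conceptually, a conversation buffer is just a growing list of turns rendered into the prompt’s {history} slot. The hypothetical class below sketches that idea and is not LangChain’s implementation. With return_messages=True, as configured above, LangChain returns message objects rather than a formatted string; the Human/AI prefixes used here mirror its string format.

```python
class SimpleBufferMemory:
    """Hypothetical stand-in for a conversation buffer memory."""

    def __init__(self):
        self.turns = []  # list of (question, answer) pairs

    def save_context(self, question, answer):
        """Record one exchange after the model answers."""
        self.turns.append((question, answer))

    def load_history(self):
        """Render every stored turn as text for the {history} variable."""
        return "\n".join(f"Human: {q}\nAI: {a}" for q, a in self.turns)


memory_sketch = SimpleBufferMemory()
memory_sketch.save_context("What is deep unlearning?", "Removing a class from a trained model.")
print(memory_sketch.load_history())
```

Because the buffer keeps every turn, the history, and therefore the prompt, grows with each exchange, so long conversations eat into the context window.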

Step 4: Generating responses

Putting it all together, we create the final question-answering chain. It takes the question, retrieves the relevant chunks, and fills the prompt with them and with the chat history stored in memory.

```python
qa_chain = RetrievalQA.from_chain_type(
    llm=llm,
    chain_type="stuff",
    retriever=retriever,  # the MMR retriever defined above
    return_source_documents=True,
    chain_type_kwargs={
        "verbose": False,
        "prompt": prompt,  # the key must be lowercase "prompt"
        "memory": memory,
    },
)
```

We can now ask the model questions:

```python
query = "What are the core concepts of unlearning in deep learning as presented in these papers, and how do the approaches differ or align?"
response = qa_chain({"query": query})
response['result']
```

Ask a follow-up question:

```python
query = "What insights do these papers provide about the impact of unlearning on the overall performance and accuracy of deep learning models?"
response = qa_chain({"query": query})
response['result']
```

Inspect the chat history stored in the chain’s memory:

```python
print(qa_chain.combine_documents_chain.memory)
```

The printed memory looks similar to this:

chat_memory=ChatMessageHistory(messages=[HumanMessage(content='Based on the Deep unlearning pdf, what is deep unlearning?'), AIMessage(content='Deep unlearning is a technique that aims to remove a specific target class from a trained deep\nlearning model. This is achieved by splitting the training dataset into two parts: a retain dataset\ncontaining samples from the target class, and a forget dataset containing samples from all other\nclasses. The model is then trained on the retain dataset, while the forget dataset is used for\nunlearning the target class.'), HumanMessage(content='What are the key challenges involved?'), AIMessage(content='Key Challenges Involved in Deep Unlearning:\n1. Identifying the Target Class: Identifying the target class is crucial in deep unlearning, as it determines which samples need to be retained and which need to be forgotten. This can be a challenging task, as the target class may not be clearly identifiable or well-defined.\n2. Data Preparation: Preparing the data for deep unlearning can be time-consuming and resource-intensive, as it involves splitting the dataset into multiple parts.\n3. Model Training: Once the data is prepared, the model needs to be trained separately on the retain and forget datasets. This process can be challenging, as the model must learn to distinguish between the target class and other classes in the forget dataset.\n4. Evaluation: Evaluating the effectiveness of deep unlearning is essential to determine if the target class has been successfully removed from the model. This can')]) input_key='question' return_messages=True

In the preceding sections, we walked through the steps for generating responses from the DeciLM-7B-instruct model based on multiple PDFs. In the next section, we will combine these steps into functions and build a chatbot with Gradio. Through its interface, you can upload various PDFs and ask the model questions about them.

Using Gradio to build a question-answering chatbot application that can chat with PDFs

Now that we have explained all the components of our chatbot, we will create an actual chatbot with a user interface using Gradio.

You can follow along with this notebook.

Build Chat Interface

The interface of the application will have the following:

- A chat interface to chat with the bot.

- A text input field for entering queries.

- A file interface showing all the uploaded PDFs.

- One button to submit a question, which runs the question-answering chain, and one for uploading files.

Gradio’s Blocks API provides a container that holds all the components, which we arrange with Row and Column, along with the events that connect them.

```python
# The GRADIO Interface
with gr.Blocks() as demo:
    with gr.Row():
        with gr.Row():
            # Chatbot interface
            chatbot = gr.Chatbot(label="DeciLM-7B-instruct bot",
                                 value=[],
                                 elem_id='chatbot')
        with gr.Row():
            # Uploaded PDFs window
            file_output = gr.File(label="Your PDFs")
            with gr.Column():
                # PDF upload button
                btn = gr.UploadButton("📁 Upload a PDF(s)",
                                      file_types=[".pdf"],
                                      file_count="multiple")

    with gr.Column():
        with gr.Column():
            # Ask question input field
            txt = gr.Text(show_label=False, placeholder="Enter question")
        with gr.Column():
            # button to submit question to the bot
            submit_btn = gr.Button('Ask')
```

Bundling processes into functions

We now wrap the processes from the previous sections into functions.

Function to load the DeciLM-7B-instruct model:

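```python
def load_llm():
    """Load the DeciLM-7B-instruct LLM and wrap it for LangChain."""
    model_id = "Deci/DeciLM-7B-instruct"
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    # quant_config is the BitsAndBytesConfig defined earlier
    model = AutoModelForCausalLM.from_pretrained(model_id,
                                                 trust_remote_code=True,
                                                 device_map="auto",
                                                 quantization_config=quant_config)
    # build the pipeline from the model and tokenizer loaded above
    pipe = pipeline("text-generation",
                    model=model,
                    tokenizer=tokenizer,
                    do_sample=True,
                    temperature=1e-3,
                    return_full_text=False,
                    max_new_tokens=2048)
    return HuggingFacePipeline(pipeline=pipe)


llm = load_llm()  # load once at start-up; process_file() below uses this global
```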

Function to add a query to the chat interface.

```python
def add_text(history, text):
    # Add the user query to the chatbot history,
    # paired with an empty answer that the bot fills in later
    if not text:
        raise gr.Error('Enter text')
    history = history + [[text, '']]
    return history
```

Function to render uploaded PDFs in the file UI.

The files we upload will give the model its base knowledge, informing its responses.

```python
def upload_file(files):
    # Loads files when the file upload button is clicked
    # and displays them in the File window
    return files
```

Function to process the uploaded PDFs, called when the Ask button is clicked:

```python
def process_file(files):
    """Read each uploaded file and extract the text from all of its pages.

    The extracted text is stored in the pdf_text variable, which is then passed
    to the splitter to create the smaller chunks needed for easier information
    retrieval and to stay within DeciLM-7B-instruct's 8k-token context window.
    Uses the globals llm (from load_llm) and memory (the ConversationBufferMemory
    created as shown earlier).
    """
    pdf_text = ""
    for file in files:
        # Gradio may pass temp-file objects or plain paths, depending on the version
        path = file if isinstance(file, str) else file.name
        pdf = PyPDF2.PdfReader(path)
        for page in pdf.pages:
            pdf_text += page.extract_text() + "\n"

    embeddings_model = HuggingFaceEmbeddings(model_name="BAAI/bge-large-en-v1.5",
                                             model_kwargs={"device": "cuda"})

    # split into smaller chunks
    text_splitter = RecursiveCharacterTextSplitter(chunk_size=1024,
                                                   chunk_overlap=32)
    splits = text_splitter.create_documents([pdf_text])

    # create a FAISS vector store db:
    # embed the chunks and store them in the db
    vectorstore_db = FAISS.from_documents(splits, embeddings_model)

    # create a custom prompt
    custom_prompt_template = """
### System:
You are an AI assistant that follows instructions extremely well. Help as much as you can.
### User:
You are a research assistant for an artificial intelligence student. Use only the following information to answer user queries:
Context= {context}
History = {history}
Question= {question}
### Assistant:
"""
    prompt = PromptTemplate(template=custom_prompt_template,
                            input_variables=["question", "context", "history"])

    # set up the QA chain with memory
    qa_chain_with_memory = RetrievalQA.from_chain_type(
        llm=llm,
        chain_type="stuff",
        retriever=vectorstore_db.as_retriever(),
        return_source_documents=True,
        chain_type_kwargs={"verbose": True,
                           "prompt": prompt,
                           "memory": memory},
    )

    return qa_chain_with_memory
```

Function to run the question-answering chain and generate the response:

When the Ask button is clicked, this function builds the chain from the uploaded files and sends it the query. The chain’s memory carries the conversation history, so follow-up questions keep their context, and the answer is sent to the chat interface one character at a time to simulate streaming.

```python
def generate_bot_response(history, query, btn):
    """Take the query, the chat history and the uploaded files (btn)
    when the submit button is clicked, and generate a response to the query."""
    if not btn:
        raise gr.Error(message='Upload a PDF')

    qa_chain = process_file(btn)  # build the qa chain from the uploaded files
    bot_response = qa_chain({"query": query})

    # simulate streaming: reveal the answer one character at a time
    for char in bot_response['result']:
        history[-1][-1] += char
        time.sleep(0.05)
        yield history, ''
```
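The simulated streaming works because generate_bot_response is a generator: each yield hands Gradio the chat history with one more character of the answer, and the UI re-renders it. The hypothetical helper below isolates that pattern without Gradio or the model, assuming history is a list of [question, answer] pairs.

```python
import time


def stream_answer(history, answer, delay=0.0):
    """Append answer to the last [question, answer] pair one character
    at a time, yielding the updated history after each character."""
    history[-1][-1] = history[-1][-1] or ""  # tolerate a None placeholder
    for char in answer:
        history[-1][-1] += char
        time.sleep(delay)  # pause between "tokens" to mimic streaming
        yield history


for frame in stream_answer([["Is unlearning hard?", ""]], "Yes"):
    print(frame[-1][-1])
```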

The backend

The following are the processes that will happen in the backend:

- Rendering uploaded PDF files on the file UI.

- Handling uploaded PDF.

- Extracting text from the PDFs and creating text embeddings using Hugging Face embeddings.

- Storing vector embeddings in the FAISS vector store.

- Creating a RetrievalQA chain with LangChain.

- Embedding the query text and performing a similarity search over the embedded documents.

- Sending relevant documents to the DeciLM-7B-instruct model.

- Fetching the answer and streaming it to the chat UI.

Defining the Gradio events

These events are triggered when a specific action on the web UI is performed, making the app interactive and dynamic.

Gradio events use the component variables we defined earlier to communicate with the backend. We will describe the events our application needs:

- Upload Files: This will allow us to upload multiple PDFs.

- Ask event: Typing the question and pressing the ask button triggers the event.

The app raises an error if you ask a question without first uploading PDFs, or if you press the Ask button without typing a query.

```python
# The GRADIO Interface
with gr.Blocks() as demo:
    with gr.Row():
        with gr.Row():
            # Chatbot interface
            chatbot = gr.Chatbot(label="DeciLM-7B-instruct bot",
                                 value=[],
                                 elem_id='chatbot')
        with gr.Row():
            # Uploaded PDFs window
            file_output = gr.File(label="Your PDFs")
            with gr.Column():
                # PDF upload button
                btn = gr.UploadButton("📁 Upload a PDF(s)",
                                      file_types=[".pdf"],
                                      file_count="multiple")

    with gr.Column():
        with gr.Column():
            # Ask question input field
            txt = gr.Text(show_label=False, placeholder="Enter question")
        with gr.Column():
            # button to submit question to the bot
            submit_btn = gr.Button('Ask')

    # Gradio EVENTS
    # Event handler for uploading a PDF
    btn.upload(fn=upload_file, inputs=[btn], outputs=[file_output])

    # Event handler for submitting text question and generating response;
    # each .success() step runs only if the previous step did not raise an error
    submit_btn.click(
        fn=add_text,
        inputs=[chatbot, txt],
        outputs=[chatbot],
        queue=False
    ).success(
        fn=generate_bot_response,
        inputs=[chatbot, txt, btn],
        outputs=[chatbot, txt]
    ).success(
        fn=upload_file,
        inputs=[btn],
        outputs=[file_output]
    )

if __name__ == "__main__":
    demo.queue()  # generator (streaming) handlers need the queue in Gradio 3.x; it is on by default in 4.x
    demo.launch()  # launch app
```

Once you launch the app, Gradio displays the interface in the notebook, or you can use the link it prints to open the app in a new window.

Final thoughts

In conclusion, the combination of DeciLM-7B-instruct, LangChain, and FAISS offers a solid framework for developing chatbots capable of querying multiple PDF documents. This setup is particularly useful for industries dealing with substantial volumes of document-based information.

For developers, the key takeaways are how to use LangChain to build chat-with-PDF applications, techniques for processing PDF documents, and the role of text embeddings in information retrieval. The article also covered managing large-document processing and creating a responsive question-answering system.

Industries like legal, academic research, finance, and healthcare, which often handle extensive document databases, stand to benefit from this technology. These sectors also tend to have high user interaction volumes, making efficient and scalable solutions necessary.

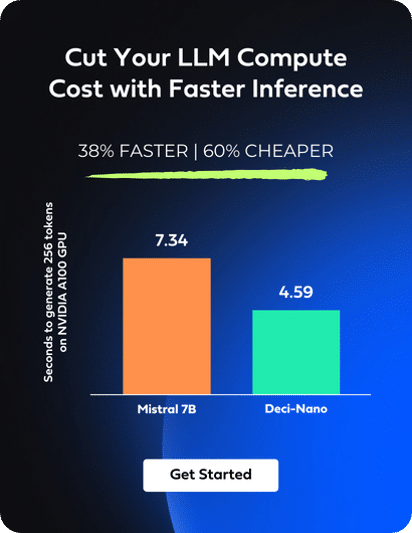

This is where Infery-LLM comes into play. Developed by Deci, Infery-LLM is an inference SDK that boosts the performance of models like DeciLM-7B-instruct in high-traffic scenarios. Its key features include high throughput, low latency, and cost-effectiveness on standard GPUs. This makes Infery-LLM ideal for scenarios with simultaneous user interactions, ensuring the system remains efficient even during peak usage periods. To see Infery-LLM in action, we invite you to try it out here.

If you want to explore further how Infery-LLM can enhance your LLM-based applications, get started today.