Are you looking to benchmark your models on different hardware types, or to optimize and deploy your deep learning models? We’ve got great news!

Deci’s online hardware fleet just got even better with new out-of-the-box support for the latest and greatest GPUs and CPUs for deep learning inference.

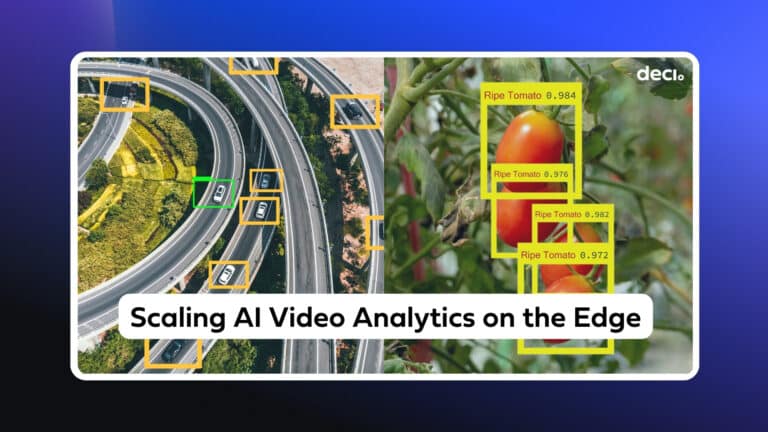

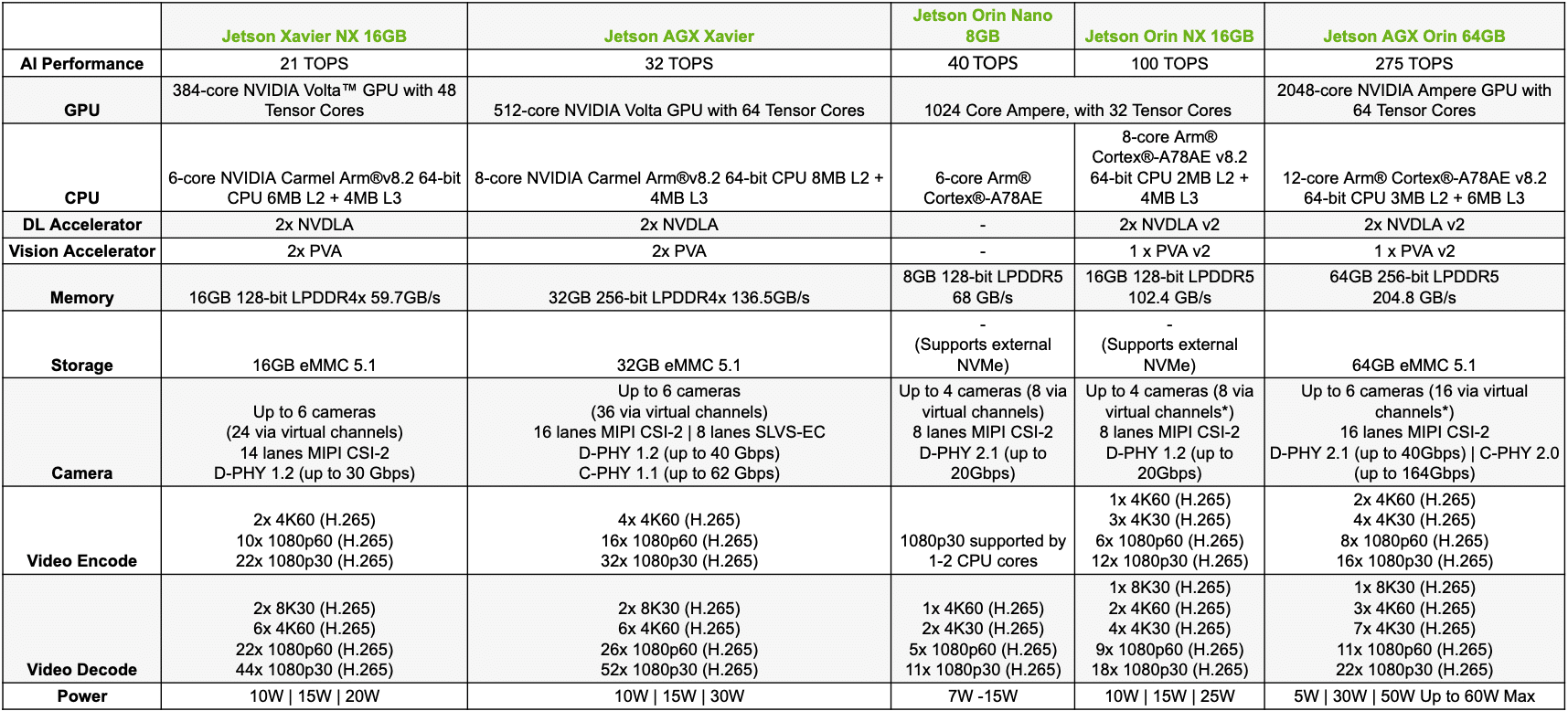

We now support NVIDIA A100 GPU instances on GCP, a powerful option for deep learning workloads. We have also added support for the NVIDIA Jetson AGX Orin, Jetson Orin NX 16GB, and Jetson Orin Nano 8GB edge devices, which power autonomous vehicles, drones, industrial robots, medical imaging systems, and other edge AI applications. With this added computing power, you can build sophisticated, intelligent systems without compromising on performance.

The Deci platform supports a broad range of hardware types, including CPU and GPU instances from leading hardware manufacturers such as Intel, AMD, and NVIDIA. We also support a variety of cloud providers, such as AWS and GCP, so you can enjoy greater flexibility when selecting cloud services.

With our latest release, you get the same benchmarking, optimization, and acceleration capabilities on an even broader range of hardware types and instances. And if we don’t support what you need yet, let us know! We’re committed to continuing to expand the platform to meet our customers’ needs.

How Can You Leverage Deci’s Online Hardware Fleet?

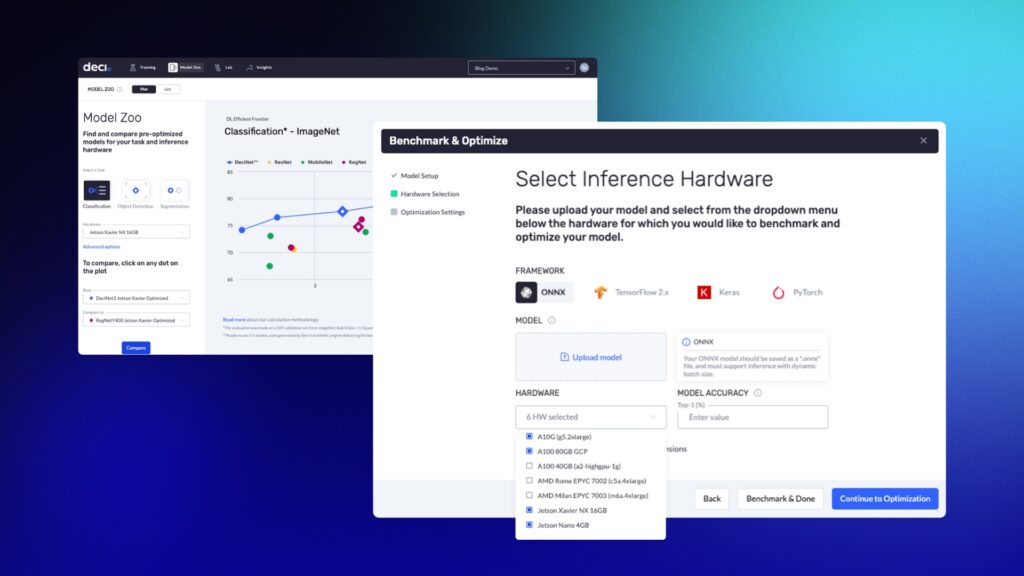

The Deci platform includes benchmarking and compilation tools to help you choose the best hardware type and instance for your models. These tools make it simple to test your models on different hardware types, compare the results, and make data-driven decisions. The platform also helps you compile and quantize your models (with Intel OpenVINO or NVIDIA TensorRT) to achieve the best possible inference performance.

Here’s an overview of the main capabilities:

Optimize Your Models for Multiple Hardware Types with a Click

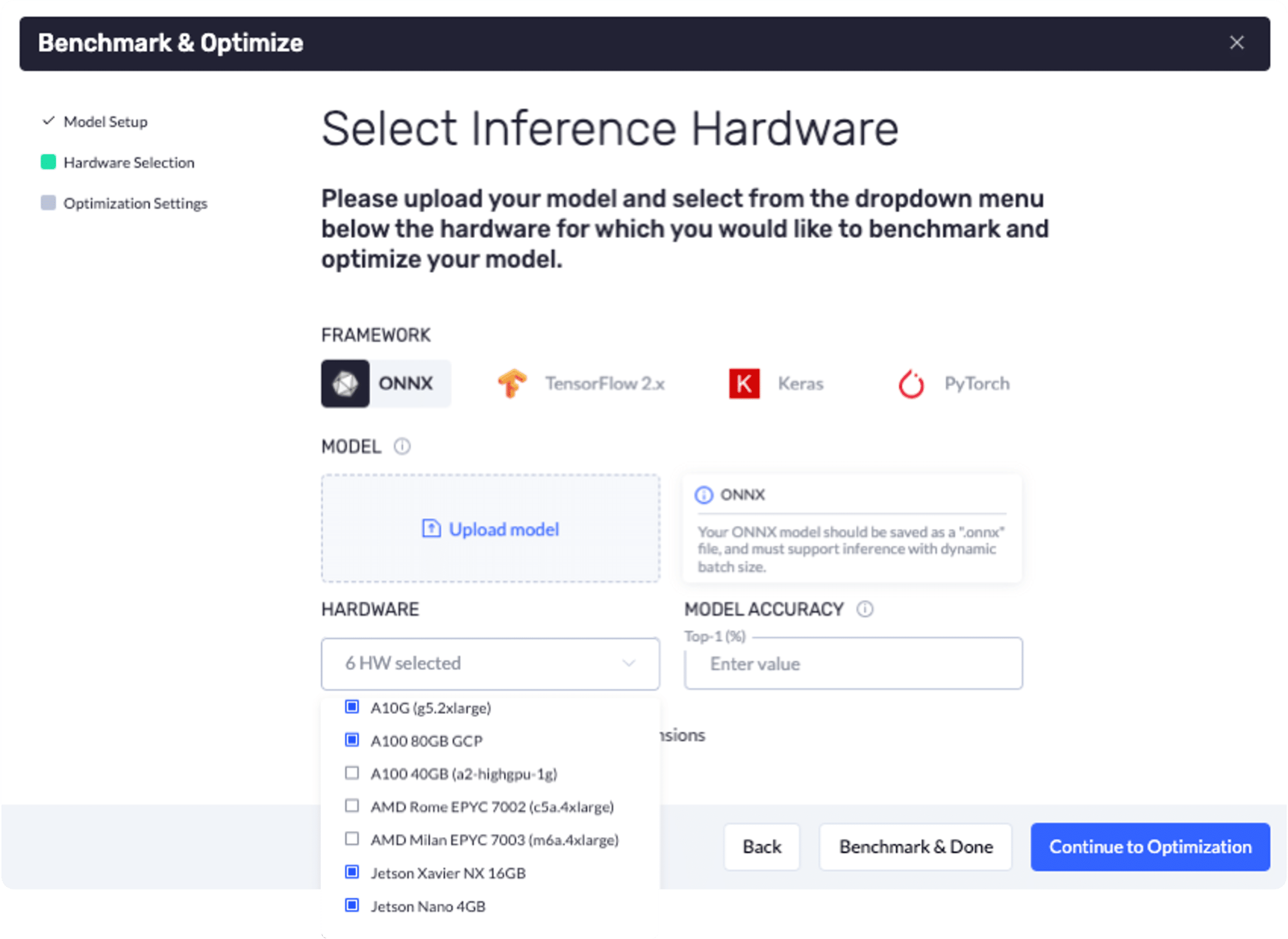

We understand that your work often involves multiple hardware types and devices. So how do you optimize the same model for more than one of them without extra hassle? We’ve got you covered: you can optimize a model for several hardware types at once, instead of repeating the process for each target, which saves you time and effort.

Easily Find the Best Inference Hardware for the Job

With Deci, you can easily compare a model’s performance on different hardware types, quickly see the results, and make more informed decisions about which hardware type best suits your use case.

For example, say you want to optimize a model for both GPU instances and edge devices. The Deci platform lets you compare your model’s performance on both targets and then choose the one that works best for your workload. All you have to do is upload the model and choose the hardware types you want to benchmark. It’s fast, effortless, and great for cutting costs. There’s no need to connect a device or own the hardware in advance; everything is available directly on our online hardware fleet.

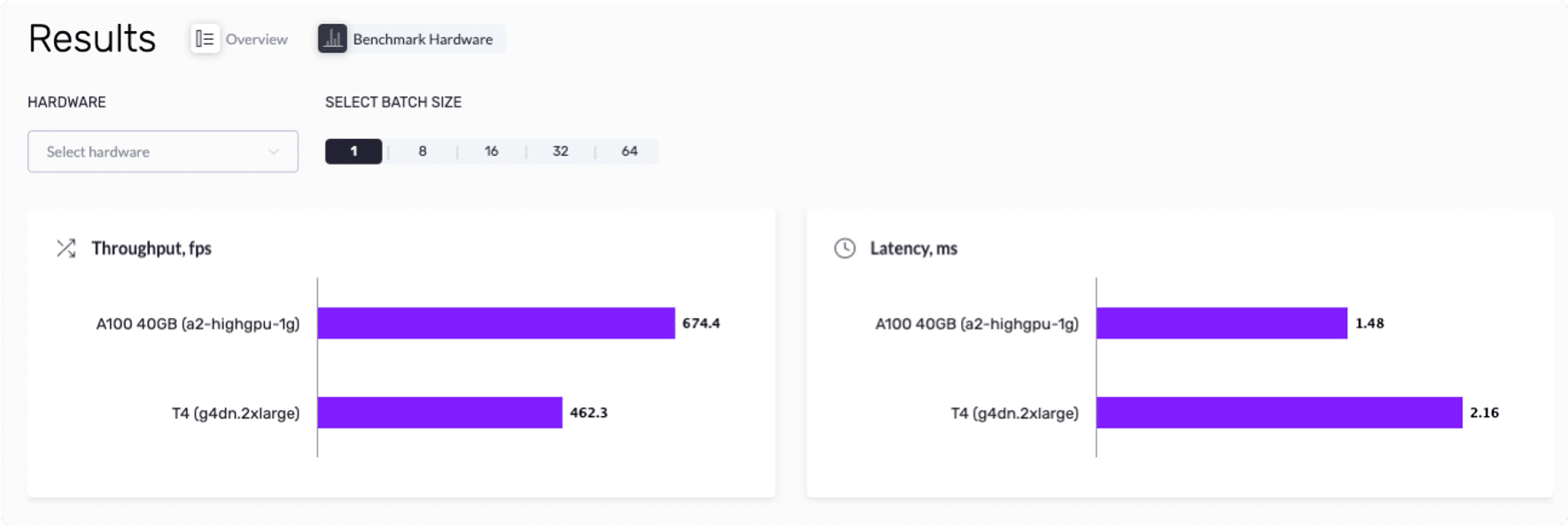

Debating which GPU Type or CPU Type is More Suitable? Deci Can Help

It’s now easier for you to compare and select the optimal hardware type for your needs. For example, you can compare NVIDIA A100 GPU vs. NVIDIA T4 GPU for high-performance computing and choose what works best. You can also compare the performance of any NVIDIA Jetson device to find the most appropriate edge device for your use case.

To explore this feature, go to the Benchmark Hardware tab in the platform, select the model you want to compare, and then choose all the hardware instances you want to include in the comparison.

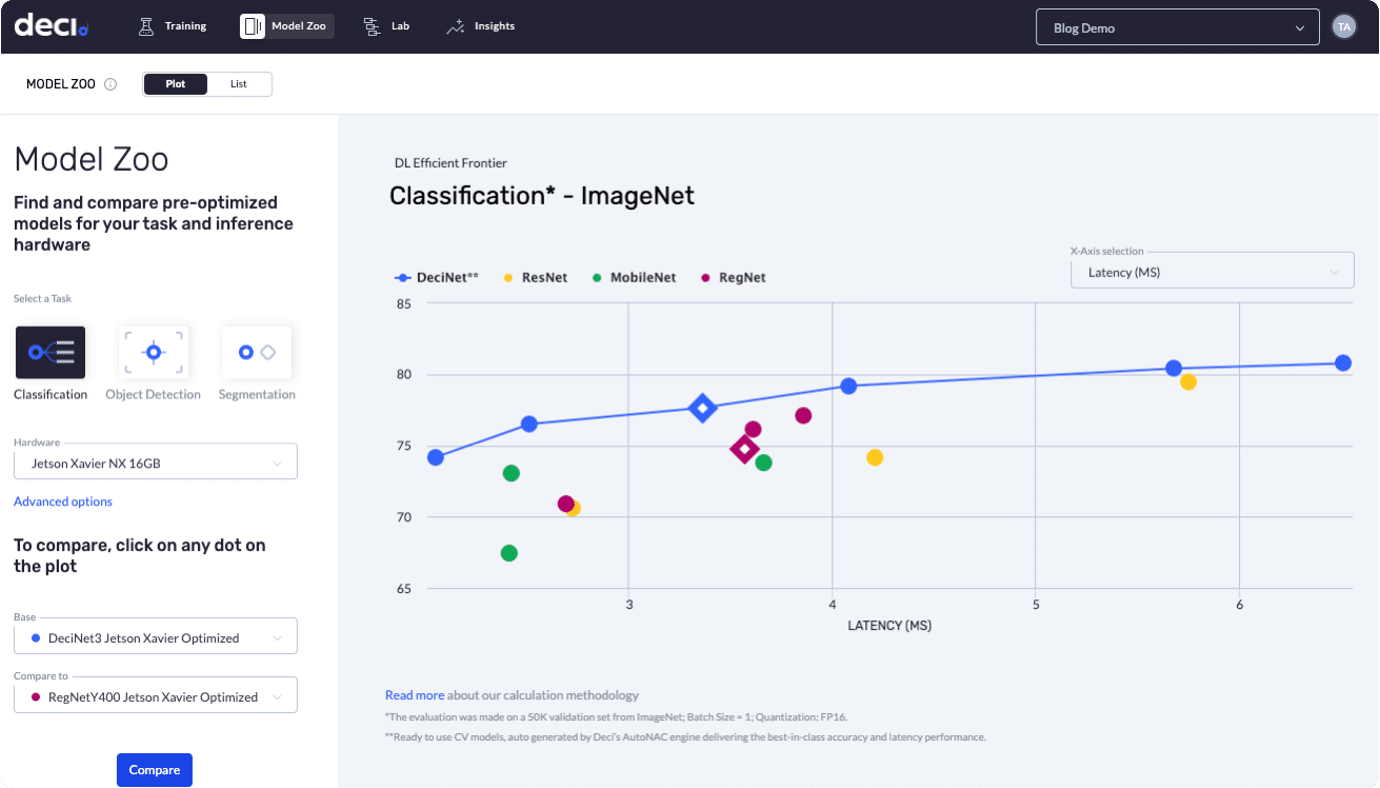

Compare Efficient Frontiers

The efficient frontier is the set of optimal performance values for different hardware types. Each point on the frontier represents a unique combination of hardware and software parameters for which no other combination improves one performance metric (such as latency) without giving up another (such as accuracy).
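To make the idea concrete, here is a minimal sketch of how such a frontier can be computed, assuming each candidate is described only by latency (lower is better) and accuracy (higher is better). The `Candidate` type, the `efficient_frontier` function, and all names and numbers below are hypothetical illustrations, not Deci benchmark results or Deci’s implementation.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    name: str          # hypothetical label for a model/hardware/compilation combination
    latency_ms: float  # lower is better
    accuracy: float    # higher is better


def efficient_frontier(candidates: list[Candidate]) -> list[Candidate]:
    """Return the candidates that no other candidate dominates.

    A candidate is dominated if another one is at least as fast and at
    least as accurate, and strictly better on one of the two metrics.
    """
    # Sort by latency ascending; at equal latency, put the most accurate first.
    ordered = sorted(candidates, key=lambda c: (c.latency_ms, -c.accuracy))
    frontier: list[Candidate] = []
    best_accuracy = float("-inf")
    for c in ordered:
        # Keep a point only if it beats the accuracy of every faster (or equally fast) point.
        if c.accuracy > best_accuracy:
            frontier.append(c)
            best_accuracy = c.accuracy
    return frontier


# Made-up numbers, for illustration only.
candidates = [
    Candidate("model-a-fp16", 2.0, 0.70),
    Candidate("model-a-int8", 1.2, 0.69),
    Candidate("model-b-fp16", 3.5, 0.76),
    Candidate("model-b-int8", 2.1, 0.68),  # dominated by model-a-fp16
    Candidate("model-c-fp16", 4.0, 0.75),  # dominated by model-b-fp16
]

for c in efficient_frontier(candidates):
    print(c.name, c.latency_ms, c.accuracy)
```

Sorting by latency first lets a single pass find the frontier: any point that is not more accurate than everything faster than it is dominated, so it is skipped. Computing this set separately for each hardware type gives one frontier per target to compare.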

Being able to compare the efficient frontier for different hardware types allows you to select the optimal combination of hardware and software for your specific use case. This comparison is especially valuable when you’re working with large datasets or computationally intensive models, where optimizing the hardware configuration can make a huge difference in performance.

All efficient frontiers are just one click away, under the “Model Zoo” tab in the Deci platform, where you can select the task and hardware type you want to compare. You can plot two different models together for a detailed comparison, or clone a model to compare it on different hardware.

Optimize Your Deep Learning Solution

The new hardware support makes it even easier to create, optimize, and deploy innovative deep learning solutions. The latest version of the Deci platform includes an expanded list of instances, benchmarking and compilation tools, and resources for model optimization, all working together to make your development process simpler and more efficient. Support for GCP gives you more flexibility in choosing cloud services. We are constantly expanding the platform to give our customers more options, so keep an eye out for additional hardware support in the near future.

Looking to simplify your development process? Talk with our experts to learn how you can leverage the Deci platform to build and deploy better models faster.