File formats like GGML and GGUF for local Large Language Models (LLMs) have democratized access to this technology and reduced the costs associated with running language models.

GGUF and GGML are file formats for quantized models developed by Georgi Gerganov. To interact with these files, you need to use llama.cpp. These formats enable efficient inference from a single file, making the LLM deployment process much more simple and cost-effective.

GGML, primarily a tensor library, laid the groundwork for these file formats, allowing for LLMs’ initial storage and processing.

😬 The Limitations of GGML and the Rise of GGUF

GGML was an earlier iteration in the evolution of LLM file formats and served as a basis for future improvements. These earlier versions are now mostly compatible only with legacy systems like KoboldCCP, thanks to deliberate efforts by developers to maintain backward compatibility. However, in contemporary usage, especially with versions of llama.cpp, GGML’s relevance has waned.

The primary reasons are its slower processing capabilities and lack of advanced features compared to GGUF. It is important to keep in mind that GGML support is no longer available in llama.cpp. As a result, it won’t be able to load them anymore. Therefore, even if you come across an application that prompts you to download a GGML model, it is advised to opt for the GGUF version instead. The GGUF version will function correctly, despite what the app’s documentation may suggest.

🚨 The Emergence of GGUF

GGUF, introduced by the llama.cpp team on August 21, 2023, is not just another standard but a direct and substantial improvement over GGML.

It’s a more efficient and flexible way of storing and using LLMs for inference. While GGML was a valuable foundational effort, GGUF addresses its limitations and opens up new possibilities in the AI landscape, potentially shaping the future of model handling and deployment. Upon its release, GGUF immediately rendered the older GGML formats obsolete, ushering in a new era in LLM handling. This transition is supported by a robust community of developers, model maintainers, practitioners like theBloke, and other prominent figures expected to rapidly adapt and convert existing models into the GGUF format.

This binary format is tailored to rapidly load and save models, with a user-friendly approach to reading and handling model files. The enthusiasm surrounding its release reflects the high hopes pinned on its ability to significantly enhance model development and deployment. GGUF’s introduction as a user-friendly solution lowers production costs. It democratizes using LLMs, making these technologies more accessible and efficient.

GGUF offers numerous quality-of-life improvements, making it unlikely for users to prefer older versions:

- 💾 Single-file Deployment: Ensuring ease of distribution and loading without reliance on external files.

- 👐🏼 Extensibility: It allows adding new features to GGML-based executors and information to GGUF models, maintaining compatibility with existing models.

- 🗺️ mmap compatibility: Models can be loaded using memory mapping for enhanced speed in loading and saving.

- 😊 User-friendly Design: Simplified model loading and saving process, eliminating the need for external libraries.

- 💿 Comprehensive Information Storage: GGUF files contain all necessary data to load a model, requiring no additional input from the user.

- 📊 Quantization Compatibility: GGUF supports quantization. In this process, model weights, typically stored as 16-bit floating point numbers, are scaled down (e.g., to 4-bit integers) to save computational resources without significantly impacting the model’s power. This is particularly useful for reducing the demands on expensive GPU RAM.

An essential change in GGUF is adopting a key-value structure for hyperparameters, now known as metadata. This shift permits the addition of new metadata without compromising compatibility with existing models. This structure allows for easy integration of new information, preserving compatibility with existing GGUF models.

𝌙 Advancements and Applications of GGUF

Since August 2023, GGUF has seen widespread acceptance in the developer community.

The GGUF format has all the necessary metadata included in the model file, eliminating the need for additional files such as tokenizer_config.json.

The format is an improvement over its predecessors, with enhanced tokenization, support for special tokens, and better metadata handling. It is designed for faster model loading, ease of use, and adaptability to future changes.

👨🏽💻 User Experience and Practicality of GGUF

GGUF has been designed for simplicity and efficiency.

Requiring minimal code for model loading negates the need for external libraries. Its built-in data store streamlines the handling of parameters that were previously managed manually. The format stores handling logic within the model file, eliminating the need for repetitive parameter specification.

This user-centric approach means that running llama.cpp with a GGUF model requires only the model file and a prompt.

The format is equipped to handle new model types with unique quirks by appending a list of settings directly to the model file. This feature enables automatic detection and handling of different model types by llama.cpp.

Pros and Cons of GGUF vs. GGML

✅ Pros:

- Addresses GGML limitations, offering a more enhanced user experience.

- Ensures extensibility and compatibility with older models.

- Focuses on stability, reducing the occurrence of breaking changes.

- Supports a diverse range of models, extending its application beyond llama models.

🙅🏽 Cons:

- Transitioning existing models to GGUF can be time-consuming.

- Users and developers need to adapt to this new format.

🎰 There are many ways to run a GGUF model

And in this blog, I’ll show you my five favorite ways:

1) 🦙 pure llama.cpp

2) 🐍 + 🦙 python llama.cpp

3) 🦜🔗 + 🦙 LangChain + llama.cpp

4) 🗂️ + 🦙 LlamaIndex + llama.cpp

5) 🤖 ctransformers

Download the file locally

Start by downloading the model from HuggingFace. In this tutorial, you’ll use Deci/DeciLM-7B-instruct-GGUF. The following code will download the model from Hugging Face, specifically the decilm-7b-uniform-gqa-q8_0.gguf model file, and save it to your current directory.

%%capture !pip install huggingface-hub hf-transfer

import os os.environ["HF_HUB_ENABLE_HF_TRANSFER"] = "1" !huggingface-cli download \ Deci/DeciLM-7B-instruct-GGUF \ decilm-7b-uniform-gqa-q8_0.gguf \ --local-dir . \ --local-dir-use-symlinks False

downloading https://huggingface.co/Deci/DeciLM-7B-instruct-GGUF/resolve/main/decilm-7b-uniform-gqa-q8_0.gguf to /root/.cache/huggingface/hub/tmpsjrgn13t decilm-7b-uniform-gqa-q8_0.gguf: 100% 7.55G/7.55G [00:29<00:00, 254MB/s] ./decilm-7b-uniform-gqa-q8_0.gguf

MODEL_PATH = "/content/decilm-7b-uniform-gqa-q8_0.gguf"

🥞 Below is the string prompt we’ll use to generate text. You can use anything you want here. I love pancakes, they’re my favorite, so I wanna see if the LLM can teach me a thing or two about making some good flapjacks.

STRING_PROMPT = """ ### System: You are an AI assistant that follows instructions exceptionally well. Be as helpful as possible. ### User: How do I make the most delicious pancakes the world has ever tasted? ### Assistant: """

STRING_PROMPT_TEMPLATE = """### System:

You are an AI assistant that follows instruction extremely well. Help as much as you can.

### User:

{question}

### Assistant:

"""

🦙 “pure” llama.cpp

llama.cpp is a C/C++ implementation of the LLaMA (Large Language Model Meta AI) developed by Meta (Facebook). It’s designed to efficiently run large language models (LLMs) like LLaMa on various devices and systems, offering features like:

- Efficient LLM Inference: Allows for running text-generating AI models efficiently, supporting different model sizes (e.g., 7B, 13B).

- Customization and Interaction: Supports various types of interactions and customizations, including persistent interaction, where prompts and responses can be saved and resumed.

- Grammar Constrained Output: Can force model outputs in specific formats, like JSON.

- User-friendly Setup: Involves creating a virtual environment and installing necessary packages, such as Llama-cpp-python, to run models.

- Real-world Applications: Offers portability, speed, and customization, making it suitable for a range of AI and data engineering projects.

For more detailed information and guides on how to set up and use Llama.cpp, you can refer to the GitHub repo.

To install llama.cpp you follow these steps:

The installation process for llama.cpp based on the command you provided can be outlined in the following steps:

- Clone the Repository:

- Use the Git command to clone the llama.cpp repository from GitHub to your local machine. This is done using the command

git clone https://github.com/ggerganov/llama.cpp. This command downloads all the necessary files from the repository to your local system in a directory namedllama.cpp.

- Use the Git command to clone the llama.cpp repository from GitHub to your local machine. This is done using the command

- Change Directory:

- Once the repository is cloned, change your current directory to the

llama.cppdirectory using thecd llama.cppcommand. This ensures that all subsequent commands are run in the context of the cloned repository.

- Once the repository is cloned, change your current directory to the

- Compile the Project:

- Compile the source code with the

makecommand. The commandmake -j LLAMA_CUBLAS=1is used for this purpose.make: This tool automates the compilation process by reading the Makefile in the directory and executing its instructions.-j: This flag enables parallel compilation, which can speed up the build process on systems with multiple cores.LLAMA_CUBLAS=1: This is an environment variable setting which indicates NVIDIA’s cuBLAS library should be enabled during the compilation. This is used to leverage GPU acceleration.

- Compile the source code with the

!git clone https://github.com/ggerganov/llama.cpp && cd llama.cpp && make -j LLAMA_CUBLAS=1

You can inspect all of the command line arguements by running the code below:

%%bash /content/llama.cpp/main -h

Several arguments are specifically related to text generation, you can see a full list here.

Here’s a breakdown of the relevant options:

- Prompt and Generation Customization

-p PROMPT, --prompt PROMPT: Sets the initial prompt for generation.-f FNAME, --file FNAME: Specifies a file containing the prompt.-n N, --n-predict N: Sets the number of tokens to predict/generate. I set mine to-1to allow max tokens.--prompt-cache FNAME: Uses a file to cache the prompt state for faster startups.

- Sampling and Generation Control

- Various sampling parameters like

--top-k,--top-p,--min-p,--tfs, and--typical, control the randomness and diversity of the text generation. --repeat-penalty N,--presence-penalty N,--frequency-penalty N: These options help in controlling the repetitiveness of the generated text.--temp N: Sets the temperature for generation, influencing the randomness of the output.

- Various sampling parameters like

- Text Processing Options:

--multiline-input: Allows writing or pasting multiple lines without special end characters.-e, --escape: Processes escape sequences in the prompt.

- Context and Batch Processing:

-c N, --ctx-size N: Defines the size of the prompt context.-b N, --batch-size N: Sets the batch size for prompt processing.

- GPU Layers

--n-gpu-layers: This setting determines how many layers of LLM are loaded into the GPU memory. Storing these layers in the GPU memory can significantly speed up the computations performed by the model since GPUs are designed to handle such parallel computations efficiently. I set it to an arbitrarily large number to get as many layers on the GPU as possible

These options enable customization of the text generation process, allowing users to tailor the behavior of the LLM to their specific needs.

Note: -s "$STRING_PROMPT" "$MODEL_PATH" in the cell below allow you to use Python variables in a command line argument. The $1 corresponds to STRING_PROMPT and $2 corresponds to MODEL_PATH

%%bash -s "$STRING_PROMPT" "$MODEL_PATH" /content/llama.cpp/main -m "$2" \ --log-disable \ -n -1 \ --n-gpu-layers 200 \ --color \ --temp 0.25 \ -p "$1"

### System: You are an AI assistant that follows instructions exceptionally well. Be as helpful as possible. ### User: How do I make the most delicious pancakes the world has ever tasted? ### Assistant: To make the most delicious pancakes (pancakes) in the world has ever tasted, follow these steps: Ingreat Ingredients: - 1 cup all-purpose flour - 2 tablespoon sugar - 1 teaspoon baking powder - 1/4 teaspoon salt - 1 egg - 1/3 cup milk - 1/4 cup yogurt - 1 tablespoon vanilla extract - 1 tablespoon butter, melted - oil for frying Instructions: 1. In a large bowl, mix the flour, sugar, baking powder and salt together. 2. Add egg, milk, yogurt, vanilla and melted butter to the mixture and whisk until smooth. 3. Heat a pan with oil on medium heat 4. Pour batter into the hot pan in circles using a ladle 5. Cook for 1-2 minutes or until golden brown on each side 6. Serve warm with your favorite toppings Enjoy!ggml_init_cublas: GGML_CUDA_FORCE_MMQ: no ggml_init_cublas: CUDA_USE_TENSOR_CORES: yes ggml_init_cublas: found 1 CUDA devices: Device 0: Tesla T4, compute capability 7.5, VMM: yes

The following code will allow you to spin up a server so you can have a chatbot-like experience with the model. Watch the video here to see!

from google.colab.output import eval_js

print(eval_js("google.colab.kernel.proxyPort(12345)"))

%cd /content/llama.cpp !./server -m $MODEL_PATH --log-disable -ngl 200 -c 0 --port 12345

🐍 + 🦙 llama-cpp-python

llama-cpp-python provides Python bindings for the llama.cpp library.

This package allows for OpenAI-like API use, LangChain compatibility, and supports an OpenAI-compatible web server, which can be used as a local Copilot replacement. It also provides function calling support and vision API support. The documentation for installation and usage is detailed and includes instructions for different hardware accelerations like BLAS, CUDA, and Metal. The project is licensed under the MIT license.

For more details, you can visit the repository.

👨🏽🔧 Installing llama-cpp-python

Lets install llama-cpp-python with specific build options, notably cuBLAS for CUDA support, and ensuring CMake is used in the build process. This will optimize the library for better performance on systems with NVIDIA GPUs, like Google Colab.

!CMAKE_ARGS="-DLLAMA_CUBLAS=on": This sets an environment variableCMAKE_ARGSwith the value-DLLAMA_CUBLAS=on. This means that during the installation, the CMake build system should enable CUDA BLAS (cuBLAS) support. cuBLAS is a GPU-accelerated version of the BLAS (Basic Linear Algebra Subprograms) library, which allow you to use GPU power for generations.In general:- Use

CuBLASif you have CUDA and an NVidia GPU - Use

METALif you are running on an M1/M2 MacBook - Use

CLBLASTif you are running on an AMD/Intel GPU

- Use

FORCE_CMAKE=1: This sets another environment variableFORCE_CMAKEto1. This instructs the installation process to forcibly use CMake, even if other build systems are available or previously used.pip install llama-cpp-python: This is the command to install thellama-cpp-pythonpackage via pip, Python’s package installer.

%%capture !CMAKE_ARGS="-DLLAMA_CUBLAS=on" FORCE_CMAKE=1 pip install llama-cpp-python

In the Llama class from the llama-cpp-python module, relevant arguments for text generation include:

prompt: The initial text to start generation.max_tokens: The maximum number of tokens to generate.temperature: Controls randomness in the output.top_p,min_p,typical_p: Parameters for nucleus and typical sampling, influencing the diversity of generation.stop: A list of strings that, when encountered, stop the generation.frequency_penalty,presence_penalty: Penalties applied to discourage repetition.top_k: The top-k sampling parameter, controlling the randomness of token selection.seed: Seed for random number generation, influencing the output’s randomness.logit_bias: Adjusts the likelihood of certain tokens appearing in the completion.grammar: Applies a grammar constraint to the output.

These parameters allow fine-tuning of the text generation process, offering control over aspects like diversity, randomness, and structure of the generated text.

Note, the model is instantiated with n_gpu_layers=-1, which means it will load the maximim number of model layers onto the GPU.

from llama_cpp import Llama

import json

llm = Llama(model_path=MODEL_PATH, n_gpu_layers=-1)

result = llm(

prompt=STRING_PROMPT,

max_tokens=4096,

echo=False

)

AVX = 1 | AVX_VNNI = 0 | AVX2 = 1 | AVX512 = 1 | AVX512_VBMI = 0 | AVX512_VNNI = 0 | FMA = 1 | NEON = 0 | ARM_FMA = 0 | F16C = 1 | FP16_VA = 0 | WASM_SIMD = 0 | BLAS = 1 | SSE3 = 1 | SSSE3 = 1 | VSX = 0 |

print(result['choices'][0]['text'])

To create the most delicious pancakes (pancake) in the world has tasted, follow these steps: 1. Ingredients - 2 cups all-purpose flour -3 teaspoon baking powder of powder 1/2 cup sugar 4 egg 1 teaspoon vanilla extract 1/3 cup milk 1/4 cup butter 2. Preparation Mix the dry ingredients in a bowl and whisk them properly. 3. Add milk, egg, and vanilla, and mix. 4. Then add butter to it. 5. Make into pancakes of your desired size on pan and cook both sides golden brown. 6. Serve with syrup or honey and fruits (optional).

🦜🔗 + 🦙 LangChain + llama-cpp-python

This is straight forward.

You’re already familiar with the installation of llama-cpp-python, and LangChain is easy to install. If you want more detail, you can always visit the documentation.

%%capture !pip install langchain # We already installed llama-cpp-python above, but if you are going for a fresh # install and jumping right to this cell, you need to uncomment the below # !CMAKE_ARGS="-DLLAMA_CUBLAS=on" FORCE_CMAKE=1 pip install llama-cpp-python

from langchain.llms import LlamaCpp from langchain.prompts import PromptTemplate from langchain.chains import LLMChain from langchain.callbacks.manager import CallbackManager from langchain.callbacks.streaming_stdout import StreamingStdOutCallbackHandler prompt = PromptTemplate(template=STRING_PROMPT_TEMPLATE, input_variables=["question"]) # allows for streaming output callback_manager = CallbackManager([StreamingStdOutCallbackHandler()])

The LlamaCpp class in LangChain will use the same hyperparameters for text generation that were mentioned above for llama-cpp-python.

Two of the most important parameters for use with GPU are:

n_gpu_layers– determines how many layers of the model are offloaded to your GPU.n_batch– how many tokens are processed in parallel.

You can visit the source code for more information.

llm = LlamaCpp(model_path=MODEL_PATH,

temperature=0.75,

max_tokens=512,

callback_manager=callback_manager,

verbose=True,

n_gpu_layers=-1,

)

AVX = 1 | AVX_VNNI = 0 | AVX2 = 1 | AVX512 = 1 | AVX512_VBMI = 0 | AVX512_VNNI = 0 | FMA = 1 | NEON = 0 | ARM_FMA = 0 | F16C = 1 | FP16_VA = 0 | WASM_SIMD = 0 | BLAS = 1 | SSE3 = 1 | SSSE3 = 1 | VSX = 0 |

llm_chain = LLMChain(prompt=prompt, llm=llm)

llm_chain.run("How do I make the most delicious pancakes the world has ever tasted?")

To make the most delicious pancakes the world has ever tasted, follow these steps: Ingredients: - 1 cup all-purpose flour - 2 tablespoons sugar - 2 teaspoons baking powder - ½ teaspoon salt - 1 cup milk - 1 egg - 2 tablespoons melted butter or oil - 1/4 teaspoon vanilla extract (optional) Instructions: 1. In a large mixing bowl, whisk together the flour, sugar, baking powder, and salt until well combined. 2. Create a well in the center of the dry ingredients and add the milk, egg, melted butter or oil, and vanilla extract (if using) to form a smooth batter. Mix well until all ingredients are fully incorporated. Be careful not to overmix, as this can result in tougher pancakes. 3. Heat a non-stick skillet or griddle over medium heat. Once the pan is hot, use a 1/4 cup measuring cup to pour the batter onto the pan for each pancake. Cook until bubbles form on top and the edges of the pancake start to dry out, approximately 2-3 minutes. 4. Flip the pancakes using a spatula and cook until the bottoms are golden brown and crisp, about 1-2 minutes more. Repeat with remaining batter until it's all used up. 5. Serve your delicious homemade pancakes hot, topped with butter and pure maple syrup or any of your favorite pancake toppings. Enjoy!

🗂️ + 🦙 LlamaIndex + llama-cpp-python

To properly format the model inputs, you’ll need to pass in messages_to_prompt and completion_to_prompt functions, depending on the model you’re using.

If you need to include any kwargs during initialization, you can set them in model_kwargs. A complete list of available model kwargs can be found in the LlamaCPP documentation.

If you need to set any kwargs during inference, you can set them in generate_kwargs. You can find a complete list of generate kwargs here.

The example below shows how to configure the model with all the defaults.

Check out the LlamaIndex documentation for more details and use cases.

!pip install llama-index

%%capture !pip install llama-index # We already installed llama-cpp-python above, but if you are going for a fresh # install and jumping right to this cell, you need to uncomment the below # !CMAKE_ARGS="-DLLAMA_CUBLAS=on" FORCE_CMAKE=1 pip install llama-cpp-python

from llama_index.llms import LlamaCPP

from llama_index.llms.llama_utils import (

messages_to_prompt,

completion_to_prompt,

)

Note: If you get the following error message: llamaindex ImportError: cannot import name 'Iterator' from 'typing_extensions'

Just restart the session, and make sure you instantaite the MODEL_PATH and STRING_PROMPT_TEMPLATE variable, and you’ll be good to go!

llm = LlamaCPP(

# You can pass in the URL to a GGUF model to download it automatically

model_url=None,

# or, you can set the path to a pre-downloaded model instead of model_url

model_path=MODEL_PATH,

temperature=0.1,

max_new_tokens=512,

context_window=4096,

# generation kwargs from llama-cpp-python

generate_kwargs={},

# model kwargs from llama-cpp-python, set n_gpu_layers to -1 to get max layers on GPU

model_kwargs={"n_gpu_layers": -1},

messages_to_prompt=messages_to_prompt,

completion_to_prompt=completion_to_prompt,

verbose=True

)

AVX = 1 | AVX_VNNI = 0 | AVX2 = 1 | AVX512 = 1 | AVX512_VBMI = 0 | AVX512_VNNI = 0 | FMA = 1 | NEON = 0 | ARM_FMA = 0 | F16C = 1 | FP16_VA = 0 | WASM_SIMD = 0 | BLAS = 1 | SSE3 = 1 | SSSE3 = 1 | VSX = 0 |

response = llm.complete(STRING_PROMPT)

print(response.text)

To make the most delicious pancakes the world has ever tasted, follow these steps: 1. Preheat the pan or griddle to medium-high heat and grease it with butter or oil. 2. Mix 1 cup of all-purpose flour, 1 teaspoon baking powder, 1/4 teaspoon sugar, 1 egg, 1/4 cup milk, 1 tablespoon vanilla extract in a bowl. 3. Pour the batter into pan and cook until bubbles form on top and bottom is golden brown. 4. Flip and cook until other side is golden brown. 5. Serve with butter and syrup or any toppings of your choice.

And, of course, you can use the stream_complete endpoint to stream the response as it’s being generated rather than waiting for the entire response to be generated.

response_iter = llm.stream_complete(STRING_PROMPT)

for response in response_iter:

print(response.delta, end="", flush=True)

To make theLlama.generate: prefix-match hit most delicious pancakes the world has ever tasted, follow these steps: 1. Preheat the pan or griddle to medium heat. 2. Mix 1 cup of flour with 1 egg and 1 cup milk in a bowl. 3. Add sugar and salt to taste. 4. Stir well until smooth. 5. Pour batter into hot pan, cook on both sides till golden brown. 6. Serve with butter and syrup or jam.

🤖 CTransformers

CTransformers is a Python library providing bindings for Transformer models implemented in C/C++ using the GGML library.

Key Features and Capabilities:

- Model Loading: CTransformers allows loading language models from a local file or a remote repository. You can specify the model path or a Hugging Face Hub model repository ID.

- Support for Different Model Types: It supports various model types and can specify the specific model file and configuration.

- Text Generation and Tokenization: CTransformers supports generating new tokens from a list of tokens, detokenizing a sequence of tokens into text, and tokenizing text into a list of tokens.

- Sampling Methods: It includes many familiar parameters from Hugging Face Transformers for sampling tokens from the model, like

top_k,top_p, andtemperature.

👩🏿🔧 Installation and Usage:

- CTransformers can be installed using pip. It is also integrated with LangChain, providing a unified interface for interacting with models and generating text.

It offers a unified interface for a variety of quantized models, supporting various features including CUDA and Metal for GPU acceleration. The library is designed for efficient AI model handling and can be integrated with other frameworks like LangChain. CTransformers supports streaming outputs, loading models directly from Hugging Face Hub, and includes experimental features like GPTQ. Installation is straightforward via pip. The library is licensed under the MIT license.

For more information, visit the GitHub repository.

%%capture !pip install ctransformers

CTransformers supports the following generation parameters, which you can pass into AutoConfig.

| Parameter | Type | Description | Default |

|---|---|---|---|

top_k | int | The top-k value to use for sampling. | 40 |

top_p | float | The top-p value to use for sampling. | 0.95 |

temperature | float | The temperature to use for sampling. | 0.8 |

repetition_penalty | float | The repetition penalty to use for sampling. | 1.1 |

last_n_tokens | int | The number of last tokens to use for repetition penalty. | 64 |

seed | int | The seed value to use for sampling tokens. | -1 |

max_new_tokens | int | The maximum number of new tokens to generate. | 256 |

stop | List[str] | A list of sequences to stop generation when encountered. | None |

stream | bool | Whether to stream the generated text. | False |

reset | bool | Whether to reset the model state before generating text. | True |

batch_size | int | The batch size to use for evaluating tokens in a single prompt. | 8 |

threads | int | The number of threads to use for evaluating tokens. | -1 |

context_length | int | The maximum context length to use. | -1 |

gpu_layers | int | The number of layers to run on GPU. | 0 |

from ctransformers import AutoModelForCausalLM, AutoConfig, Config

conf = AutoConfig(Config(temperature=0.25,

max_new_tokens=1024,

gpu_layers=200))

llm = AutoModelForCausalLM.from_pretrained(MODEL_PATH,

model_type="DeciLM",

config = conf,

device_map="cuda")

print(llm(STRING_PROMPT))

Or, you can stream!

for text in llm(STRING_PROMPT, stream=True):

print(text, end="", flush=True)

Here's a recipe for making the most delicious pancakes: Ingredients: - 1 cup all-purpose flour - 2 tablespoons sugar - 2 teaspoons baking powder - 1/4 teaspoon salt - 1 cup milk - 1 egg - 3 tablespoons melted butter or oil - Optional: vanilla extract, cinnamon, blueberries, chocolate chips, etc. Instructions: 1. In a large mixing bowl, whisk together the flour, sugar, baking powder, and salt until well combined. 2. In a separate bowl, whisk together the milk, egg, and melted butter or oil. 3. Pour the wet ingredients into the dry ingredients and mix gently with a spoon or spatula until just combined (do not overmix). If adding any additional flavorings like vanilla extract or cinnamon, stir them in at this point. 4. Heat a non-stick pan or griddle over medium heat. Once hot, use a 1/4 cup measuring cup to pour the batter onto the pan. Cook for about 2-3 minutes on each side until golden brown and cooked through. Repeat with the remaining batter. 5. Serve your delicious pancakes warm, drizzled with maple syrup or any other desired toppings. Enjoy!

Conclusion

You’ve reached the end of this blog!

Throughout this blog, you have witnessed how Python libraries like llama-cpp-python and ctransformers can easily integrate the advanced capabilities of C/C++ LLM implementations into the Python environment, which many of us are familiar with. This achievement is not just a technical feat; it opens the door to new realms of creativity and innovation in various applications such as content creation and automated responses, particularly in the AI domain.

The purpose of this blog was to demonstrate that both enthusiasts and professionals can utilize these powerful LLMs. GGUF has reduced the barriers to entry, making it possible for a broader community to explore and innovate in the field of AI and LLMs.

You can now take advantage of the power of LLMs using the GGUF format on your own machine or the free tier of Google Colab.

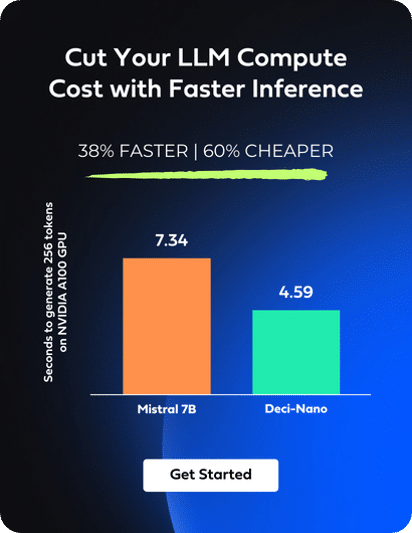

If you’re interested in running high-performance LLMs locally, I encourage you to discover Deci’s LLMs and GenAI Development Platform. The platform supports quantization and is designed to enable developers to deploy and scale high-performing models efficiently, with the added benefit of keeping their data on-premises.

To try out Deci’s GenAI models and development platform firsthand, you’re invited to sign up for a free trial.