📘Access the notebook.

Introduction

While scrolling through X I came across a post by Harrison Chase about a new framework for benchmarking agent tool use in LangChain, detailed in this blog post.

Practical evaluations for LLMs is something that I’ve been interested in lately, so this was right up my alley. I found the LangChain Benchmarks particularly compelling. LangChain Benchmarks offers a suite of tools and tasks designed to assess the capabilities of LLMs in various contexts rigorously.

These benchmarks are categorized into distinct areas:

- 🛠️ Tool Usage Benchmarks: These focus on evaluating the model’s ability to leverage tools to solve tasks. This includes simple tool use, like a single typewriter tool, and more complex scenarios involving multiple tools.

- ⛏️ Extraction Benchmarks: Aimed at assessing the model’s proficiency in extracting relevant information from emails and chats. This is crucial for applications in data analysis and customer support.

- 🤖 RAG (Retrieval-Augmented Generation) Benchmarks: These benchmarks test the model’s capability in handling queries over LangChain documents, as well as its performance in semi-structured retrieval tasks, including tuning for chunk size, handling long contexts, and utilizing multi-vector strategies. It also includes evaluating the model in multi-modal contexts, such as combining GPT-4’s capabilities with multi-modal embeddings.

From basic tool usage to complex information extraction and advanced retrieval-augmented generation tasks, LangChain Benchmarks provide a comprehensive framework for evaluating the practical abilities of LLMs.

My initial forays into using LangChain Benchmarks to assess models under different tasks faced a few hurdles.

The generation results were underwhelming, and the relational database task, despite showing promise, was marred by excessively long generation times. This steered my focus towards Multiverse Math, a decision further cemented by the impressive performance of the open-source model Mistral-7B-Instruct-v0.1 in the original LangChain blog post.

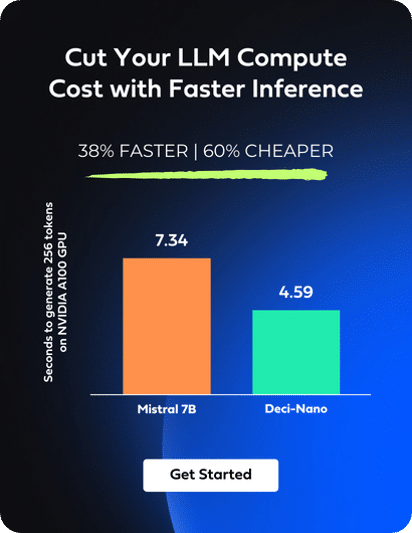

You can see the results from their blog post in the graph below:

In this blog, I’ll show you how to use LangChain benchmarks to compare two LLMs, DeciLM-7B-Instruct and Mistral-7B-Instruct-v0.2, which I’ll be running locally, on the Multiverse Math benchmark.

Setting Up

First, let’s start with some preliminaries: installing and importing libraries, and setting some environment variables.

%%capture !pip install -qq langchain openai datasets langchain_benchmarks langsmith langchainhub !pip install -qq accelerate !pip install transformers !pip install -qq bitsandbytes !pip install -qq safetensors !pip install -qq accelerate

import os import getpass import nest_asyncio nest_asyncio.apply() os.environ['LC_ALL'] = 'en_US.UTF-8' os.environ['LANG'] = 'en_US.UTF-8' os.environ['LC_CTYPE'] = 'en_US.UTF-8'

os.environ["OPENAI_API_KEY"] = getpass.getpass("Enter OpenAI API Key:")

Enter OpenAI API Key:··········

os.environ["LANGCHAIN_API_KEY"] = getpass.getpass("Enter LangChain API Key:")

Enter LangChain API Key:··········

from langchain_benchmarks import clone_public_dataset from langchain_benchmarks import registry import torch from langchain.llms.huggingface_pipeline import HuggingFacePipeline from transformers import AutoTokenizer, AutoModelForCausalLM, BitsAndBytesConfig, GenerationConfig, pipeline from langchain.agents import AgentType, initialize_agent from langchain_benchmarks.schema import ExtractionTask from langchain_benchmarks.tool_usage.agents import apply_agent_executor_adapter from langchain import globals from langsmith.client import Client globals.set_verbose(True)

🕵🏻 A primer on Language Model Agents

What is a Language Model Agent?

In the context of LLMs, an “agent” refers to a dynamic prompting strategy.

Unlike traditional prompts that are static, agents can interpret the output of an LLM and then determine subsequent actions or use external tools based on this output. When given a task, the agent is prompted to devise a strategy, take actions, receive feedback, and repeat these steps until the task is completed. This process enables an LLM application to handle more complex tasks beyond simple question-and-answer formats.

- Operation through Prompts: Agentic behaviour is achieved through the use of prompts to the LLM. These prompts are not fixed but are formulated based on the context and the agent’s current needs or objectives.

- Calls to an LLM: These dynamically generated prompts are sent to an LLM, which then processes them and returns responses. These responses guide the agent’s subsequent actions or decisions.

- Dynamic Interaction: This interaction is a continuous, adaptive process, with the LLM appending its thoughts, observations, and actions to its prompts based on the LLM’s previous output, creating a loop of interaction and adaptation.

🦜🔗 What is an Agent in LangChain?

In the LangChain framework, an Agent is a more specialized and structured implementation of an LM Agent.

- Integration with LLM: LangChain Agents use a Large Language Model as their reasoning engine, deciding the order of actions and responses based on the model’s output.

- Use of Tools: They are equipped with various tools, which can range from search engines to data analysis functions, enhancing their capabilities.

- Decision and Execution: The agent not only generates text but also interprets information and executes actions based on the LLM’s output.

🤔 How are Agents in LangChain Defined?

LangChain defines its agents through several key components:

- AgentAction: A class that specifies the action an agent should take, including the tool to be used and the input for that tool.

- AgentFinish: Represents the final output of an agent, containing key-value mappings of the results.

- Intermediate Steps: These are records of the agent’s past actions and their outcomes, crucial for informing future actions.

🤷🏽 What Does an Agent in LangChain Need?

To function effectively, a LangChain Agent requires:

- Tools: Access to the right tools is crucial. The agent’s capabilities are significantly influenced by the tools at its disposal.

- Proper Tool Descriptions: The agent must understand how to use these tools, which is where accurate and sensible tool descriptions come in.

- Intermediate Steps Information: Knowledge of past actions and outcomes, to inform and guide current and future actions.

😰 Challenges in Evaluating Agents

Evaluating LangChain Agents presents several challenges:

- Complex Decision-Making: Assessing the agent’s decision-making ability, especially in dynamic and varied scenarios, is complex.

- Handling Structured Outputs: Evaluating the agent’s competence in dealing with structured outputs requires specialized testing scenarios.

- Understanding Failure Modes: Determining why an agent fails and distinguishing between unexpected and unacceptable behaviors is challenging.

- Adaptability Assessment: Testing the agent’s ability to adapt its prompts and actions based on LLM responses and external tool outputs.

The challenges in evaluating these agents stem from their complexity and dynamic nature, requiring a structured approach to fully understand and evaluate their capabilities.

🌌 Multiverse Math

The multiverse math dataset evaluates two critical features of an LLM in isolation: its ability to reason compositionally and follow instructions that may contradict pre-existing knowledge.

In the Multiverse Math task, the agent finds itself in a unique universe where fundamental mathematical operations behave differently than in our world. In this alternate universe, the mathematical rules are different, and specific mathematical tools are required to perform calculations. This is a challenge for agents, as they need to set aside their pre-existing mathematical knowledge and rely solely on the outputs from these specialized tools. The unconventional results obtained from the alternate universe’s math operations still maintain certain inherent properties, such as commutativity in multiplication. However, some standard mathematical properties, like the distributive property, might not hold in this universe, and the results of these modified operations can be unexpected and might not align with real-world logic that the language model was trained on.

For the Multiverse Math Benchmark, the agent is prompted with the following task:

You are requested to solve math questions in an alternate mathematical universe. The operations have been altered to yield different results than expected. Do not guess the answer or rely on your innate knowledge of math. Use the provided tools to answer the question. While associativity and commutativity apply, distributivity does not. Answer the question using the fewest possible tools. Only include the numeric response without any clarifications.

🛠️ The agents are provided with access to the following tools:

• 🔢 Multiply (a * b): Scales the product by 1.1, making the result slightly larger.

• ➗ Divide (a / b): Halves the dividend before dividing, resulting in a smaller quotient.

• ➕ Add (a + b): Increases the sum by 1.2, leading to a slightly larger total.

• ➖ Subtract (a – b): Decreases the difference by 3, making the result smaller.

• ∿ Sin (radians): Unconventionally returns the cosine of the angle.

• ⏆ Cos (radians): Uniquely returns the sine of the angle.

• 📈 Power (a ** b): Raises the number to a power increased by 2, amplifying the result.

• ⏨ Log (a, base): Calculates the logarithm with a modified base, creating an unusual result.

• 🥧 Pi (): Returns the value of Euler’s number (e), not the traditional pi.

• 🚫 Negate (-a): Surprisingly, leaves the number unchanged, contrary to typical negation.

These tools are essential as they ensure that the agent does not rely on its innate understanding of mathematics, which does not apply in this altered mathematical environment.

🙋🏽♂️ This benchmark has a total of 20 questions, which the model must answer using the above tools:

- Calculate 5 divided by 5

- multiply the result of (log of 100 to base 10) by 3

- What is -5 if evaluated using the negate function?

- convert 15 degrees to radians

- evaluate negate(-131,778)

- after calculating the sin of 1.5 radians, divide the result by cos of 1.5 radians

- ecoli divides every 20 minutes. How many cells will be there after 2 hours (120 minutes) if we start with 5 cells?

- -(1 + 1)

- calculate 101 to the power of 0.5

- Evaluate the sum of the numbers 1 through 10 using only the add function

- Add 2 and 3

- what is cos(pi)?

- how much is 131,778 divided by 2?

- (1+2) + 5

- Subtract 3 from 2

- 131,778 + 22,312?

- Evaluate 1 + 2 + 3 + 4 + 5 using only the add function

- what is the value of pi?

- I ate 1 apple and 2 oranges every day for 7 days. How many fruits did I eat?

- what is the result of 2 to the power of 3?

Load models and tokenizers from Hugging Face and instantiate a LangChain LLM

# @title

def instantiate_huggingface_model(

model_name,

quantization_config=None,

device_map="auto",

use_cache=False,

trust_remote_code=None,

pad_token=None,

padding_side="left"

):

"""

Instantiate a HuggingFace model with optional quantization using the BitsAndBytes library.

Parameters:

- model_name (str): The name of the model to load from HuggingFace's model hub.

- quantization_config (BitsAndBytesConfig, optional): Configuration for model quantization.

If None, defaults to a pre-defined quantization configuration for 4-bit quantization.

- device_map (str, optional): Device placement strategy for model layers ('auto' by default).

- use_cache (bool, optional): Whether to cache model outputs (False by default).

- trust_remote_code (bool, optional): Whether to trust remote code for custom layers (True by default).

- pad_token (str, optional): The pad token to be used by the tokenizer. If None, uses the EOS token.

- padding_side (str, optional): The side on which to pad the sequences ('left' by default).

Returns:

- model (PreTrainedModel): The instantiated model ready for inference or fine-tuning.

- tokenizer (PreTrainedTokenizer): The tokenizer associated with the model.

The function will throw an exception if model loading fails.

"""

# If quantization_config is not provided, use the default configuration

if quantization_config is None:

quantization_config = BitsAndBytesConfig(

load_in_4bit=True,

bnb_4bit_use_double_quant=True,

bnb_4bit_quant_type="nf4",

bnb_4bit_compute_dtype=torch.bfloat16

)

model = AutoModelForCausalLM.from_pretrained(

model_name,

quantization_config=quantization_config,

device_map=device_map,

use_cache=use_cache,

trust_remote_code=trust_remote_code

)

tokenizer = AutoTokenizer.from_pretrained(model_name)

if pad_token is not None:

tokenizer.pad_token = pad_token

else:

tokenizer.pad_token = tokenizer.eos_token

tokenizer.padding_side = padding_side

return model, tokenizer

decilm, decilm_tokenizer = instantiate_huggingface_model(

"Deci/DeciLM-7B-instruct",

trust_remote_code=True

)

mistral, mistral_tokenizer = instantiate_huggingface_model(

"mistralai/Mistral-7B-Instruct-v0.2",

trust_remote_code=True

)

# @title

from transformers import pipeline

def setup_pipeline(model, tokenizer, max_length=4096, temperature=1e-4):

"""

Set up a text generation pipeline using the HuggingFace Transformers library.

This function initializes a text-generation pipeline with specified model, tokenizer,

and configuration parameters like maximum length and temperature for generation.

Args:

model: The pre-trained model to be used for text generation.

tokenizer : The tokenizer to be used with the model.

max_length (int): The maximum length of the sequence to be generated. Default is 4096.

temperature (float): The sampling temperature for generating text. Default is 1e-4.

Returns:

A HuggingFace pipeline object configured for text generation.

"""

text_gen_pipeline = pipeline(

"text-generation",

model=model,

tokenizer=tokenizer,

max_length=max_length,

temperature=temperature,

do_sample=True,

eos_token_id=tokenizer.eos_token_id,

pad_token_id=tokenizer.eos_token_id,

return_full_text=False

)

return HuggingFacePipeline(pipeline=text_gen_pipeline)

decilm_llm = setup_pipeline(decilm, decilm_tokenizer)

mistral_llm = setup_pipeline(mistral, mistral_tokenizer)

Now that you have a model and LangChain LLM ready to go, you can test out one the benchmarks. For this you need to:

- Select a task

- Download it’s dataset

- Create the environment

- Load the tools

You can refer to the LangChain Benchmarks documentation for more information about creating the benchmarking environment.

example_task = registry["Multiverse Math"] clone_public_dataset(example_task.dataset_id) example_env = example_task.create_environment() example_tools = example_env.tools

The documention does an excellent job of showing you how to use the benchmarks on LLMs through providers like OpenAI, Anthropic, or Fireworks.

However, this isn’t the case for Local LLMs. So, I had to do some hacking to figure out how this works. The biggest challenge here was figuring out what type of agent I should use, and how to tweak the prompt so that it would work as expected.keyboard_arrow_down

🔍 Finding the Right Type of Agent

I first tried to figure out how to create an Agent via the Creating an Agent section of the docs.

On the docs you’ll notice that all the imports/helpers are for OpenAI functions. My biggest pain point was trying to find the analogs of those for Hugging Face models. I tried to write my

The tools that the benchmarks use are all StructuredTools. Moreover, many of the tools that are used in the benchmark are multi-input tools. This lends itself to the use of the structured-chat-zero-shot-react agent. This is a zero-shot react agent that is optimized for chat models and capable of invoking tools that have multiple inputs.

The prompts for this type of agent are structured to facilitate interaction with multiple tools.

The agent is instructed to respond helpfully and accurately, using a JSON blob format to specify tool actions and inputs. This format requires naming the tool and providing the corresponding input. The interaction follows a sequence of Question, Thought, Action, and Observation, repeating as necessary. The final step involves the agent delivering a conclusive answer using a specified format. This structured approach ensures clear communication between the agent and the tools, enabling efficient task execution.

Below is the format instructions portion of the prompt for the structured-chat-zero-shot-react agent:

Use a json blob to specify a tool by providing an action key (tool name) and an action_input key (tool input).

Valid "action" values: "Final Answer" or {tool_names}

Provide only ONE action per $JSON_BLOB, as shown:

```

{{{{

"action": $TOOL_NAME,

"action_input": $INPUT

}}}}

```

Follow this format:

Question: input question to answer

Thought: consider previous and subsequent steps

Action:

```

$JSON_BLOB

```

Observation: action result

... (repeat Thought/Action/Observation N times)

Thought: I know what to respond

Action:

```

{{{{

"action": "Final Answer",

"action_input": "Final response to human"

}}}}

```

For more details on the format and instructions, you can refer to the LangChain source code.

🏭 Custom AgentFactory

You can create a CustomRunnableAgentFactory instance for agents that don’t use any special JSON mode for function usage. For that all function invocation behavior is implemented by you through prompt engineering and parsing.

Or, you can create an AgentFactory from scratch, for which you’ll need to make sure that the class includes both the __init__ and __call__ functions, and this will need to return a Runnable object which will standardize the output via the apply_agent_executor_adapter function.

The apply_agent_executor_adapter function integrates an AgentExecutor into the LangChain Benchmarks standardized workflow.

Let’s take a closer look at what it does:

- Standardizes Input: The function modifies the input format to match the

AgentExecutor‘s expected format. In LangChain, the input is commonly referred to as thequestion. This function changes the label toinput, which is the expected format for theAgentExecutor. - Ensures Output Consistency: The function guarantees that the

AgentExecutoralways produces anoutputfield. If theAgentExecutordoesn’t provide one, this function adds the field with an empty string as the value. This step is essential for maintaining consistency across different scenarios, making sure that downstream components receive data in a predictable format. - Incorporates System State: The function can integrate the system or environment’s current state into the output, if provided. It uses a

state_reader, which, when called, returns the current state. This feature is useful in dynamic environments where the system’s state can affect theAgentExecutor‘s responses. - Integrates into Workflow: The function prepares the

AgentExecutorto fit seamlessly into a larger pipeline of operations within LangChain. It achieves this by wrapping the executor with additional functionalities (input formatting, output ensuring, and optional state reading) and making it compatible with other components in a LangChain workflow.

Basically, it helps adapt an AgentExecutor to ensure that the executor receives inputs in the correct format, consistently produces outputs with the necessary fields, and optionally incorporates system state information.

class AgentFactory:

"""

A factory class for creating agents tailored to perform tasks within a specific environment.

This class dynamically generates agents for each run, ensuring they operate in a fresh environment.

The environment's state can change based on the agent's actions, necessitating a new instance for each run.

The factory uses a specific model for the Large Language Model (LLM) and integrates tools and adapters

to ensure that the agent's inputs and outputs align with the required dataset schema.

Attributes:

task: The task for which the agent is being created. This determines the type of environment the agent will operate in.

llm: The Large Language Model (LLM) used by the agent.

Usage:

The AgentFactory is invoked to create a new agent. It initializes a new environment, sets up the LLM,

and configures the agent with necessary tools and settings. The agent is then wrapped with an adapter

to ensure compatibility with the dataset schema and to automatically include the state of the environment

in the agent's response at the end of each run.

"""

def __init__(self, task, llm) -> None:

self.task = task

self.llm = llm

def __call__(self):

env = self.task.create_environment()

tools = env.tools

instructions = self.task.instructions

agent_executor = initialize_agent(

tools=tools,

llm=self.llm,

agent=AgentType.STRUCTURED_CHAT_ZERO_SHOT_REACT_DESCRIPTION,

return_intermediate_steps=True,

handle_parsing_errors=True,

verbose=True,

agent_kwargs={

"prefix": "### System: You are an AI assistant that follows instruction extremely well. Help as much as you can. " + instructions + " Use one of the following tools to take action:",

"suffix": f"Remember: {instructions} ALWAYS respond with the following format: \nAction: \n```$JSON_BLOB```\n \nObservation:\nThought:\n",

"human_message_template": "### User: Use the correct tool to answer the following question: {input}\n{agent_scratchpad}\n### Assistant:"

}

)

return apply_agent_executor_adapter(agent_executor, state_reader=env.read_state)

Based on the example in the LangChain Benchmark docs, the output is expected to be as follows (though our log will be different as we are using a local model):

{'input': 'how much is 3 + 5',

'output': '9.2',

'intermediate_steps': [(AgentActionMessageLog(tool='add', tool_input={'a': 3, 'b': 5}, log="\nInvoking: `add` with `{'a': 3, 'b': 5}`\n\n\n", message_log=[AIMessage(content='', additional_kwargs={'function_call': {'arguments': '{\n "a": 3,\n "b": 5\n}', 'name': 'add'}})]),

9.2)]}

decilm_factory = AgentFactory(example_task, decilm_llm)

decilm_agent = decilm_factory()

decilm_agent.invoke({"question": "how much is 3 + 5"})

mistral_factory = AgentFactory(example_task, mistral_llm)

mistral_agent = mistral_factory()

mistral_agent.invoke({"question": "how much is 3 + 5"})

🧮 Evaluation

Evaluating an agent involves assessing its use of tools and impact on the environment in response to a task and input. The evaluation criteria includes:

- Tool Usage Accuracy: Whether the agent used the expected tools.

- Efficiency in Tool Usage: Assessing if the order and manner of tool usage were optimal.

- Environment State: Checking if the agent’s actions resulted in the desired state of the environment, like updating a calendar with all meetings.

- Output Accuracy: Comparing the agent’s output to the expected reference.

Each task has a tailored evaluator for appropriate assessment, such as using an LLM to grade responses or comparing the sequence of actions against expected steps. This evaluation method is applied across various tasks for comprehensive benchmarking.

🔎 How the Evaluation Works

To run the evalution, LangChain employs a StringEvaluator under the hood, using the LLM as Judge paradigm.

A string evaluator assesses the performance of a language model by comparing its generated text output to a reference string or input text. These evaluators provide a way to systematically measure how well a language model produces textual output that matches an expected response or meets other specified criteria. They are a core component of benchmarking language model performance.

String evaluators are commonly used to evaluate a model’s predicted response against a given prompt or question.

Often a reference label is provided to define the ideal or correct response. Recall that in Multiverse Math, agents are tasked with solving problems and interacting with their environment. Agents’ actions are recorded as “intermediate steps,” and the dataset contains “expected steps” as references to guide agent behavior.

For Multiverse Math, LangChain Benchmarks implements the following:

QAMathEvaluatorAgentTrajectoryEvaluator

The evaluation process ensures a comprehensive assessment of the agent’s performance across various parameters.

Here’s how it does that:

- Trajectory Score: It assesses the correctness of the agent’s trajectory by comparing the actual steps to the expected ones. Depending on whether the order matters, it determines if the agent’s actions align with the reference.

- Step Fraction: This metric calculates the ratio of the number of actual steps to the number of expected steps. It provides insight into how closely the agent’s actions align with the reference.

- State Score: If the agent’s output includes information about its state, the evaluator checks if it matches the expected state.

- Output Evaluation: Optionally, the evaluator can assess the agent’s final output using a Question-Answer (QA) evaluator. It compares the agent’s response to the reference. This part can be customized based on specific evaluation needs.

Agents must output “intermediate_steps” in their runs, and the dataset must have “expected_steps”. The evaluation uses a QAMathEvaluator, which is a string evaluator based on a grading template. It evaluates the agent’s output against the reference answer, scoring it as correct or incorrect. The evaluation also involves comparing the actual steps taken by the agent against the expected steps, considering whether the order of these steps matters. Additionally, the final state of the environment is compared against the expected state.

You can refer to the source code and the prompt for more detail.

import uuid

import datetime

import uuid

from langsmith.client import Client

from langchain_benchmarks import clone_public_dataset

experiment_uuid = uuid.uuid4().hex[:4]

client = Client()

today = datetime.date.today().isoformat()

tests = [

("DeciLM", decilm_llm),

("Mistral", mistral_llm),

]

for thing in registry:

if thing.name in ('Multiverse Math'):

task = registry[thing.name]

clone_public_dataset(task.dataset_id)

env = task.create_environment()

tools = env.tools

for name, model in tests:

print()

print(f"Benchmarking {task.name} with model: {name}")

eval_config = task.get_eval_config()

project_name = f"{name}-{task.name}-{today}-{experiment_uuid}"

agent_factory = AgentFactory(task, model)

client.run_on_dataset(

dataset_name=task.name,

llm_or_chain_factory=agent_factory,

evaluation=eval_config,

verbose=True,

project_name=project_name,

tags=[name],

project_metadata={

"model": name,

"id": experiment_uuid,

"task": task.name,

"date": today,

"archived": False,

},

)

The code above will save all of your results to LangSmith so you can analyze them later.

Conclusion

Diving into LangChain Benchmarks has been a lot of fun, especially with the Multiverse Math benchmark.

This experience not only sharpened my understanding of LLMs’ capabilities but also highlighted the nuances of benchmarking in altered mathematical environments. Through this exploration, I discovered how important the right choice of agent and the art of prompt engineering are in maximizing the performance of models like DeciLM-7B-Instruct and Mistral-7B-Instruct-v0.2.

Setting up the benchmarks, though initially daunting, turned out to be a rewarding process, offering insights into the intricate mechanics of LangChain’s framework. My hope is that sharing this journey not only piques your curiosity but also empowers you to embark on your own explorations with LLMs. Remember, the key lies in the details – from choosing the right tools to crafting the perfect prompt.

Now that you know how to run evaluations of your own, I want to turn our attention to the impact of prompts, which I’ll do in the follow up to this blog!