Edge AI is transforming the way we interact with AI in our daily lives. This is due in part to recent advances in deep learning, specifically in neural network inference. Deep learning has brought us self-driving cars that can spot pedestrians, radiology tools that identify tumors, and sensors that report changes in industrial infrastructure or agricultural fields. In fact, Gartner predicts that by 2025, 50% of all inference will take place at the edge.

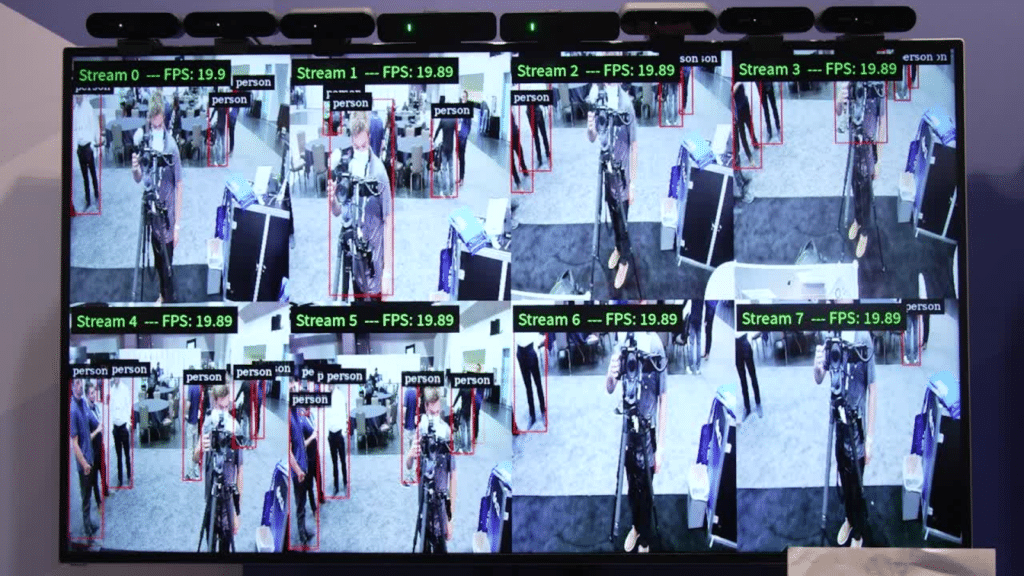

Object detection has been one of the key enablers of the new opportunities that AI on edge devices has unlocked. Object detection is a computer vision task that identifies and locates objects in an image or video. By knowing exactly what is in an image and precisely where it is, we can track objects, count them, and detect when anomalies appear.

This has made object detection one of the most sought-after AI tasks, especially on edge devices. It’s already playing a starring role in robotics, automotive, video analytics, drone inspection, and other applications.

The unique benefits we’re seeing with edge AI range from previously unavailable real-time insights to reduced costs and increased privacy. Because edge devices analyze data locally rather than in the cloud, they enable much faster analytics and insights that can be acted on immediately.

Moving processing closer to the edge means applications need little or no internet bandwidth. They can also run on newer, lower-cost hardware instead of relying on expensive GPUs or the cloud.

Complying with new regulations on privacy and the protection of sensitive data is also easier, because data is collected and processed locally. In short, there are many advantages, but, as with anything, object detection at the edge has its challenging side.

Challenges of Object Detection at the Edge

Today’s more sophisticated object detection models rely on deep learning inference, which poses several challenges for edge devices. First, the AI computing capabilities of edge devices are limited and often cannot run these large models at the required speed. Second, because the objects to be detected vary so widely in size, type, and number, different applications need model architectures that are complex yet flexible.

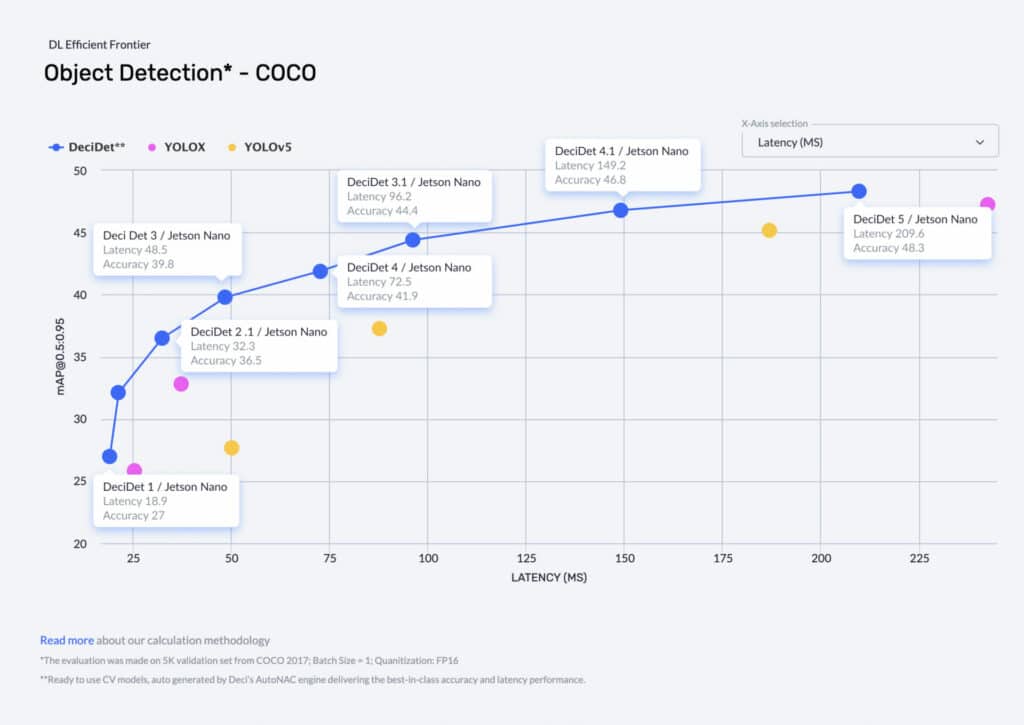

There is also the tradeoff between speed and accuracy to consider, which means different models will be more or less appropriate depending on the application. Deeper networks with more layers tend to be more accurate, but at the price of slower inference. For example, the YOLOv3 architecture can offer better accuracy on high-resolution images but requires more processing time.
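Because the "right" model depends on the application's latency budget, one common way to make the speed/accuracy tradeoff explicit (an illustration, not a method described in this article) is to keep only the Pareto-optimal models: those for which no other candidate is both faster and more accurate. The sketch below assumes each candidate's latency and accuracy have already been measured; the model names and numbers are made-up placeholders, not benchmark results.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    latency_ms: float  # measured on the target device; lower is better
    accuracy: float    # e.g. mAP on a validation set; higher is better


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if a is at least as good as b on both axes and strictly better on one."""
    no_worse = a.latency_ms <= b.latency_ms and a.accuracy >= b.accuracy
    strictly_better = a.latency_ms < b.latency_ms or a.accuracy > b.accuracy
    return no_worse and strictly_better


def pareto_front(candidates: list[Candidate]) -> list[Candidate]:
    """Keep only candidates that no other candidate dominates, sorted by latency."""
    front = [c for c in candidates
             if not any(dominates(other, c) for other in candidates)]
    return sorted(front, key=lambda c: c.latency_ms)


# Placeholder numbers for illustration only, not real benchmark results.
models = [
    Candidate("small", 12.0, 0.28),
    Candidate("medium", 25.0, 0.35),
    Candidate("large", 60.0, 0.41),
    Candidate("bloated", 70.0, 0.33),  # slower AND less accurate than "large"
]

for c in pareto_front(models):
    print(f"{c.name}: {c.latency_ms} ms, accuracy {c.accuracy}")
```

Every model left on the front is a legitimate choice for some latency budget; the application then picks the most accurate one that still fits its budget.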

Running object detection on edge devices is also challenging in terms of memory and storage, which constrains the type of model that can be used. Beyond being highly efficient and having a small memory footprint, the architecture chosen for edge devices has to be thrifty with power. Power consumption, along with heat dissipation on some devices, can further limit the model.
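A back-of-the-envelope estimate shows why memory and storage constrain the choice of model. Weight storage scales with parameter count times bytes per parameter, so lowering numeric precision (for example, quantizing FP32 weights to INT8) shrinks it proportionally. The 25-million-parameter model below is hypothetical, and the estimate covers weights only.

```python
# Bytes needed to store one weight at each numeric precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_footprint_mb(num_params: int, precision: str) -> float:
    """Approximate storage for the weights alone, in megabytes (1 MB = 10**6 bytes).

    Ignores activations, runtime buffers, and framework overhead, which add to
    the real memory use on the device.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e6


# A hypothetical detector with 25 million parameters.
params = 25_000_000
for precision in ("fp32", "fp16", "int8"):
    print(f"{precision}: {weight_footprint_mb(params, precision):.0f} MB")
```

For this example the weights drop from 100 MB at FP32 to 25 MB at INT8, which can decide whether a model fits on a small edge device at all.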

Here’s the thing: a network constrained to limited memory and compute may simply be impractical for applications that require real-time processing. Security systems, healthcare applications, and autonomous driving algorithms all demand both speed and accuracy.

Choosing a network with high latency or low accuracy is simply not an option. With so many variations of object detection networks, how do you find the one that is best for your application?

NAS to the Rescue

Fast and accurate deep neural networks are essential for successfully deploying commercial AI applications at the edge. But these networks need a lot of compute power, which is inherently limited at the edge. How do you find the right balance?

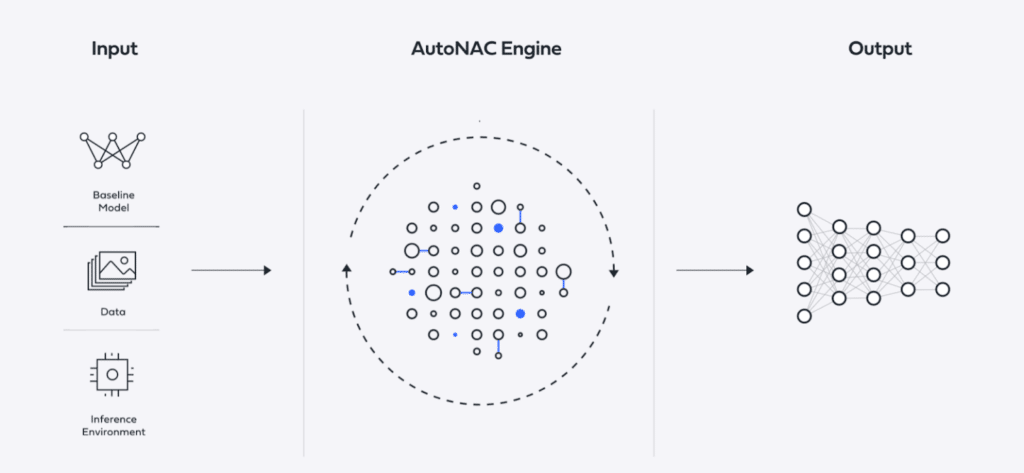

Neural architecture search (NAS) is a potentially viable approach to creating such models. The NAS process designs and searches for the best structure of the neural network for your dataset and hardware.

NAS starts with a candidate neural architecture and expands from there, creating a search space that can include tens, hundreds, or thousands of models. The algorithm then benchmarks those models and, in subsequent steps, assesses the candidates’ accuracy. It uses a machine-learning-based algorithm to analyze a given set of architectures and select those most likely to achieve the best accuracy while preserving the desired latency.
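The loop described above can be sketched as a toy search. This illustrates generic latency-constrained NAS, not Deci's AutoNAC: it grows a small search space around a starting candidate, filters candidates by a latency budget, and picks the one a predictor expects to be most accurate. The `Arch` fields, the cost proxy `estimate_latency_ms`, and the predictor `predict_accuracy` are all hypothetical stand-ins; a real system would benchmark candidates on the target hardware and use a learned accuracy predictor.

```python
import itertools
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Arch:
    depth: int  # number of blocks
    width: int  # channels per block


def expand_search_space(base: Arch) -> list[Arch]:
    """Grow a search space around a starting candidate by scaling depth and width."""
    depths = [max(1, base.depth + d) for d in (-2, 0, 2, 4)]
    widths = [max(8, int(base.width * s)) for s in (0.5, 1.0, 1.5, 2.0)]
    return [Arch(d, w) for d, w in itertools.product(depths, widths)]


def estimate_latency_ms(arch: Arch) -> float:
    """HYPOTHETICAL cost proxy: grows linearly with depth and with width squared.
    A real search would benchmark each candidate on the target hardware instead."""
    return 0.5 * arch.depth * (arch.width / 32) ** 2


def predict_accuracy(arch: Arch) -> float:
    """HYPOTHETICAL accuracy predictor with diminishing returns. In practice this
    would be a model trained on (architecture, measured accuracy) pairs."""
    capacity = arch.depth * arch.width
    return capacity / (capacity + 500)


def search(base: Arch, latency_budget_ms: float) -> Optional[Arch]:
    """Return the candidate with the highest predicted accuracy within the budget."""
    feasible = [a for a in expand_search_space(base)
                if estimate_latency_ms(a) <= latency_budget_ms]
    return max(feasible, key=predict_accuracy, default=None)


best = search(Arch(depth=8, width=32), latency_budget_ms=8.0)
print(best, estimate_latency_ms(best), round(predict_accuracy(best), 3))
```

Even in this toy version, the search space grows multiplicatively with each dimension searched, which is why benchmarking every candidate quickly becomes impractical.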

As you can imagine, NAS comes with its own challenges and is far from simple. The search space can be enormous, and benchmarking at that scale tends to be impractical, if not impossible. At Deci, we looked into how to make this optimization scale.

Our NAS method, Automated Neural Architecture Construction (AutoNAC), modifies this process and benchmarks models on the target hardware. It then selects the best model while minimizing the tradeoff between accuracy and latency. AutoNAC can discover fast and accurate neural architectures for any task on any specific AI chip, including NVIDIA Jetson edge devices, while taking both compilation and quantization into account.
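Measuring latency on the device itself captures effects that parameter or FLOP counts miss, such as memory bandwidth and compiler optimizations. A minimal timing harness might look like the sketch below. It assumes the model is exposed as a zero-argument Python callable (a hypothetical interface), and `fake_model` is only a placeholder workload.

```python
import statistics
import time
from typing import Callable


def benchmark_latency_ms(run_inference: Callable[[], object],
                         warmup: int = 10, iters: int = 100) -> dict[str, float]:
    """Time a zero-argument inference callable on the device running this script.

    Warm-up runs are discarded so one-time costs (lazy initialization, caches,
    clock ramp-up) do not skew the result. If the callable launches asynchronous
    GPU work, it must block until results are ready, or timings will be too low.
    Returns the median and an approximate 95th-percentile latency in milliseconds.
    """
    for _ in range(warmup):
        run_inference()

    samples = []
    for _ in range(iters):
        start = time.perf_counter()
        run_inference()
        samples.append((time.perf_counter() - start) * 1000.0)

    samples.sort()
    p95_index = min(len(samples) - 1, int(0.95 * len(samples)))
    return {"median_ms": statistics.median(samples), "p95_ms": samples[p95_index]}


# Placeholder workload; replace with a call into the compiled/quantized model.
def fake_model() -> int:
    return sum(i * i for i in range(10_000))


print(benchmark_latency_ms(fake_model))
```

Reporting a tail percentile alongside the median matters for real-time applications, where an occasional slow frame can be as damaging as a slow average.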

DeciDets for Edge is an example of how a more efficient model can solve the deep learning inference problem on edge devices. As the graph below shows, DeciDets, optimized for the NVIDIA Jetson Nano edge device, offers the best accuracy-latency tradeoff available and outperforms known SOTA object detection models.

To the Edge and Beyond

By generating highly efficient models tailored to edge applications, Deci is empowering companies to deliver superior products to market and tackle the real-time challenges we’re seeing at the edge.

- One video conferencing company’s virtual background feature was giving users a frustrating experience. After optimizing the model with Deci’s AutoNAC, they achieved a 2.4x increase in throughput while preserving accuracy, ensuring a seamless and comfortable user experience.

- Another customer’s smart waste management solution wasn’t making the grade when it came to real-time performance. With Deci’s help, they were able to accelerate their solution’s performance by close to 13%, unlocking a new partnership and multiple business opportunities.

- A third company’s video conferencing solution was falling short on face detection. Optimizing the model with Deci’s AutoNAC delivered a 1.65x increase in throughput on the target hardware while preserving accuracy.

Other clients are reducing costs by moving from the cloud to edge processing, benefiting from less-expensive hardware and the added advantages of data privacy. Deci’s ability to create SOTA models tailored to specific applications and hardware saves time and money, eliminates the need for endless fine-tuning, and is opening up fresh opportunities at the edge.

Find out how Deci can optimize your models. Book a demo to see this technology in action.