Introduction

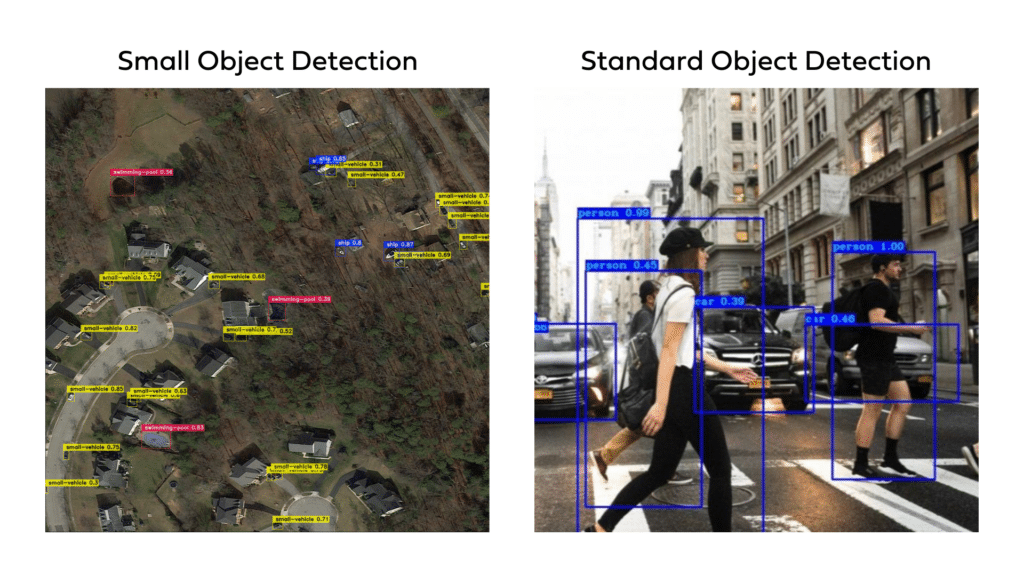

Object detection underpins a wide array of applications, from autonomous vehicles to security systems, thanks to the accuracy and effectiveness of today’s leading object detection models. Yet it faces notable obstacles in satellite and aerial imagery analysis, where detecting small objects (those occupying a minor fraction of the image area, sometimes just a few pixels) is essential and requires advanced techniques. After highlighting key use cases for small object detection, this blog addresses the specific challenges of the task and details effective solutions.

Key Use Cases Necessitating Small Object Detection

Small object detection is pivotal across a range of sectors, and understanding these use cases shows why detection methods must be advanced to handle small objects. Here are four common use cases across different sectors:

- Agriculture: Crop Health Monitoring – Detecting early signs of disease, pest infestation, or nutrient deficiency in individual plants or specific areas of a field. This use case is critical for implementing precise intervention strategies, thereby optimizing yield and reducing resource waste.

- Environmental Conservation: Wildlife Monitoring – Identifying and tracking small animals or specific species within large natural habitats using aerial or satellite imagery. This is essential for studying biodiversity, understanding ecological dynamics, and enforcing wildlife protection laws.

- Disaster Response and Management: Damage Assessment – Quickly identifying small-scale damages to infrastructure, such as roads, bridges, and buildings, following natural disasters. Accurate detection enables prioritized and efficient allocation of emergency response resources and reconstruction efforts.

- Urban Planning and Smart Cities: Infrastructure Mapping and Monitoring – Precisely detecting and cataloging small urban features, such as traffic signs, utility poles, and streetlights, from aerial imagery. This information supports urban development projects, traffic management systems, and infrastructure maintenance programs.

The Challenges of Small Object Detection

If you’re developing an application for one of these use cases, be prepared for challenges that extend beyond the usual scope of object detection. Detecting small objects, which are common in satellite and aerial imagery, raises distinct problems that call for specialized solutions.

Challenge 1: Limited Detail for Recognition

The main challenge in detecting small objects lies in the limited detail available for identifying and classifying objects. Small objects may offer only a minimal number of pixels, reducing the information available. This scarcity of detail complicates the identification of distinctive features necessary for classification by traditional object detection algorithms.

Pixel Representation and Feature Extraction

Objects are represented by pixels in digital images, each pixel encoding color and intensity information. While larger objects provide a rich array of details for feature extraction, small objects, with their limited pixel representation, often lack sufficient detail, leading to scenarios where critical features are barely discernible.

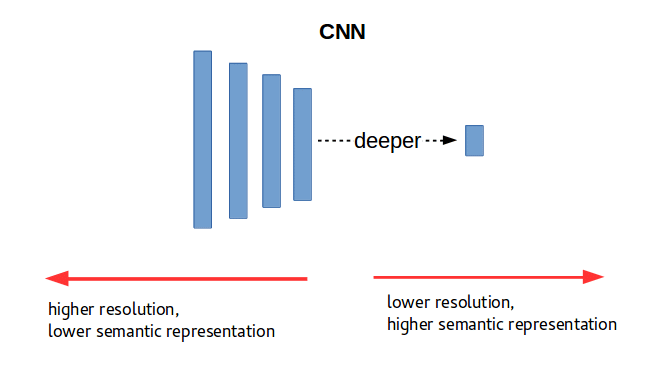

Modern CNNs Optimized for Large Bounding Box Sizes

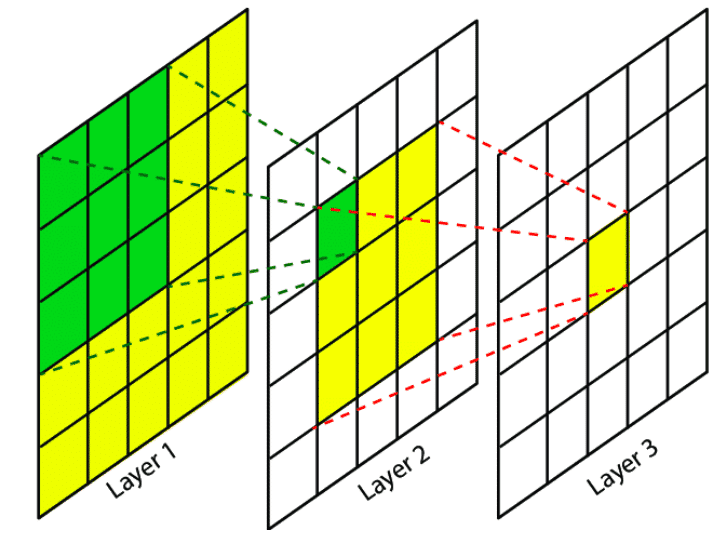

Modern CNNs are optimized for larger bounding box sizes. This leads to inherent challenges in accurately recognizing smaller objects, as their limited pixel representation can diminish in significance through the network’s layers. In the architecture of CNNs, as the depth increases, there is a deliberate reduction in spatial resolution, a strategy intended to distill and abstract higher-level features from the input data.

Image Source: Understanding Object Detection Methods

However, this process can inadvertently cause the finer details of small objects to become less discernible, effectively causing them to ‘vanish’ from the network’s detection capabilities.
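To make the “vanishing” effect concrete, the short Python sketch below estimates how much of a single feature-map cell a square 10-pixel object fills at the strides commonly used by detection backbones (8, 16, and 32) for a 640×640 input. It assumes the object is aligned with the cell grid and ignores receptive-field overlap, so the numbers illustrate the trend rather than describe any particular model; the function names are hypothetical.

```python
def cell_fill_fraction(object_px: float, stride: int) -> float:
    """Fraction of one stride x stride cell covered by a square object (capped at 1)."""
    return min(1.0, (object_px / stride) ** 2)


def grid_size(image_px: int, stride: int) -> int:
    """Side length of the feature map produced at a given stride."""
    return image_px // stride


for stride in (8, 16, 32):
    fill = cell_fill_fraction(10, stride)
    side = grid_size(640, stride)
    print(f"stride {stride:>2}: {side}x{side} grid, a 10px object fills {fill:.0%} of one cell")
```

At stride 32, the 10-pixel object covers only about a tenth of a cell, so whatever features describe that cell are dominated by the surrounding background.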

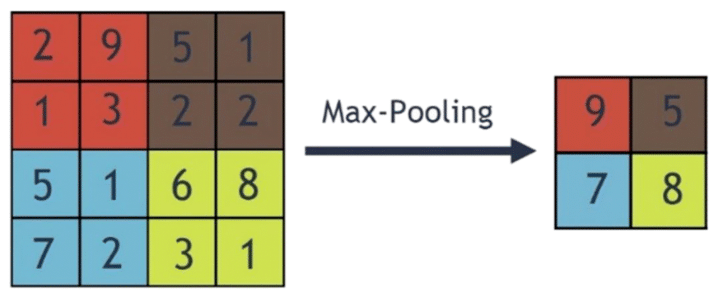

Image Source: ResearchGate

Furthermore, the standard inclusion of pooling layers, designed to reduce the dimensionality of the data by consolidating information into a more manageable format, can also contribute to this problem. Pooling may squeeze the few pixels that represent a small object into a single output value, or blend them with the surrounding background, risking the loss of features necessary for accurate detection and recognition.

Image Source: ResearchGate
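A small NumPy experiment shows how repeated pooling treats a one-pixel object. The sketch below assumes non-overlapping 2×2 pooling with stride 2 (a common configuration, although many modern backbones downsample with strided convolutions instead) and compares average and max pooling over three stages, for an overall stride of 8.

```python
import numpy as np


def avg_pool2x2(x: np.ndarray) -> np.ndarray:
    """Non-overlapping 2x2 average pooling of a 2D map with even sides."""
    h, w = x.shape
    return x.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))


def max_pool2x2(x: np.ndarray) -> np.ndarray:
    """Non-overlapping 2x2 max pooling of a 2D map with even sides."""
    h, w = x.shape
    return x.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))


# A 16x16 "image" containing a single bright 1-pixel object.
img = np.zeros((16, 16))
img[5, 9] = 1.0

a, m = img, img
for _ in range(3):  # three pooling stages -> overall stride 8
    a, m = avg_pool2x2(a), max_pool2x2(m)

print(a.shape, a.max())  # average pooling: response diluted
print(m.shape, m.max())  # max pooling: peak survives, location coarsened
```

Average pooling dilutes the object’s response to 1/64 of its original value, while max pooling keeps the peak but can only place it in one of four cells of the 2×2 output.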

Challenges in Cluttered Scenes

In complex scenes, differentiating small objects from background noise becomes exceedingly difficult. The model must have a high spatial resolution capability to accurately identify and classify these objects amidst similar-sized elements and textures.

Challenge 2: IoU Score Sensitivity and mAP@0.5:0.95 Sub-Optimality

A second core challenge in small object detection is the sensitivity of the Intersection over Union (IoU) metric to minor detection errors. This sensitivity makes the widely used evaluation metric mAP@0.5:0.95 sub-optimal for small object detection. Let’s delve deeper into this challenge.

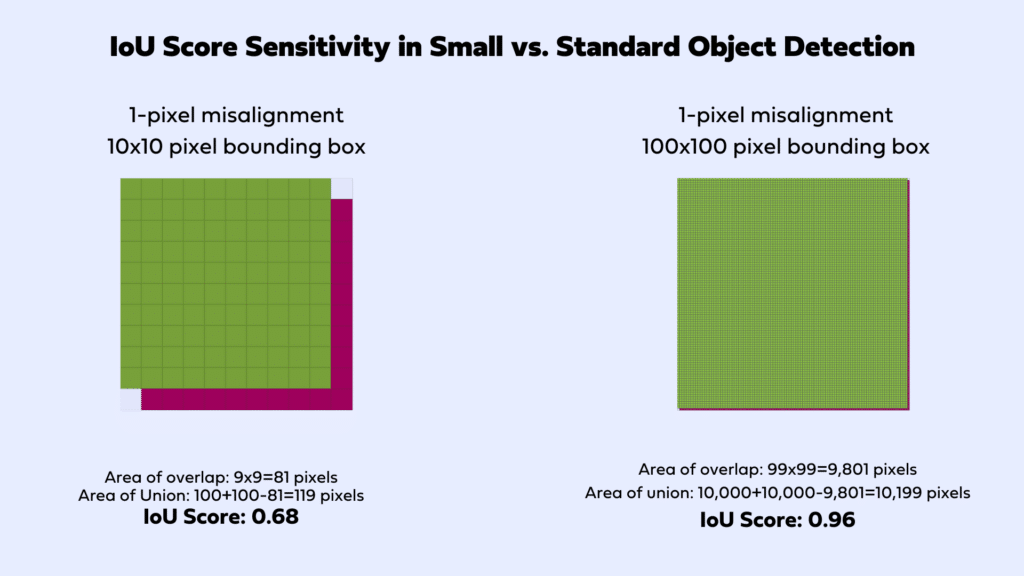

IoU Score Sensitivity

The IoU metric is fundamental for evaluating the precision of object detection models, quantifying the extent of overlap between predicted and actual (ground truth) bounding boxes. By calculating the ratio of the area of overlap to the area of union between these bounding boxes, IoU provides a scale from 0 (no overlap) to 1 (perfect overlap), offering a direct measure of localization accuracy.

However, the IoU score is highly sensitive to minor changes in bounding box position and size, particularly for small objects. This sensitivity stems from the small area these objects occupy: even a slight misalignment between the predicted and ground truth boxes removes a large fraction of their overlap and therefore significantly lowers the IoU.

For instance, consider a small object with a 10×10 pixel bounding box. If the predicted box is shifted by just 1 pixel both horizontally and vertically, the IoU score drops substantially:

Area of overlap: 9×9=81 pixels

Area of union: 100+100-81=119 pixels

IoU: 81/119=0.68

In contrast, for a larger object with a 100×100 pixel bounding box, the same 1-pixel shift in both directions has a much smaller impact on the IoU score:

Area of overlap: 99×99=9,801 pixels

Area of union: 10,000+10,000-9,801=10,199 pixels

IoU: 9,801/10,199=0.96
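The arithmetic above can be reproduced with a few lines of Python. This sketch assumes axis-aligned boxes in (x1, y1, x2, y2) pixel coordinates; `shifted_iou` is a hypothetical helper that compares a square box with a copy of itself moved by (dx, dy), and it also reports a purely horizontal 1-pixel shift for comparison.

```python
def iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix = max(0, min(a[2], b[2]) - max(a[0], b[0]))  # overlap width
    iy = max(0, min(a[3], b[3]) - max(a[1], b[1]))  # overlap height
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union


def shifted_iou(size, dx, dy):
    """IoU between a size x size box and the same box shifted by (dx, dy)."""
    gt = (0, 0, size, size)
    pred = (dx, dy, size + dx, size + dy)
    return iou(gt, pred)


for size in (10, 100):
    # Columns: box size, IoU for a diagonal shift, IoU for a horizontal shift.
    print(size, round(shifted_iou(size, 1, 1), 2), round(shifted_iou(size, 1, 0), 2))
# 10 0.68 0.82
# 100 0.96 0.98
```

Even a purely horizontal 1-pixel shift costs the 10×10 box about 18% of its IoU, compared with about 2% for the 100×100 box.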

This discrepancy illustrates the challenge of evaluating and optimizing object detection models for small objects. The IoU metric’s sensitivity can complicate model training, making it harder to improve detection accuracy for small objects. Additionally, models may be unjustly penalized for minor inaccuracies in detecting small objects, complicating the assessment of a model’s true performance in applications where such detection is crucial.

Sub-Optimality of COCO mAP Metric

The sensitivity of the IoU score makes the popular COCO mAP metric, mAP@0.5:0.95, insufficient for evaluating models on the task of small object detection.

The mAP score is calculated by first computing the Average Precision (AP) for each object class at different IoU thresholds. AP combines precision (the proportion of true positive detections among all positive detections) and recall (the proportion of true positive detections among all actual instances of the class) across various confidence thresholds. The mAP is then derived as the mean of these AP scores across all classes (and, for COCO, across all IoU thresholds), providing a single metric that reflects overall detection performance.
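The AP and mAP computation described above can be sketched in plain Python. This is a simplified version under stated assumptions: a single image, greedy matching of score-sorted predictions, all-point interpolation of the precision-recall curve, and the ten COCO IoU thresholds. The official COCO evaluator (pycocotools) additionally uses 101-point interpolation, multiple images, and area-range and crowd handling, so its numbers can differ slightly. All names here are illustrative.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Pred = Tuple[float, Box]                 # (confidence score, box)


def iou(a: Box, b: Box) -> float:
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(preds: List[Pred], gts: List[Box], thr: float) -> float:
    """AP for one class at one IoU threshold, using all-point interpolation."""
    if not gts:
        return 0.0
    matched = [False] * len(gts)
    tp_flags = []
    # Visit predictions from most to least confident; match each to the best
    # still-unmatched ground-truth box with IoU >= thr.
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_j, best_iou = -1, thr
        for j, gt in enumerate(gts):
            overlap = iou(box, gt)
            if not matched[j] and overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched[best_j] = True
        tp_flags.append(best_j >= 0)
    # Precision and recall after each prediction, i.e. at each confidence cutoff.
    precisions, recalls, tp = [], [], 0
    for k, is_tp in enumerate(tp_flags, start=1):
        tp += is_tp
        precisions.append(tp / k)
        recalls.append(tp / len(gts))
    # Precision envelope: best precision achievable at this recall or higher.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Area under the stepwise precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


COCO_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]  # 0.50, 0.55, ..., 0.95


def coco_style_map(per_class: Dict[str, Tuple[List[Pred], List[Box]]]) -> float:
    """Mean of AP over all classes and all ten COCO IoU thresholds."""
    aps = [average_precision(preds, gts, t)
           for preds, gts in per_class.values() for t in COCO_THRESHOLDS]
    return sum(aps) / len(aps)


# One 10x10 object predicted with the 1-pixel diagonal shift (IoU ~ 0.68).
data = {"car": ([(0.9, (1, 1, 11, 11))], [(0, 0, 10, 10)])}
print(coco_style_map(data))  # 0.4
```

The single prediction counts as correct only at the four thresholds up to 0.65, so a box that most people would call a good detection scores 0.4 on the averaged metric.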

The IoU thresholds used in the COCO mAP metric range from lenient (0.5) to stringent (0.95) in steps of 0.05, which is meant to show how well a model performs across a spectrum of localization accuracies. While comprehensive, this approach may not be well suited to small object detection. Because the final mAP score averages performance across all ten IoU thresholds, the sensitivity of IoU to minor discrepancies for small objects can skew the evaluation, potentially underestimating the model’s ability to detect these objects.

Additionally, at higher IoU thresholds, annotation quality may become a problem. Human annotators may easily introduce 2-3 pixel annotation errors, which can significantly limit the potential top accuracy in small object detection.

How to Tell Whether the COCO mAP Metric Is Sub-Optimal for Your Dataset

To assess how sensitive your dataset and evaluation metric are to minor localization errors, shift the ground truth annotations by 1 pixel to the left (or in any other direction) and evaluate the shifted boxes as if they were predictions against the original, unshifted ground truth. An unshifted copy would score a perfect 1.0, so a drop of more than 1-2 mAP points (0.01-0.02) below 1.0 indicates that the evaluation metric may not suit your context and should be reconsidered.
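A minimal sketch of this check in Python follows. Rather than running a full COCO evaluation, it uses a simplified proxy (an assumption, not the official metric): each shifted box is treated as a confidence-1.0 prediction of its own original box, and the score is the fraction of objects whose IoU clears each COCO threshold (0.50 to 0.95), averaged over thresholds. The proxy ignores interactions between neighboring objects, so for a definitive number, run the shifted boxes through your usual evaluator. The function names and example boxes are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def shift_box(b: Box, dx: float, dy: float) -> Box:
    return (b[0] + dx, b[1] + dy, b[2] + dx, b[3] + dy)


def shift_sensitivity(gt_boxes: List[Box], dx: float = -1, dy: float = 0) -> float:
    """Proxy score for the 1-pixel shift test (1.0 means fully insensitive)."""
    thresholds = [round(0.5 + 0.05 * i, 2) for i in range(10)]
    ious = [iou(b, shift_box(b, dx, dy)) for b in gt_boxes]
    # Fraction of objects still matched at each threshold, averaged over thresholds.
    per_threshold = [sum(v >= t for v in ious) / len(ious) for t in thresholds]
    return sum(per_threshold) / len(per_threshold)


small = [(20, 20, 30, 30)]    # a 10x10 px object
large = [(20, 20, 120, 120)]  # a 100x100 px object
print(shift_sensitivity(small), shift_sensitivity(large))
```

Under this proxy, the 10×10 object loses 30 points (its IoU of about 0.82 fails the 0.85, 0.90, and 0.95 thresholds), while the 100×100 object loses none.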

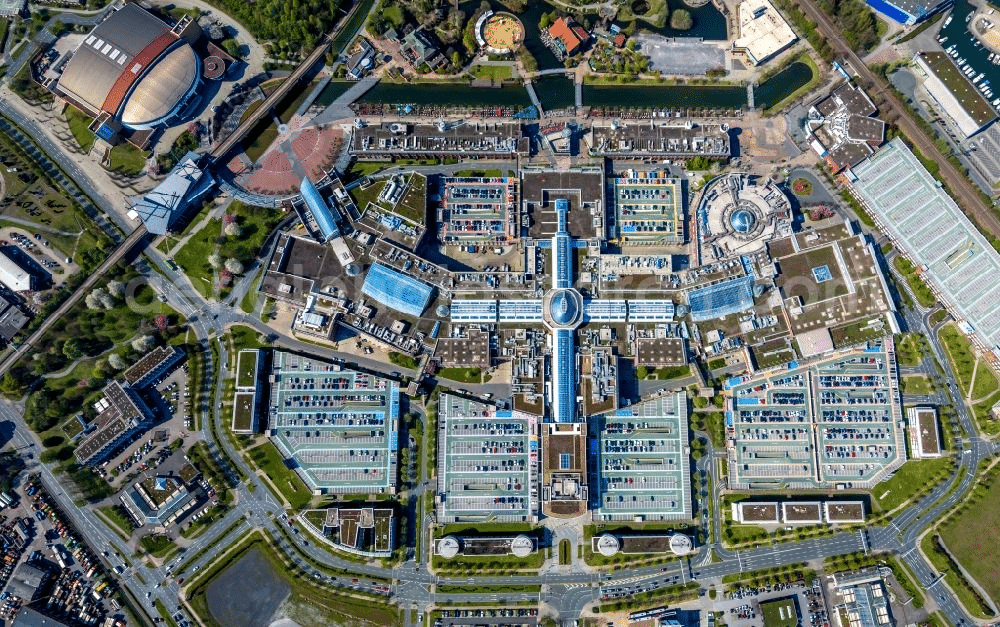

Enhancing Small Object Detection Accuracy Without Adding Latency

Intuitively, leveraging higher-resolution images seems like a straightforward solution to improve small object detection accuracy by providing more detail for recognition and diminishing the impact of minor misalignments on IoU scores, thus rendering mAP scores more reliable.

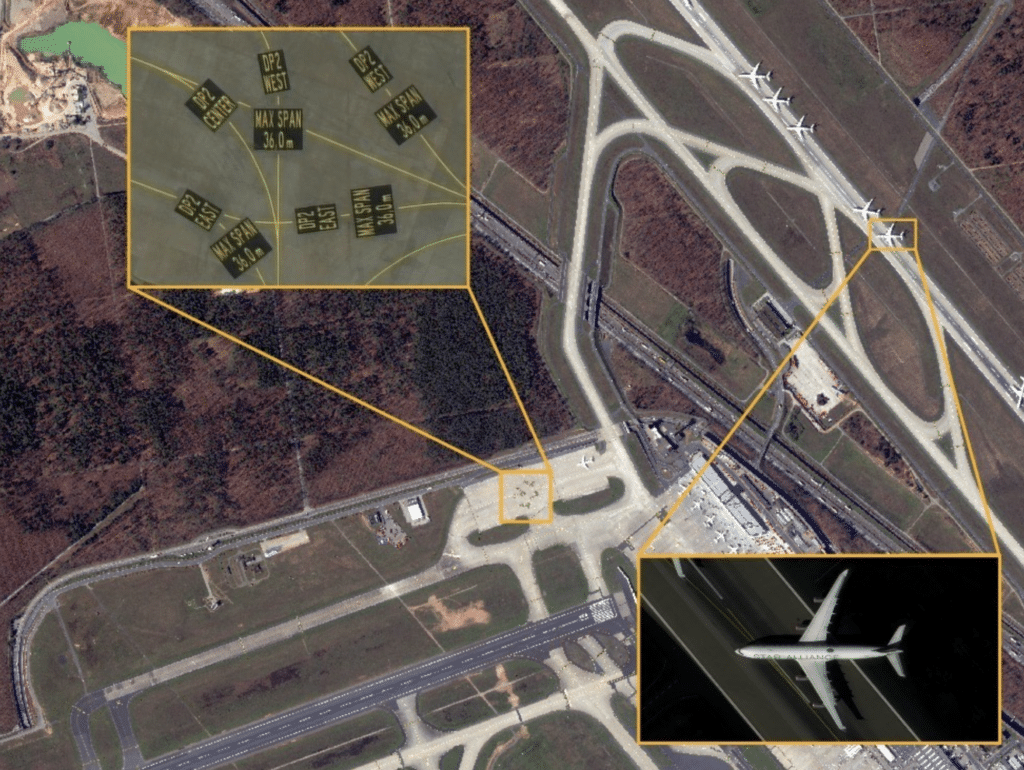

Image Source: Maxar Technologies Blog

Why Using Higher-Resolution Images Is Often Not a Viable Solution

In many scenarios, relying on higher resolution images to enhance object detection is not a practical approach due to several constraints:

1. Limited Availability of High-Resolution Imagery

Access to high-resolution images is often restricted by the limitations of imaging technology and available resources. For instance, satellite and drone cameras may not capture the detail needed for detecting tiny objects due to their hardware specifications. Upgrading these systems for higher resolution involves significant costs, complex technology upgrades, and extensive time and regulatory hurdles in the case of satellites.

2. Increased Computational and Memory Demand of High-Resolution Images

Utilizing high-resolution images for small object detection significantly increases both computational and memory demands. For a convolutional network, compute and activation memory grow roughly in proportion to the number of input pixels, so doubling an image’s width and height roughly quadruples both, even before any change to the model; making use of the extra detail may also call for larger models with more parameters. This is especially challenging on resource-constrained edge devices. Such devices, designed for efficiency and compactness, may struggle to meet the heightened requirements, creating bottlenecks in processing speed during real-time applications.
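The scaling can be estimated with back-of-the-envelope arithmetic. The sketch below counts multiply-accumulate operations (MACs) and FP16 activation memory for a single stride-1 3×3 convolution with 64 input and output channels; the layer shape is an arbitrary assumption, but the 4× ratio from doubling width and height holds for any convolution whose output resolution follows its input.

```python
def conv_macs(h: int, w: int, c_in: int, c_out: int, k: int) -> int:
    """MACs of one k x k, stride-1 convolution with 'same' padding."""
    return h * w * c_in * c_out * k * k


def activation_mib(h: int, w: int, channels: int, bytes_per_value: int = 2) -> float:
    """Size of one output feature map in MiB (2 bytes per value = FP16)."""
    return h * w * channels * bytes_per_value / 2**20


for side in (640, 1280):
    print(f"{side}x{side}: {conv_macs(side, side, 64, 64, 3) / 1e9:.1f} GMACs, "
          f"{activation_mib(side, side, 64):.0f} MiB of activations")
```

For this one layer, moving from 640×640 to 1280×1280 raises the cost from about 15 to about 60 GMACs and the activation memory from 50 to 200 MiB; a full network repeats this growth at every layer.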

3. Increased Latency in Real-Time Applications

High-resolution images extend the processing time required for small object detection, creating latency issues for real-time applications. Quick decision-making is crucial in monitoring use cases, where delays can affect performance and safety. Higher resolution means more data to analyze, slowing down the system’s response time. In environments where rapid responses are essential, the increased latency from processing these larger images can significantly impede operational efficiency.

4. Diminishing Returns

Beyond a certain point, increasing image resolution might not yield proportional improvements in detection performance for small objects. The fundamental challenges of feature extraction and scale mismatch may still persist, requiring architectural or algorithmic solutions rather than just more pixels.

The upshot is that we need to find different solutions to the challenges of small object detection.

Adopting Alternative Evaluation Metrics

The IoU metric’s sensitivity to minor prediction errors can disproportionately affect evaluations, especially for small objects where minor discrepancies can significantly impact IoU scores. To mitigate these issues, we can consider alternative or supplementary evaluation metrics that offer a more nuanced understanding of a model’s small object detection capabilities:

Adopting a Single IoU Threshold:

Using a single, lenient IoU threshold (e.g., 0.5) instead of averaging over stricter ones reduces the impact of minor localization errors, prioritizing the model’s ability to identify objects over precision in bounding box alignment.

Implementation Considerations:

- Threshold Selection: The choice of the IoU threshold should balance the need for reasonable localization accuracy with the acknowledgment of inherent difficulties in precisely detecting small objects. A threshold too low might overlook significant localization errors, while too high a threshold could unfairly penalize detections of small objects.

- Evaluation Protocol Adjustment: Integrating this single IoU threshold into the evaluation protocol requires adjustments in how true positives are counted and how precision and recall are computed, ensuring that the model’s performance is accurately reflected under this simplified criterion.
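As a sketch of such an adjusted protocol, the Python function below counts true positives at one IoU threshold using greedy, score-ordered, one-to-one matching (the scheme common evaluators use) and returns precision and recall at one confidence cutoff. The function name, its default thresholds, and the example boxes are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Pred = Tuple[float, Box]                 # (confidence score, box)


def iou(a: Box, b: Box) -> float:
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall_at(preds: List[Pred], gts: List[Box],
                        iou_thr: float = 0.5, score_thr: float = 0.0) -> Tuple[float, float]:
    """Precision and recall at a single IoU threshold and confidence cutoff."""
    kept = sorted((p for p in preds if p[0] >= score_thr), key=lambda p: p[0], reverse=True)
    matched, tp = set(), 0
    for _, box in kept:
        # Greedily match to the best unmatched ground truth with IoU >= iou_thr.
        best_j, best_iou = None, iou_thr
        for j, gt in enumerate(gts):
            overlap = iou(box, gt)
            if j not in matched and overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j is not None:
            matched.add(best_j)
            tp += 1
    precision = tp / len(kept) if kept else 0.0
    recall = tp / len(gts) if gts else 0.0
    return precision, recall


gts = [(0, 0, 10, 10), (50, 50, 60, 60)]
preds = [(0.9, (1, 1, 11, 11)), (0.8, (100, 100, 110, 110))]
print(precision_recall_at(preds, gts, iou_thr=0.5))   # (0.5, 0.5)
print(precision_recall_at(preds, gts, iou_thr=0.75))  # (0.0, 0.0)
```

At 0.5, the slightly shifted 10×10 prediction (IoU of about 0.68) counts as a hit; at 0.75, the same prediction is rejected.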

Implementing Distance-based Metrics:

By evaluating the distance between the centers of predicted and ground truth bounding boxes, this approach directly addresses the issue of IoU sensitivity. It focuses on the proximity of detection rather than the precise overlap, which can be a more forgiving measure for small objects.

Implementation Considerations:

- Defining Distance Metrics: Common choices include Euclidean distance or Manhattan distance. The selection depends on the specific requirements of the task and the expected distribution of object positions within images.

- Normalization and Thresholding: To ensure fairness and comparability across different image sizes and object scales, distances should be normalized relative to image dimensions or object sizes. Additionally, establishing a maximum allowable distance for a detection to be considered correct is crucial for maintaining a standard of localization accuracy.

- Integration with Existing Metrics: Distance-based metrics can be used alongside traditional IoU-based evaluations to provide a more comprehensive view of model performance. For instance, a combined metric that incorporates both the IoU score for overlap quality and a distance-based score for spatial accuracy could offer a balanced assessment of detection capabilities.
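The distance-based approach can be sketched in Python as follows. Assumptions (all illustrative, not a standard metric definition): distances are normalized by the ground-truth box diagonal, `max_dist=0.5` is a hypothetical tolerance, and matching is greedy and one-to-one in descending confidence order, mirroring the IoU-based protocol.

```python
import math
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Pred = Tuple[float, Box]                 # (confidence score, box)


def center(b: Box) -> Tuple[float, float]:
    return ((b[0] + b[2]) / 2, (b[1] + b[3]) / 2)


def normalized_center_distance(pred: Box, gt: Box, norm: str = "euclidean") -> float:
    """Center-to-center distance divided by the ground-truth box diagonal."""
    (px, py), (gx, gy) = center(pred), center(gt)
    dx, dy = px - gx, py - gy
    dist = math.hypot(dx, dy) if norm == "euclidean" else abs(dx) + abs(dy)  # else Manhattan
    return dist / math.hypot(gt[2] - gt[0], gt[3] - gt[1])


def distance_precision_recall(preds: List[Pred], gts: List[Box], max_dist: float = 0.5,
                              norm: str = "euclidean") -> Tuple[float, float]:
    """A prediction is correct if its normalized center distance to an
    unmatched ground truth is at most max_dist."""
    matched, tp = set(), 0
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_j, best_d = None, max_dist
        for j, gt in enumerate(gts):
            d = normalized_center_distance(box, gt, norm)
            if j not in matched and d <= best_d:
                best_j, best_d = j, d
        if best_j is not None:
            matched.add(best_j)
            tp += 1
    precision = tp / len(preds) if preds else 0.0
    recall = tp / len(gts) if gts else 0.0
    return precision, recall


gts = [(0, 0, 10, 10), (50, 50, 60, 60)]
preds = [(0.9, (1, 1, 11, 11)), (0.8, (100, 100, 110, 110))]
print(distance_precision_recall(preds, gts))  # (0.5, 0.5)
```

With size normalization, a 1-pixel diagonal shift still costs a 10×10 box 0.1 of its diagonal versus 0.01 for a 100×100 box; the forgiveness comes from the `max_dist` tolerance, so choose it (or normalize by image dimensions instead) with the scale of your objects in mind.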

Developing Better Models for Small Object Detection

The goal of enhancing small object detection goes beyond just better scores on evaluation metrics; it’s about fundamentally improving how models detect and classify small objects. This means developing model architectures that specifically address the challenges of recognizing objects with minimal detail, leading to genuine improvements in detection capabilities.

Here are three algorithmic modifications that can produce a model that is better suited for small object detection:

Optimizing the Receptive Field

Adjusting the receptive field size is critical. Smaller receptive fields or depthwise separable convolutions can focus on finer details, enhancing the detection of small objects.
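When adjusting receptive fields, the standard recurrence for a plain stack of convolutions is a useful check: each layer adds (effective kernel size - 1) × (cumulative stride) to the receptive field. The Python sketch below implements it for (kernel, stride, dilation) triples. It ignores padding offsets and skip connections, and the two example layer stacks are hypothetical.

```python
from typing import List, Tuple


def receptive_field(layers: List[Tuple[int, int, int]]) -> Tuple[int, int]:
    """Return (receptive field in input pixels, cumulative stride) for a
    sequence of (kernel, stride, dilation) convolution layers."""
    rf, jump = 1, 1
    for k, s, d in layers:
        k_eff = d * (k - 1) + 1   # dilation spreads the kernel taps apart
        rf += (k_eff - 1) * jump  # growth is scaled by the stride so far
        jump *= s
    return rf, jump


# Five stride-2 3x3 convs (downsample to stride 32) vs. keeping the last two at stride 1.
print(receptive_field([(3, 2, 1)] * 5))                     # (63, 32)
print(receptive_field([(3, 2, 1)] * 3 + [(3, 1, 1)] * 2))   # (47, 8)
```

The second stack keeps a smaller receptive field and a 4× finer output grid, which is the kind of trade-off the adjustment above aims for; dilation is an option for widening context later without further downsampling.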

Leveraging Finer Feature Maps

Employing finer feature maps allows for the retention of critical details in small objects, often overlooked by standard methods. This approach ensures that high-resolution features are carried deeper into the model, preserving the unique attributes of small objects. Adjustments like reducing convolutional layer strides or using upscaling techniques are necessary to balance detailed feature sensitivity with model performance.
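One widely used way to carry fine detail forward, popularized by Feature Pyramid Networks (FPN), is to upsample a coarse feature map and add it to a finer one. The NumPy sketch below shows only the data flow. It assumes (C, H, W) arrays with matching channel counts and omits the learned 1×1 lateral and 3×3 smoothing convolutions that a real FPN applies.

```python
import numpy as np


def upsample2x_nearest(x: np.ndarray) -> np.ndarray:
    """Nearest-neighbor 2x upsampling of a (C, H, W) feature map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def fuse_top_down(fine: np.ndarray, coarse: np.ndarray) -> np.ndarray:
    """FPN-style merge: upsample the coarse map and add it to the finer one."""
    return fine + upsample2x_nearest(coarse)


rng = np.random.default_rng(0)
fine = rng.random((64, 80, 80), dtype=np.float32)    # stride 8 for a 640x640 input
coarse = rng.random((64, 40, 40), dtype=np.float32)  # stride 16
fused = fuse_top_down(fine, coarse)
print(fused.shape)  # (64, 80, 80)
```

The fused map keeps the stride-8 resolution where small objects still occupy whole cells, while each position also receives context from the coarser level.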

Modifying Detection Heads

Adapting detection heads to focus on scales suitable for small objects or modifying them to improve accuracy for specific size ranges can significantly enhance performance.
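To illustrate why the set of head scales matters, the sketch below uses a hypothetical size-based assignment rule (not the rule of any specific detector): each object goes to the coarsest head stride at which it still spans at least `min_cells` feature-map cells. With the common strides (8, 16, 32), very small objects have no suitable head; adding a stride-4 head covers them.

```python
from typing import Optional, Sequence


def assign_head(object_px: float, strides: Sequence[int], min_cells: float = 1.0) -> Optional[int]:
    """Hypothetical rule: coarsest stride at which the object spans at least
    `min_cells` cells, or None if no head is fine enough."""
    candidates = [s for s in strides if object_px / s >= min_cells]
    return max(candidates) if candidates else None


default_heads = (8, 16, 32)
with_stride4 = (4, 8, 16, 32)
for size in (6, 12, 40):
    print(size, assign_head(size, default_heads), assign_head(size, with_stride4))
```

Under this rule, a 6-pixel object finds no head in the default configuration but is handled by the added stride-4 head, while larger objects are assigned as before.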

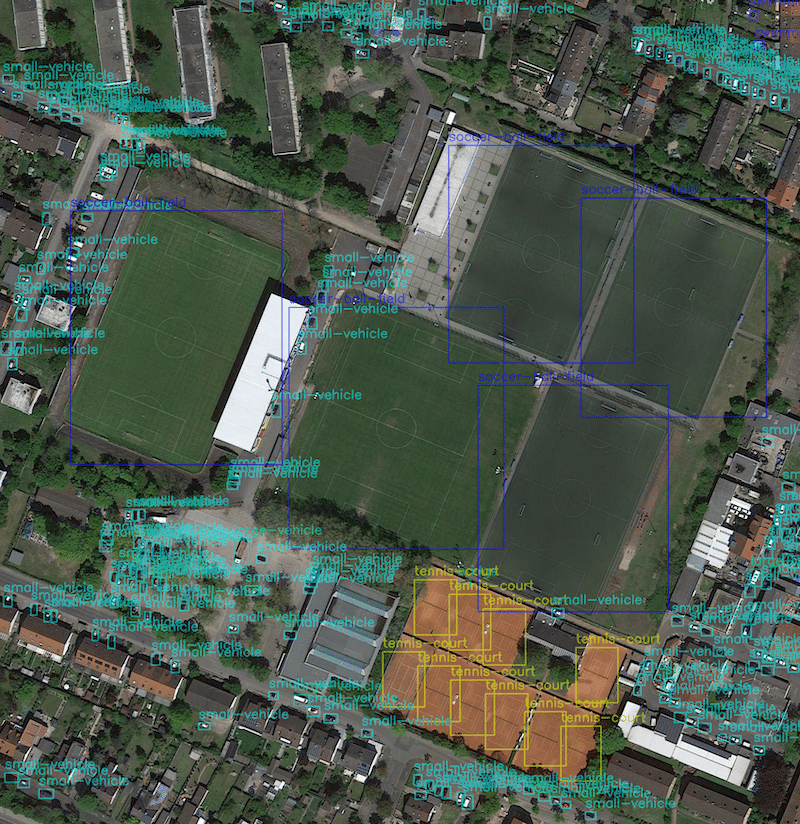

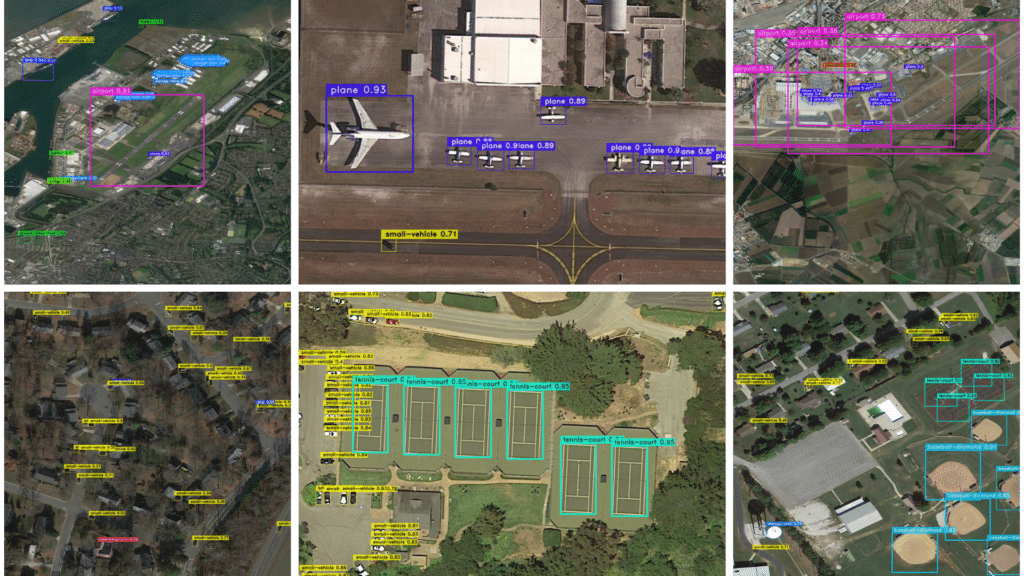

YOLO-NAS-Sat: A Specialized Architecture for Small Object Detection

Very few, if any, neural network architectures have been explicitly refined for small object detection. That is, until the introduction of YOLO-NAS-Sat. Derived from the state-of-the-art object detection model YOLO-NAS, YOLO-NAS-Sat has an architecture specifically optimized for accuracy and efficiency in small object detection on edge devices such as the NVIDIA Jetson AGX Orin. Additionally, YOLO-NAS-Sat was fine-tuned on the DOTA 2.0 dataset, an extensive collection of aerial images designed for object detection and analysis, featuring diverse objects across multiple categories.

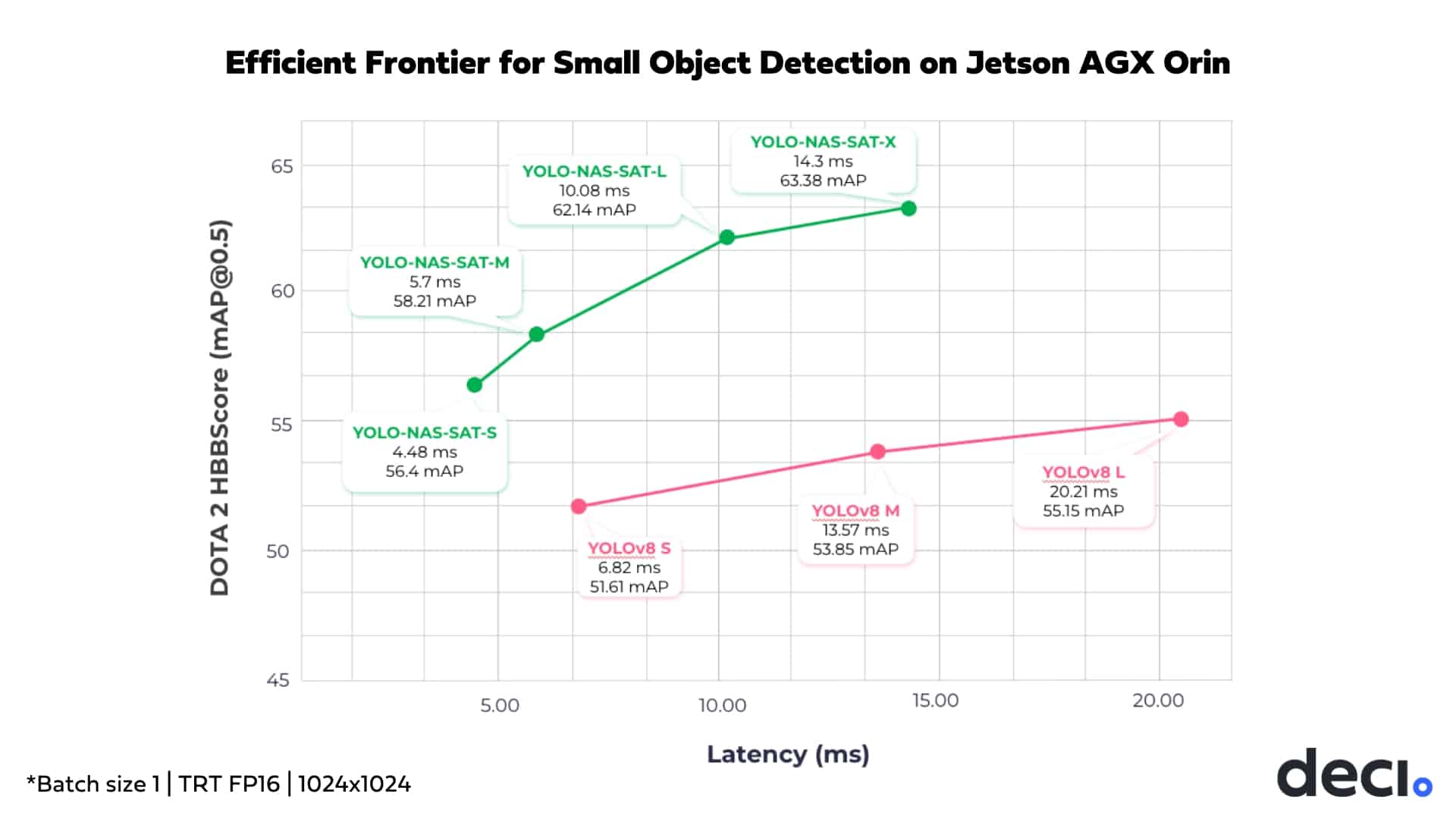

The result is a model that outperforms models such as YOLOv8 in both latency and accuracy in small object detection.

YOLO-NAS-Sat Highlights

- YOLO-NAS-Sat comes in four sizes: Small, Medium, Large, and X-Large.

- The models are 1.52x-2.38x faster than the corresponding YOLOv8 models on NVIDIA AGX Orin.

- The models’ mAP scores on DOTA 2.0 are 4.79 to 6.99 points higher than those of the corresponding YOLOv8 models, after both sets of models were fine-tuned on DOTA 2.0 in exactly the same way.

- YOLO-NAS-Sat is INT8 quantization friendly

YOLO-NAS-Sat provides a ready-made solution for a variety of small object detection use cases. It eliminates extensive experimentation and fine-tuning, facilitating a quicker transition from design to deployment.

Conclusion

In addressing the intricate challenges of small-object detection, this article has highlighted crucial use cases, pinpointed specific obstacles, and outlined targeted solutions.

Essential Takeaways

Architectural Refinements: Enhancing model architecture by optimizing receptive fields, preserving finer feature maps (for instance, through skip connections), and customizing detection heads is vital for improving detection accuracy for small objects amid complex imagery.

Evaluation Metric Adjustments: The examination of conventional metrics’ limitations underscores the necessity for adopting or adapting metrics that accurately reflect the challenges of detecting small objects. This includes exploring metrics that minimize the impact of minor localization errors and provide a more comprehensive performance assessment.

Development Efficiency with YOLO-NAS-Sat: Optimized for small object detection on edge devices, YOLO-NAS-Sat shortens development timelines for small object detection projects. It eliminates extensive experimentation and fine-tuning, facilitating a quicker transition from design to deployment, which is essential for time-sensitive and real-time applications.

To learn more about YOLO-NAS-Sat and how it might help you reach production faster, we invite you to request a free demo of YOLO-NAS-Sat.