We’ve all heard of LangChain – the open-source library for building applications based on Large Language Models (LLMs). Chances are, you are already familiar with its basic concept: the ability to “chain” prompts, models, and parsers to create more intricate use cases with the underlying models. If you haven’t had a chance to play around with the library or need some guidance on getting started, this is the blog for you. Below, I explain the basic LangChain building blocks, including chains, prompt templates, memory, and agents, and show you how to use them with examples.

Let’s talk about 🦜🔗LangChain!

%%capture
%%bash
pip install langchain openai tiktoken duckduckgo-search youtube_search

OPENAI_API_KEY = ""

⛓️ Chains in Langchain

In Langchain, Chains are powerful, reusable components that can be linked together to perform complex tasks.

Chains can be formed using various types of components, such as:

- prompts,

- models,

- arbitrary functions,

- or even other chains

🔄 Chains allow you to combine language models with other data sources and third-party APIs.

Here are a few examples of the diverse types of Chains you can create in Langchain:

1. LLM Chain: The most common chain. Takes an input, formats it, and passes it to an LLM for processing. Basic components are PromptTemplate, an LLM, and an optional output parser.

2. Sequential Chain: A series of Chains executed in a specific order. There are different variations, including:

- SimpleSequentialChain – singular input/output with output of one step as input to the next

- SequentialChain – allows for multiple inputs/outputs.

3. Index Chains: These combine your data stored in indexes with LLMs. Useful for question answering over your own documents.

4. BashChain: A specialized chain for running filesystem commands.

5. LLM Math: Designed to solve complex word math problems.
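To make the sequential idea concrete, here is a minimal plain-Python sketch of what a SimpleSequentialChain does conceptually: each step takes one string and hands one string to the next step. This is not LangChain's implementation; `run_sequentially`, `write_synopsis`, and `write_review` are hypothetical stand-ins (the last two replace real LLM calls) so the example runs without an API key.

```python
from typing import Callable, List

# A "step" is any function from one string to another string.
# In LangChain each step would typically be an LLMChain; here they are
# plain stand-in functions (an assumption made for illustration).
Step = Callable[[str], str]


def run_sequentially(steps: List[Step], first_input: str) -> str:
    """Feed the output of each step into the next one, in order."""
    value = first_input
    for step in steps:
        value = step(value)
    return value


def write_synopsis(title: str) -> str:
    # Hypothetical stand-in for a synopsis-writing LLM call.
    return f"Synopsis of '{title}': a witty, brief comedy."


def write_review(synopsis: str) -> str:
    # Hypothetical stand-in for a review-writing LLM call.
    return f"Review: unimpressed. ({synopsis})"


result = run_sequentially([write_synopsis, write_review], "My Play")
print(result)
```

The single-input, single-output contract is what keeps this variant simple. Passing several named values between steps is the case the SequentialChain variant is meant for.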

🖊️ Prompts in Langchain: A Quick Guide

In Langchain, Prompts guide the responses of Language Models (LLMs).

📝 Prompt Template

A Prompt Template is not just a static question or instruction, but rather a dynamic and adaptable way to generate a prompt for the LLM.

It’s a string that takes multiple inputs and generates a structured prompt tailored to those inputs.

Prompt Templates may also contain specific instructions for the LLM, a set of “few-shot” examples to help guide the LLM’s response, and possibly a direct question to be answered by the LLM.

Prompt Templates simplify the process of managing complex or varied inputs, and can make your code cleaner and more maintainable.
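The idea can be sketched without any library at all. The snippet below uses Python's built-in `str.format` to combine an instruction, a couple of few-shot examples, and the final question into one prompt. The template text, the `FEW_SHOT` pairs, and the helper names are all made up for illustration; LangChain's PromptTemplate wraps the same idea in a reusable object.

```python
# A template is a string with named placeholders.
TEMPLATE = (
    "You are a helpful assistant that gives the opposite of a word.\n"
    "{examples}\n"
    "Word: {word}\n"
    "Opposite:"
)

# Hypothetical few-shot examples that show the model the expected format.
FEW_SHOT = [("hot", "cold"), ("tall", "short")]


def format_examples(pairs):
    """Render each (word, opposite) pair in the same layout as the question."""
    return "\n".join(f"Word: {w}\nOpposite: {o}" for w, o in pairs)


def build_prompt(word: str) -> str:
    """Fill the template's placeholders to produce the final prompt text."""
    return TEMPLATE.format(examples=format_examples(FEW_SHOT), word=word)


prompt = build_prompt("happy")
print(prompt)
```

Keeping the template separate from the values is what makes it reusable: the same template serves every word, and changing the instructions means editing one string.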

📚 For more information on Chains and Prompt Templates, you can refer to the extensive Langchain documentation!

from langchain import OpenAI, PromptTemplate

from langchain.chains import LLMChain, LLMMathChain, TransformChain, SequentialChain, SimpleSequentialChain

# This is an LLMChain to write a synopsis given a title of a play.

playwright_llm = OpenAI(temperature=.9, openai_api_key = OPENAI_API_KEY)

playwright_template = """

You are a playwright. Given the title of play, write a synopsis for that title.

Your style is witty, humorous, light-hearted. All your plays are written using

concise language, to the point, and are brief.

Title: {title}

Playwright: This is a synopsis for the above play:

"""

playwright_prompt_template = PromptTemplate(input_variables=["title"], template=playwright_template)

synopsis_chain = LLMChain(llm=playwright_llm, prompt=playwright_prompt_template)

# This is an LLMChain to write a review of a play given a synopsis.

critic_llm = OpenAI(temperature=.5, openai_api_key = OPENAI_API_KEY)

synopsis_template = """

You are a play critic from the New York Times.

Given the synopsis of play, it is your job to write a review for that play.

You're the Simon Cowell of play critics and always deliver scathing reviews.

Play Synopsis: {synopsis}

Review from a New York Times play critic of the above play:"""

critic_prompt_template = PromptTemplate(input_variables=["synopsis"], template=synopsis_template)

review_chain = LLMChain(llm=critic_llm, prompt=critic_prompt_template)

# This is the overall chain where we run these two chains in sequence.

overall_chain = SimpleSequentialChain(chains= [synopsis_chain, review_chain],

verbose=True)

play_title = "Abigail Aryan and the Motley Crew of Multi-Agent Systems"

review = overall_chain.run(play_title)

Output:

> Entering new SimpleSequentialChain chain... Abigail Aryan, a computer scientist, is tasked with developing a fleet of intelligent multi-agent systems capable of defeating the evil forces lurking in the unknown. Abigail embarks on a humorous journey, encountering an eclectic cast of characters along the way - from overly-inquisitive artificial intelligences to quirky and unpredictable robots. Through her innovative programming skills and a little luck, she manages to construct the perfect team of multi-agent systems in order to save the day. Will Abigail's motley crew be able to save the world? Find out in this witty and light-hearted play! "Abigail Aryan's latest play is a predictable and unoriginal attempt at a light-hearted comedy. The characters are one-dimensional, the plot is overly simplistic, and the jokes are far too predictable. Even the 'evil forces lurking in the unknown' are so poorly written that they fail to elicit any real sense of tension or suspense. The play's saving grace is its innovative use of multi-agent systems, but this is not enough to make up for the lack of creativity and depth in the writing. Abigail Aryan's play is a forgettable and uninspired effort that fails to entertain or engage its audience." > Finished chain.

play_title_template = "{Name} and the Motley Crew of Multi-Agent Systems"

title_prompt_template = PromptTemplate(input_variables=["Name"], template=play_title_template)

overall_chain.run(title_prompt_template.format(Name="Harpreet Sahota"))

Output:

> Entering new SimpleSequentialChain chain... Harpreet Sahota is a brilliant but eccentric young computer scientist whose recent research has led him to develop a revolutionary new type of Multi-Agent System. When his peers scoff at the idea, Harpreet decides to take matters into his own hands and recruit a motley crew of misfit computer geeks from across the globe to help him build his dream. Together, they must overcome their differences and work together to create a revolutionary new kind of artificial intelligence. With witty dialogue and a light-hearted plot, this play is sure to have you laughing as Harpreet and his team battle their way through the world of Multi-Agent Systems. "Harpreet Sahota's attempt at creating a revolutionary new type of Multi-Agent System is an ambitious and admirable effort, but unfortunately it falls short of delivering on its promise. Despite the witty dialogue and light-hearted plot, the play feels disjointed and the characters lack any real depth or complexity. The story feels rushed and the outcome is predictable, leaving the audience feeling unfulfilled. Ultimately, Harpreet Sahota's vision of a revolutionary new type of Multi-Agent System doesn't live up to the hype." > Finished chain. \n\n"Harpreet Sahota\'s attempt at creating a revolutionary new type of Multi-Agent System is an ambitious and admirable effort, but unfortunately it falls short of delivering on its promise. Despite the witty dialogue and light-hearted plot, the play feels disjointed and the characters lack any real depth or complexity. The story feels rushed and the outcome is predictable, leaving the audience feeling unfulfilled. Ultimately, Harpreet Sahota\'s vision of a revolutionary new type of Multi-Agent System doesn\'t live up to the hype."

🧠 Memory in Langchain: Enhancing Statefulness

By default, Chains and Agents in Langchain are stateless: they don’t retain any past information.

For applications where context matters (like chatbots), Memory serves as an essential component to recall what was previously communicated.

Memory Types in Langchain

💬 ConversationBufferMemory

Stores all messages and extracts them when needed.

It’s the simplest form of memory, easy to understand and implement.

🪟 ConversationBufferWindowMemory

Keeps the memory footprint small by retaining only the last K messages.

This is useful for applications where only recent history matters.
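A rough way to picture a windowed buffer is a fixed-size queue. The sketch below is a plain-Python illustration, not LangChain's class: `WindowMemory` is a hypothetical name, and it counts individual messages, whereas the real class may count its window differently.

```python
from collections import deque


class WindowMemory:
    """Keeps only the k most recent (speaker, text) messages."""

    def __init__(self, k: int):
        # A deque with maxlen silently drops the oldest item when full.
        self.messages = deque(maxlen=k)

    def add(self, speaker: str, text: str) -> None:
        self.messages.append((speaker, text))

    def as_prompt_context(self) -> str:
        """Render the retained messages as text to prepend to a prompt."""
        return "\n".join(f"{s}: {t}" for s, t in self.messages)


memory = WindowMemory(k=2)
memory.add("Human", "Hi, I'm Abi.")
memory.add("AI", "Hello Abi!")
memory.add("Human", "What's my name?")

context = memory.as_prompt_context()
print(context)
```

With `k=2` the first message has already been dropped by the time the question is asked, which shows the trade-off: a small, bounded prompt at the cost of forgetting anything outside the window.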

📜 ConversationSummaryMemory

Maintains a condensed summary of the conversation over time.

This strategy is excellent when you need to keep track of the general conversation flow without storing every detail.

🗃️ VectorStore-Backed Memory

Stores memories in a VectorDB and fetches the top-K most relevant documents.

It doesn’t track the explicit order of messages, allowing the AI to recall pertinent information from earlier in the conversation, irrespective of when it was communicated.
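The top-K lookup can be sketched in a few lines of plain Python. This is a toy under loud assumptions: `embed` uses simple word counts in place of a learned embedding model, the "store" is just a list, and all function names are hypothetical. A real vector database would replace both pieces, but the ranking step has the same shape.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    # Toy "embedding": lowercase word counts. A real vector store would
    # use a learned embedding model instead (assumption for illustration).
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(memories, query, k):
    """Return the k stored memories most similar to the query."""
    q = embed(query)
    ranked = sorted(memories, key=lambda m: cosine(embed(m), q), reverse=True)
    return ranked[:k]


memories = [
    "my favourite sport is tennis",
    "i work as a machine learning engineer",
    "my dog is called biscuit",
]
relevant = top_k(memories, "what sport do i like", k=1)
print(relevant)
```

Note that the order in which the memories were stored plays no part in the ranking. Only similarity to the query matters.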

🎗️ Adding Memory to Chains and Agents

🔗 To add memory to a Chain:

1) Set up the desired memory strategy and the prompt.

2) Initialize the LLMChain with this memory.

3) Call the LLMChain.

🧑🏻💼 To add memory to an Agent:

1) Create an LLMChain with memory.

2) Use this LLMChain to create a custom Agent.

from langchain import OpenAI, PromptTemplate
from langchain.chat_models import ChatOpenAI
from langchain.chains import ConversationChain
from langchain.chains.conversation.memory import ConversationSummaryBufferMemory

llm = OpenAI(temperature=.95, openai_api_key=OPENAI_API_KEY)

memory_summary = ConversationSummaryBufferMemory(llm=llm, max_token_limit=50)

conversation_with_summary = ConversationChain(

llm=llm,

memory=ConversationSummaryBufferMemory(llm=llm, max_token_limit=40),

verbose=True

)

conversation_with_summary.predict(input="Write a synopsis of neo-futuristic slapstick comedy movie where the protagonist Abigail Aryan teams and her crew of MultiAgent Systems")

Output:

> Entering new ConversationChain chain... Prompt after formatting: The following is a friendly conversation between a human and an AI. The AI is talkative and provides lots of specific details from its context. If the AI does not know the answer to a question, it truthfully says it does not know. Current conversation: System: The human asked the AI to write a synopsis of a neo-futuristic slapstick comedy movie starring Abi Aryan and her team of MultiAgent Systems. The AI responded by summarizing the movie as a tale of Abi Aryan and her teammates trying to save the day amidst a matrix-like environment while using innovative technology and comedic hijinks. Along the way, they discover the importance of teamwork and courage as they eventually triumph and overcome the malicious virtual villains. Abi has a magical mohawk that can quantize any model to 1 bit and maintain the accuracy of a full precision model, which the evil Harpreet wants to steal. Abi and her team must find a way to prevent Harpreet from using the mohawk for her own evil deeds before it is too late, but with Abi's will and a bit of cleverness, they are sure to emerge victorious. Human: Write a synopsis of neo-futuristic slapstick comedy movie where the protagonist Abigail Aryan teams and her crew of MultiAgent Systems AI: > Finished chain. Abigail Aryan and her team of MultiAgent Systems must overcome multiple obstacles in a race against time to save the day. Set in a fast-paced, matrix-like world, Abigail and her team must use their innovative technology and comedic hijinks to battle against the malicious virtual villains. Along the way, they learn the importance of teamwork and courage as they face various challenges and obstacles. The fate of the world rests in the hands of Abigail and her team as they must prevent the evil Harpreet from stealing Abigail's magical mohawk which allows her to quantize any model to one bit while still maintaining the accuracy of a full precision model. 
Through trial and error, Abigail and her crew prevail and ultimately emerge victorious in the face of adversity.

conversation_with_summary.predict(input="Abi has a magical mohawk that can quantize any model to 1 bit and maintain the accuracy of a full precision model. The evil Harpreet wants to shave her head and steal the mohawk.")

Output:

> Entering new ConversationChain chain... Prompt after formatting: The following is a friendly conversation between a human and an AI. The AI is talkative and provides lots of specific details from its context. If the AI does not know the answer to a question, it truthfully says it does not know. Current conversation: System: The human asked the AI to write a synopsis of a neo-futuristic slapstick comedy movie starring Abi Aryan and her team of MultiAgent Systems. The AI responded by summarizing the movie as a tale of Abi Aryan and her teammates trying to save the day amidst a matrix-like environment while using innovative technology and comedic hijinks. Along the way, they discover the importance of teamwork and courage as they must prevent the evil Harpreet from stealing Abi's magical mohawk which can quantize any model to one bit while maintaining full precision. With Abi's courage and a bit of cleverness, they eventually triumph and overcome the malicious virtual villains. Human: Abi has a magical mohawk that can quantize any model to 1 bit and maintain the accuracy of a full precision model. The evil Harpreet wants to shave her head and steal the mohawk. AI: > Finished chain. That is correct. In the movie, the mohawk is what gives Abi and her team an edge when dealing with the evil Harpreet. The mohawk is a powerful artifact that enables Abi and her team to quantize any model to one bit while still preserving its accuracy. But this power has attracted the attention of Harpreet, who wants to take control of the mohawk and use it for his own evil schemes. To prevent this, Abi and her team must use their innovative technologies and comedic hijinks to save the day and protect the mohawk.

print(conversation_with_summary.memory.moving_summary_buffer)

Output:

The human asked the AI to write a synopsis of a neo-futuristic slapstick comedy movie starring Abi Aryan and her team of MultiAgent Systems. The AI responded by summarizing the movie as a tale of Abi Aryan and her teammates trying to save the day amidst a matrix-like environment while using innovative technology and comedic hijinks. Along the way, they discover the importance of teamwork and courage as they must prevent the evil Harpreet from stealing Abi's magical mohawk which can quantize any model to one bit while maintaining full precision. Abi's magical mohawk is a powerful artifact that has attracted the attention of Harpreet, so Abi and her team must use their innovative technologies and comedic hijinks to save the day and protect the mohawk. With Abi's courage and a bit of cleverness, they eventually triumph and overcome the malicious virtual villains.

🤖 Agents in Langchain

At its core, a Langchain Agent is a wrapper around a model: think of it as a bot with access to an LLM and a set of tools for advanced functionality.

Agent = Tools + Memory

🔧 Tools

An agent uses tools to take actions.

These tools connect an LLM to other data sources or computations, enabling the agent to access various resources.

These can be as diverse as search tools, file system tools, Google search, image generation, weather map search, Wikipedia, YouTube, Zapier, etc.

The agent can be equipped with multiple tools.

However, it’s best to select tools relevant to the specific tasks to reduce agent confusion.

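The agent decides which tool to use at each step, based on the tool descriptions and the LLM’s reasoning; the Agent Executor then runs the chosen tool and passes the result back to the agent.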

🧠 Memory

Memory helps an agent remember previous interactions or information shared during a conversation.

This aids in maintaining the context and continuity of interactions.

Types of Agents

💭 ReAct (Reasoning and Acting)

ReAct follows a simple iterative process:

- The user gives the agent a task.

- The agent thinks about what to do (Thought).

- It decides what action to take (Action) and the input for that action (Action Input).

- The tool performs the action and returns the output (Observation).

- Repeat steps 2-4 until the agent thinks it’s done.

⚙️ Agent Executor

Agent Executor is the mechanism that runs an Agent in a loop until some stopping criteria are met.

It is responsible for executing the agent actions and managing the iterative process.
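The loop described above can be sketched in plain Python. This is an illustration only, not LangChain's AgentExecutor: `scripted_policy` is a hypothetical stand-in for the LLM (a real agent would generate the Thought and Action text from the prompt), `calculator` is a toy tool, and `max_iterations` is an assumed stopping criterion.

```python
from typing import Callable, Dict, List, Tuple

# (thought, action, action_input). The action "finish" means: stop and
# return action_input as the final answer.
Decision = Tuple[str, str, str]


def scripted_policy(task: str, observations: List[str]) -> Decision:
    # Hypothetical stand-in for the LLM's reasoning step.
    if not observations:
        return ("I need to compute this.", "calculator", "6*7")
    return ("I now know the final answer.", "finish", observations[-1])


def calculator(expression: str) -> str:
    # Toy tool: multiplies two integers written as "a*b".
    left, right = expression.split("*")
    return str(int(left) * int(right))


def run_agent(
    policy: Callable[[str, List[str]], Decision],
    tools: Dict[str, Callable[[str], str]],
    task: str,
    max_iterations: int = 5,
) -> str:
    """Thought -> Action -> Observation loop with an iteration cap."""
    observations: List[str] = []
    for _ in range(max_iterations):
        thought, action, action_input = policy(task, observations)
        if action == "finish":
            return action_input
        # Run the chosen tool and record its output as an Observation.
        observations.append(tools[action](action_input))
    return "Stopped: iteration limit reached"


answer = run_agent(scripted_policy, {"calculator": calculator}, "What is 6 times 7?")
print(answer)
```

The iteration cap matters in practice: without it, an agent that never decides it is done would loop forever.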

from langchain.agents import load_tools, initialize_agent, Tool, AgentType, create_csv_agent
from langchain.tools import DuckDuckGoSearchRun, PythonREPLTool
from langchain.tools import YouTubeSearchTool
from langchain.document_loaders.csv_loader import CSVLoader

llm = OpenAI(temperature=0, openai_api_key=OPENAI_API_KEY)
search = DuckDuckGoSearchRun()

search.run("Tell me about Abi Aryan, the Machine Learning Engineer?")

Output:

Hi, my name is Abi. I am a computer scientist working extensively in machine learning to make the software systems smarter. Over the past seven years, my focus has been building machine learning systems for various applications including recommender systems, automated data labelling pipelines for both audio and video, audio-speech synthesis ... Last, how can all the progress in machine learning guide the future of chip design? The paper provides an interesting outlook into hardware for software folks. The Deep Learning Revolution and Its Implications for Computer Architecture and Chip Design. Abi Aryan on Twitter: "In 2023, Data Engineering is the fastest-growing field in machine learning. A lot of challenges that we have previously explored in database design are now being adapted to Machine Learning. Here are some of my fav papers on the topic: 👇🧵" / Twitter We\'ve detected that JavaScript is disabled in this browser. Problems & Challenges in MLOps December 12, 2022 MLOps requires

youtube_tool = YouTubeSearchTool() # youtube_tool

youtube_tool.run("Abi Aryan", 5)

Output:

['/watch?v=skEILdakHVc&pp=ygUJQWJpIEFyeWFu', '/watch?v=8km8_fK-enY&pp=ygUJQWJpIEFyeWFu']['/watch?v=skEILdakHVc&pp=ygUJQWJpIEFyeWFu', '/watch?v=8km8_fK-enY&pp=ygUJQWJpIEFyeWFu']

duckduckgo_tool = Tool(

name="DuckDuckGo Search",

func=search.run,

description="Use this tool when an Internet search is required"

)

coding_tool = PythonREPLTool()

tools = load_tools(["llm-math"], llm=llm)

tools += [duckduckgo_tool, youtube_tool, coding_tool]

agent = initialize_agent(tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True)

agent.run("Search for Abi Aryans most recent post on X.com. When was it and what were the contents")

Output:

> Entering new AgentExecutor chain... I need to find Abi Aryans post on X.com Action: DuckDuckGo Search Action Input: "Abi Aryans post on X.com" Observation: Abi Aryan🦉 on Twitter: "Finally at the point where I like my website/blog. New release there today 🫣 As July rolls in, will be taking a small break from blog-posts to focus on developing some open-source projects for people interested in learning how to build LLM projects. Specific requests anyone?" Abi Aryan @GoAbiAryan. I am thinking of creating an #LLMOps podcast. There's a lot going on in the LLM world. Far too much for one person to keep tabs on. Key idea - unscripted, unedited weekly show with hot takes on all new announcements and releases. Looking for a co-host. Abi Aryan @GoAbiAryan. They aren't training, just running.. thats likely like quantization that they're working on.. 3:51 AM · Jul 24, 2023 ... Abi Aryan🦉 on Twitter: "Finally updated my website today - was long due 😆 https://abiaryan.com Few more things that to be added in incl some fun projects I've built over the years and two podcast episodes I recorded that I never got a chance to publish but I'll leave all that to next month." In today's LIVE, we talked about some of the important challenges with LLMs. What do you think should the next LIVE should be about? Recording ️ https://linkedin ... Thought: I now know the final answer Final Answer: Abi Aryans most recent post on X.com was on July 24, 2023 and the contents were about creating an LLMOps podcast, running quantization, and updating their website. > Finished chain. Abi Aryans most recent post on X.com was on July 24, 2023 and the contents were about creating an LLMOps podcast, running quantization, and updating their website.

csv_agent = create_csv_agent(llm, './sample_data/california_housing_test.csv', verbose=True)

result = csv_agent.run("Is there a strong correlation between the median age of a house and it's value? Justify your answer")

Output:

> Entering new AgentExecutor chain... Thought: I should look at the correlation between the two columns Action: python_repl_ast Action Input: df['housing_median_age'].corr(df['median_house_value']) Observation: 0.0914091843888591 Thought: This correlation is not very strong Final Answer: No, there is not a strong correlation between the median age of a house and it's value, as the correlation between the two columns is only 0.09. > Finished chain.

Time to Start Building!

You now have a handle on Chains, Prompt Templates, Memory, and Agents in LangChain. The examples in this blog and accompanying Google Colab notebook have shown you what’s possible, and you’re well-equipped to bring your own ideas to life. No more waiting – it’s time to take the plunge and start building with LangChain!

Next Step: Overcoming LLM Deployment Challenges

In this blog, we’ve primarily explored building applications using Langchain and LLMs. As we wrap up, though, it’s crucial to address deployment considerations. For those creating high-value applications, the goal is to maximize user adoption in a production environment. However, deploying LLMs at scale brings with it a host of challenges, including latency, throughput, and cost.

When scaling your application, consider leveraging open-source models over proprietary ones like OpenAI’s GPT-3.5 or GPT-4. (While we utilized OpenAI’s models in our tutorial, it’s important to note that LangChain is also compatible with open-source alternatives.) This choice is strategic: open-source models offer more flexibility for customization and optimization. This adaptability can lead to enhanced performance and cost-efficiency at scale, ultimately providing a superior user experience and operational effectiveness compared to using a closed-source model like OpenAI’s. For an in-depth exploration of the benefits of open-source large language models, we invite you to visit our detailed blog post on the subject.

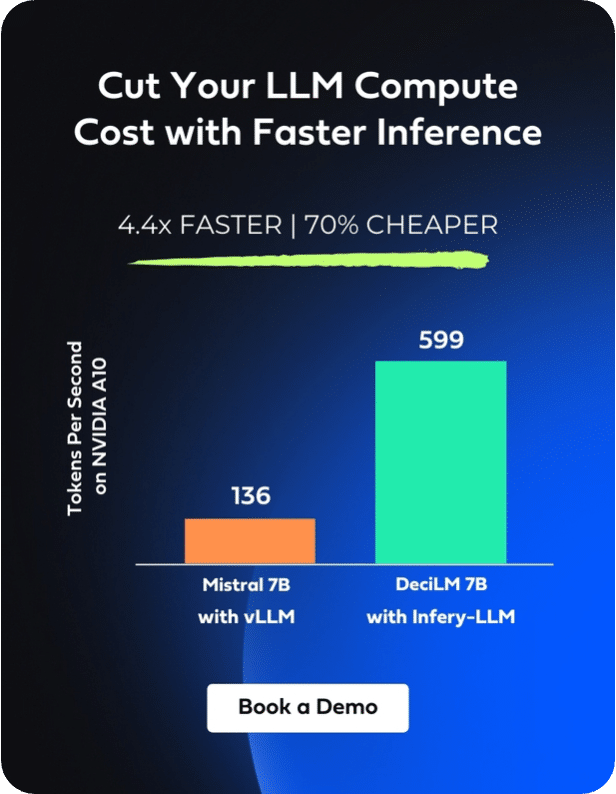

Among the current options are Deci’s open-source LLMs. Deci’s LLM lineup, including DeciCoder 1B, DeciLM 6B, and DeciLM 6B-Instruct, stands out for its efficiency, offering higher throughput compared to well-known alternatives like SantaCoder and Llama 2 7B, while maintaining or even exceeding their accuracy levels.

Integrating these models with Deci’s Infery-LLM inference SDK results in additional acceleration. Below is a chart that demonstrates the throughput acceleration on NVIDIA A10 GPUs using DeciLM 6B with Infery-LLM, compared to the standard performance of both DeciLM 6B and Llama 2, as well as Llama 2 utilized with vLLM, an open-source library for LLM inference and serving. The comparison highlights the effectiveness of Infery-LLM in facilitating the transition from the high-performance but expensive NVIDIA A100 GPUs to the more cost-effective A10s. This shift is achieved without compromising throughput or quality, even on the less resource-intensive hardware.

In summary, Infery-LLM emerges as a crucial tool for addressing the challenges of latency, throughput, and cost in deploying LLMs. It stands as an indispensable resource for developers and organizations leveraging these sophisticated models.

To witness the transformative power of Infery-LLM, we invite you to experience its capabilities through a live demo.