Object detection is a computer vision task that involves identifying and locating objects in images or videos. It is an important part of many applications, such as self-driving cars, robotics, and video surveillance. There are various computer vision problems in a surveillance system, and Object Tracking is one of them.

Object tracking is a method of following detected objects across frames using their spatial and temporal features. In this blog post, we will implement two of the most popular tracking algorithms, SORT and DeepSORT, with YOLO-NAS. In addition, we will see how to use YOLO-NAS for object tracking on a custom dataset (i.e., ship detection). We will also create an application for vehicle counting (entering and leaving) by applying YOLO-NAS and SORT.

Contents

(1) Quick Primer on Object Tracking

(3) SORT and DeepSORT Algorithms

(4) Object Detection using YOLO-NAS

(5) Object Tracking using YOLO-NAS and DeepSORT

(6) Vehicle Counting (Entering and Leaving) with YOLO-NAS and SORT

(7) Vehicle Counting (Entering and Leaving) with YOLO-NAS and DeepSORT

(8) YOLO-NAS for Object Tracking on a Ship Detection Custom Dataset

Quick Primer on Object Tracking

Object tracking in deep learning is the task of predicting the positions of objects throughout a video using spatial and temporal features. In this task, we first get an initial set of detections, then we assign a unique ID to each detected object and track the detected objects throughout the frames of the video feed while maintaining the assigned IDs.

Object tracking has many potential real-world applications.

Traffic Monitoring:

Tracking can be used to monitor traffic and follow vehicles on the road. It can also be used to assess traffic flow and detect violations.

Sports:

Tracking can also be used in various sports to track the ball or players.

Types of Trackers

Trackers can be classified into two categories: single-object trackers and multiple-object trackers.

Single Object Tracker:

These types of trackers track only a single object even if there are many other objects present in the frame. They work by first initializing the location of the object in the first frame, and then tracking it throughout the sequence of frames.

Multiple Object Tracker:

These types of trackers can track multiple objects present in a frame. Unlike traditional trackers, modern multiple object trackers typically rely on models trained on a large amount of data. Some of the multiple object tracking algorithms include SORT, DeepSORT, JDE, CenterTrack, and ByteTrack.

SORT and DeepSORT Object Tracking Algorithms

Simple Online Real-Time Tracking (SORT)

SORT is an approach in which a Kalman filter and the Hungarian algorithm are used to track objects. SORT consists of four key components: detection, estimation, data association, and creation and deletion of track identities.
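The data association component can be sketched as follows. This is a minimal illustration and not the code in sort.py: it scores each track/detection pair by intersection over union (IoU) and, as a stand-in for the Hungarian algorithm, brute-forces the best one-to-one assignment, which is only practical for a handful of boxes. The function names `iou` and `associate` are hypothetical, and the default threshold of 0.3 simply mirrors the `iou_threshold` value passed to `Sort` later in this post.

```python
from itertools import permutations


def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def associate(tracks, detections, iou_threshold=0.3):
    """Return (track_index, detection_index) pairs that maximise total IoU.

    Brute force over permutations: a stand-in for the Hungarian algorithm
    that is only suitable for small, illustrative inputs.
    """
    n_t, n_d = len(tracks), len(detections)
    if n_t == 0 or n_d == 0:
        return []
    tracks_are_fewer = n_t <= n_d
    small, large = (n_t, n_d) if tracks_are_fewer else (n_d, n_t)
    best_pairs, best_score = [], -1.0
    for perm in permutations(range(large), small):
        # Each item of the smaller set is paired with a distinct item of the larger set
        pairs = [(i, j) if tracks_are_fewer else (j, i) for i, j in enumerate(perm)]
        score = sum(iou(tracks[t], detections[d]) for t, d in pairs)
        if score > best_score:
            best_pairs, best_score = pairs, score
    # Pairs that overlap too little are treated as unmatched
    return [(t, d) for t, d in best_pairs if iou(tracks[t], detections[d]) >= iou_threshold]


tracks = [(0, 0, 10, 10), (20, 20, 30, 30)]
detections = [(21, 21, 31, 31), (1, 1, 11, 11), (100, 100, 110, 110)]
print(associate(tracks, detections))  # [(0, 1), (1, 0)]
```

The third detection overlaps no existing track, so it stays unmatched; in SORT, an unmatched detection like this is what starts a new track identity.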

SORT performs very well in terms of tracking precision and accuracy, but it has some limitations.

Limitations of SORT Algorithm:

- SORT returns tracks with a relatively high number of identity switches.

- SORT fails under occlusion, because its association metric relies only on bounding-box overlap and predicted motion, with no appearance information.
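To see why occlusion leads to identity switches, here is a simplified sketch of how track identities are created and deleted. It is an illustration under stated assumptions, not the logic in sort.py: the `Track` and `TrackManager` classes are hypothetical, matching is supplied by hand rather than computed, and the parameter names `max_age` and `min_hits` are borrowed from the `Sort` constructor used later in this post.

```python
class Track:
    """Bookkeeping for one tracked object (hypothetical, simplified)."""

    def __init__(self, track_id):
        self.track_id = track_id
        self.hits = 1    # frames in which this track was matched to a detection
        self.misses = 0  # consecutive frames without a matching detection


class TrackManager:
    def __init__(self, max_age=20, min_hits=3):
        self.max_age = max_age    # misses tolerated before a track is deleted
        self.min_hits = min_hits  # matches required before a track is reported
        self.tracks = {}
        self.next_id = 1

    def step(self, matched_ids, num_new_detections):
        """Advance one frame and return the ids of tracks reported this frame."""
        for track_id in list(self.tracks):
            track = self.tracks[track_id]
            if track_id in matched_ids:
                track.hits += 1
                track.misses = 0
            else:
                track.misses += 1
                if track.misses > self.max_age:
                    del self.tracks[track_id]
        # Every detection that matched no track starts a new identity
        for _ in range(num_new_detections):
            self.tracks[self.next_id] = Track(self.next_id)
            self.next_id += 1
        return sorted(
            t.track_id for t in self.tracks.values()
            if t.misses == 0 and t.hits >= self.min_hits
        )


manager = TrackManager(max_age=2, min_hits=2)
reported = [
    manager.step(set(), 1),  # frame 1: first detection starts track 1
    manager.step({1}, 0),    # frame 2: matched again, track 1 is confirmed
    manager.step(set(), 0),  # frame 3: object occluded, no detection
    manager.step(set(), 0),  # frame 4: still occluded
    manager.step(set(), 0),  # frame 5: third miss exceeds max_age, track 1 is deleted
    manager.step(set(), 1),  # frame 6: object reappears and starts track 2
    manager.step({2}, 0),    # frame 7: the same object is now reported as id 2
]
print(reported)  # [[], [1], [], [], [], [], [2]]
```

The same physical object ends up with two different ids because nothing other than position links the reappearing detection to the deleted track.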

DeepSORT

DeepSORT is a Multiple Object Tracking (MOT) algorithm that extends SORT to improve tracking accuracy and reliability. It identifies and tracks objects using an association metric that integrates both motion and appearance descriptors. Because it considers object appearance in addition to velocity and motion, DeepSORT produces fewer identity switches and can re-identify an object after it has been occluded.

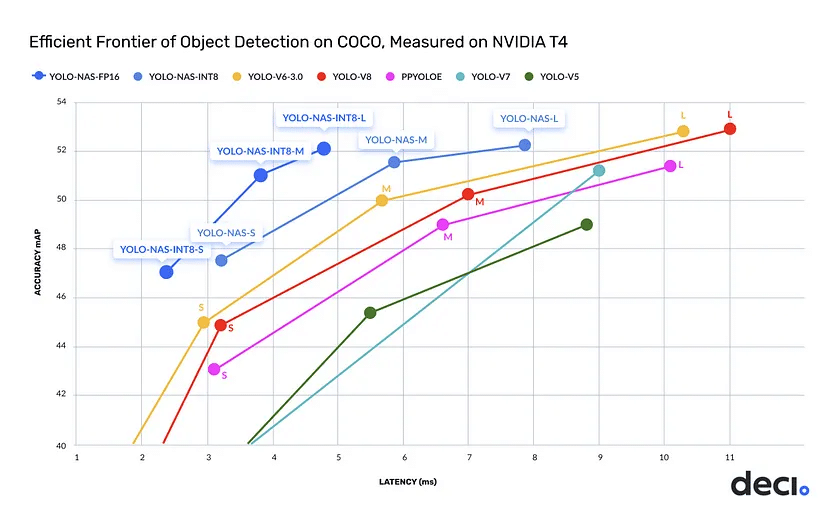
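The idea of combining motion and appearance can be sketched with a weighted sum of two distances. This is an illustrative sketch under stated assumptions, not the DeepSORT implementation: the appearance term is a cosine distance between feature vectors, the motion term is passed in as a made-up number (DeepSORT derives it from the Kalman filter state), and the names `cosine_distance`, `combined_cost`, and `lam` are hypothetical.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity between two non-zero feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def combined_cost(motion_distance, appearance_distance, lam=0.5):
    """Weighted sum of the motion and appearance distances (lam weights motion)."""
    return lam * motion_distance + (1.0 - lam) * appearance_distance


# Appearance feature stored for an existing track (toy 3-dimensional vector)
track_feature = [0.9, 0.1, 0.0]

# Two detections at a similar position, so motion alone cannot separate them
candidates = {
    "same_object": [0.8, 0.2, 0.0],
    "other_object": [0.0, 0.1, 0.9],
}
motion_distance = 0.4  # assumed identical for both candidates

costs = {
    name: combined_cost(motion_distance, cosine_distance(track_feature, feature))
    for name, feature in candidates.items()
}
best = min(costs, key=costs.get)
print(best)  # same_object
```

With motion alone the two candidates would tie; the appearance term breaks the tie, which is the mechanism that helps DeepSORT keep identities stable.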

Object Detection using YOLO-NAS

YOLO-NAS, created by Deci AI, is a state-of-the-art object detection model built with AutoNAC, Deci’s proprietary Neural Architecture Search technology. It outperforms competing models in speed and accuracy, and it improves on key aspects of existing YOLO models, including quantization support and the accuracy-latency tradeoff.

YOLO-NAS enhances the ability to detect small objects, improves localization accuracy, and increases the performance-per-compute ratio, making the model more accessible for real-time edge device applications.

Tools

For this project, we use Google Colab, which provides a GPU with about 15 GB of memory. Let’s explore the software tools we will use to implement YOLO-NAS with SORT and DeepSORT tracking in Python.

(i) SuperGradients

The YOLO-NAS model is available under an open-source license, with pre-trained weights available for non-commercial use, on SuperGradients, Deci’s PyTorch-based, open-source computer vision training library.

(ii) Simple Online Real-Time Tracking (SORT) Object Tracking

SORT is an algorithm that estimates the location of an object based on its past location using the Kalman filter.

(iii) Deep Simple Online Real-Time Tracking (DeepSORT)

DeepSORT is an object tracking algorithm that combines a deep learning-based appearance descriptor with the SORT tracking framework to achieve high accuracy and robustness in crowded and complex environments.
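To make the Kalman filter idea from item (ii) concrete, here is a minimal one-dimensional constant-velocity filter written in plain Python. It is a sketch under simplifying assumptions, not what sort.py does: SORT tracks a full bounding-box state and relies on the FilterPy package, whereas this example tracks a single coordinate. The class name `ConstantVelocityKalman1D` and its noise parameters are hypothetical.

```python
class ConstantVelocityKalman1D:
    """Kalman filter with state [position, velocity] and position-only measurements."""

    def __init__(self, position, velocity=0.0, process_var=1e-2, measurement_var=1.0):
        self.x = [position, velocity]
        # Initial covariance: the velocity is far less certain than the position
        self.P = [[1.0, 0.0], [0.0, 100.0]]
        self.q = process_var
        self.r = measurement_var

    def predict(self, dt=1.0):
        """Project the state forward by dt and return the predicted position."""
        p, v = self.x
        self.x = [p + v * dt, v]
        (a, b), (c, d) = self.P
        # P = F P F^T + Q with F = [[1, dt], [0, 1]] and Q = q * I
        self.P = [
            [a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
            [c + dt * d, d + self.q],
        ]
        return self.x[0]

    def update(self, z):
        """Correct the state with a measured position z and return the new estimate."""
        (a, b), (c, d) = self.P
        residual = z - self.x[0]
        s = a + self.r             # innovation variance
        k0, k1 = a / s, c / s      # Kalman gain for position and velocity
        self.x = [self.x[0] + k0 * residual, self.x[1] + k1 * residual]
        # P = (I - K H) P with H = [1, 0]
        self.P = [[(1 - k0) * a, (1 - k0) * b], [c - k1 * a, d - k1 * b]]
        return self.x[0]


# An object moving 2 pixels per frame; the filter starts with zero velocity
kf = ConstantVelocityKalman1D(position=0.0)
for frame_idx in range(1, 11):
    kf.predict()
    kf.update(2.0 * frame_idx)

print(round(kf.x[1], 2))      # estimated velocity, close to 2
print(round(kf.predict(), 2)) # predicted position for frame 11, close to 22
```

The prediction for the next frame is what a tracker compares against new detections, so the filter lets the tracker anticipate where each object should appear.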

Step-by-Step Guide

Let us go through the step-by-step guide on how to implement object tracking with YOLO-NAS and SORT/ DeepSORT algorithms.

The accompanying code for this guide can be found in this Google Colab Notebook.

Step 1- Install All the Required Packages

The first step is to install the required packages: SuperGradients and FilterPy. YOLO-NAS is included in the SuperGradients package, which we need for object detection on images and videos. The FilterPy package is required to implement object tracking with the SORT algorithm.

    !pip install -q super-gradients==3.1.3
    !pip install filterpy==1.1.0

After installing the SuperGradients and FilterPy packages, please make sure to restart the runtime.

Step 2 – Import All the Required Libraries

After installing the necessary packages, we’ll import the essential libraries, including OpenCV, SuperGradients, and NumPy. The opencv-python package is used to read, display, and process images, to handle input videos frame by frame, and to display and save the output video. Since YOLO-NAS is part of the SuperGradients package, we’ll import models from the SuperGradients training library, which gives access to the pretrained YOLO-NAS model weights.

    import cv2
    import torch
    from super_gradients.training import models
    import numpy as np
    import math
    from numpy import random

Step 3 – Download the Pretrained YOLO-NAS Model Weights

Now that we have installed the required packages and imported the required libraries, we will download the pre-trained YOLO-NAS model weights. YOLO-NAS comes in three different model sizes: small (S), medium (M), and large (L). YOLO-NAS-S is the fastest, but it is less accurate than the other YOLO-NAS models. YOLO-NAS-L is the most accurate but the slowest of the YOLO-NAS models. We will use the small and large versions of the YOLO-NAS models and the pre-trained weights from the COCO dataset in this project.

    # Use the GPU when one is available, otherwise fall back to the CPU
    device = torch.device("cuda:0") if torch.cuda.is_available() else torch.device("cpu")
    model = models.get('yolo_nas_s', pretrained_weights="coco").to(device)

Step 4 – Object Detection with YOLO-NAS on Video

To perform object detection on a video, we loop through the video frame by frame, detect the objects in each frame, and save the annotated frames to an output video with OpenCV’s VideoWriter.

    # Assumed setup (not shown in the original snippet): open the input video
    # and read its frame size. "input.mp4" is a placeholder path.
    cap = cv2.VideoCapture("input.mp4")
    frame_width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
    frame_height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
    # `names` is assumed to be the list of COCO class names defined earlier in the notebook.
    out = cv2.VideoWriter('output1.avi', cv2.VideoWriter_fourcc('M', 'J', 'P', 'G'), 20, (frame_width, frame_height))
    count = 0
    while True:
        ret, frame = cap.read()
        count += 1
        if ret:
            # Run YOLO-NAS on the current frame and unpack the predictions
            result = list(model.predict(frame, conf=0.5))[0]
            bbox_xyxys = result.prediction.bboxes_xyxy.tolist()
            confidences = result.prediction.confidence
            labels = result.prediction.labels.tolist()
            for (bbox_xyxy, confidence, cls) in zip(bbox_xyxys, confidences, labels):
                bbox = np.array(bbox_xyxy)
                x1, y1, x2, y2 = bbox[0], bbox[1], bbox[2], bbox[3]
                x1, y1, x2, y2 = int(x1), int(y1), int(x2), int(y2)
                classname = int(cls)
                class_name = names[classname]
                conf = math.ceil((confidence*100))/100
                label = f'{class_name} {conf}'
                print("Frame N", count, "", x1, y1, x2, y2)
                # Draw the bounding box, then a filled label background and the label text
                cv2.rectangle(frame, (x1, y1), (x2, y2), color=(0, 0, 255), thickness=2, lineType=cv2.LINE_AA)
                t_size = cv2.getTextSize(str(label), 0, fontScale=1/2, thickness=1)[0]
                c2 = x1 + t_size[0], y1 - t_size[1] - 3
                cv2.rectangle(frame, (x1, y1), c2, color=(0, 0, 255), thickness=-1, lineType=cv2.LINE_AA)
                cv2.putText(frame, str(label), (x1, y1-2), 0, 1/2, [255, 255, 255], thickness=1, lineType=cv2.LINE_AA)
            # Write the annotated frame once all detections are drawn
            out.write(frame)
        else:
            break
    out.release()
    cap.release()

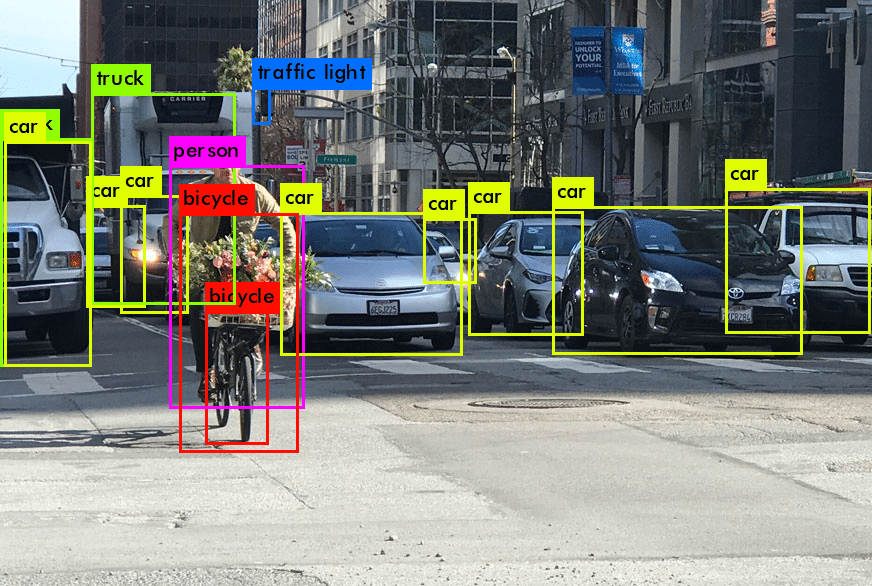

Here is the output video:

Step 5 – Object Tracking with YOLO-NAS and DeepSORT

In object tracking, a unique ID is assigned to each of the detected objects. To implement tracking, we first get an initial set of detections, then we assign a unique ID to each detected object and track the detected objects throughout the frames of the video feed while maintaining the assigned IDs.

Downloading the DeepSORT Files

DeepSORT files are downloaded from the drive into the Google Colab notebook.

!gdown "https://drive.google.com/uc?id=11ZSZcG-bcbueXZC3rN08CM0qqX3eiHxf&confirm=t"

Initialize DeepSORT

The next step is to initialize DeepSORT and create some helper functions.

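    # Import paths assume the folder layout of the downloaded deep_sort_pytorch package
    from deep_sort_pytorch.utils.parser import get_config
    from deep_sort_pytorch.deep_sort import DeepSort

    # Load the DeepSORT settings from the YAML file shipped with the package
    cfg_deep = get_config()
    cfg_deep.merge_from_file("/content/deep_sort_pytorch/configs/deep_sort.yaml")
    deepsort = DeepSort(cfg_deep.DEEPSORT.REID_CKPT,
                        max_dist=cfg_deep.DEEPSORT.MAX_DIST,
                        min_confidence=cfg_deep.DEEPSORT.MIN_CONFIDENCE,
                        nms_max_overlap=cfg_deep.DEEPSORT.NMS_MAX_OVERLAP,
                        max_iou_distance=cfg_deep.DEEPSORT.MAX_IOU_DISTANCE,
                        max_age=cfg_deep.DEEPSORT.MAX_AGE,
                        n_init=cfg_deep.DEEPSORT.N_INIT,
                        nn_budget=cfg_deep.DEEPSORT.NN_BUDGET,
                        use_cuda=torch.cuda.is_available())  # avoid failing on CPU-only runtimes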

A helper function, draw_boxes, draws a bounding box around each detected object and labels it with its track ID and class name. After getting an initial set of detections with YOLO-NAS, we assign a unique ID to each of the detected objects.

    # `compute_color_for_labels` and `classNames` are assumed to be defined elsewhere in the notebook.
    def draw_boxes(img, bbox, identities=None, categories=None, names=None, offset=(0, 0)):
        class_names = names if names is not None else classNames
        for i, box in enumerate(bbox):
            x1, y1, x2, y2 = [int(v) for v in box]
            # Shift the box by the (x, y) offset
            x1 += offset[0]
            x2 += offset[0]
            y1 += offset[1]
            y2 += offset[1]
            cat = int(categories[i]) if categories is not None else 0
            track_id = int(identities[i]) if identities is not None else 0
            color = compute_color_for_labels(cat)
            cv2.rectangle(img, (x1, y1), (x2, y2), color=color, thickness=2, lineType=cv2.LINE_AA)
            label = str(track_id) + ":" + class_names[cat]
            t_size = cv2.getTextSize(label, cv2.FONT_HERSHEY_SIMPLEX, fontScale=1/2, thickness=1)[0]
            c2 = x1 + t_size[0], y1 - t_size[1] - 3
            # Draw on `img` (the function argument), not on a global frame
            cv2.rectangle(img, (x1, y1), c2, color=color, thickness=-1, lineType=cv2.LINE_AA)
            cv2.putText(img, label, (x1, y1-2), 0, 1/2, [255, 255, 255], thickness=1, lineType=cv2.LINE_AA)
        return img

Implementing Object Tracking

Now that we have initialized the DeepSORT module and created the helper functions, let’s implement object tracking with YOLO-NAS and DeepSORT. We will first upload a video and get an initial set of detections with YOLO-NAS. Then we assign a unique ID to each of the detected objects and track the detected objects throughout the frames of the video feed while maintaining the assigned IDs.

The code snippet is provided below.

    # `cap` (the input video) and `output` (a cv2.VideoWriter) are assumed to be created as in Step 4.
    while True:
        ret, frame = cap.read()
        if ret:
            # Reset the per-frame buffers so detections do not accumulate across frames
            xywh_bboxs, confs, oids = [], [], []
            result = list(model.predict(frame, conf=0.5))[0]
            bbox_xyxys = result.prediction.bboxes_xyxy.tolist()
            confidences = result.prediction.confidence
            labels = result.prediction.labels.tolist()
            for (bbox_xyxy, confidence, cls) in zip(bbox_xyxys, confidences, labels):
                bbox = np.array(bbox_xyxy)
                x1, y1, x2, y2 = bbox[0], bbox[1], bbox[2], bbox[3]
                x1, y1, x2, y2 = int(x1), int(y1), int(x2), int(y2)
                conf = math.ceil((confidence*100))/100
                # DeepSORT expects boxes as (center x, center y, width, height)
                cx, cy = int((x1+x2)/2), int((y1+y2)/2)
                bbox_width = abs(x1-x2)
                bbox_height = abs(y1-y2)
                xcycwh = [cx, cy, bbox_width, bbox_height]
                xywh_bboxs.append(xcycwh)
                confs.append(conf)
                oids.append(int(cls))
            xywhs = torch.tensor(xywh_bboxs)
            confss = torch.tensor(confs)
            outputs = deepsort.update(xywhs, confss, oids, frame)
            if len(outputs) > 0:
                # Each output row holds the box, then the track ID and the class ID
                bbox_xyxy = outputs[:, :4]
                identities = outputs[:, -2]
                object_id = outputs[:, -1]
                draw_boxes(frame, bbox_xyxy, identities, object_id)
            output.write(frame)
        else:
            break
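The box-format conversion inside the loop is easy to get wrong, so it can help to isolate it. The sketch below shows the conversion from corner format (x1, y1, x2, y2) to the center format (cx, cy, w, h) that is passed to DeepSORT, plus the inverse. The helper names `xyxy_to_xcycwh` and `xcycwh_to_xyxy` are hypothetical and are not part of the DeepSORT package.

```python
def xyxy_to_xcycwh(x1, y1, x2, y2):
    """Convert corner coordinates to (center x, center y, width, height)."""
    cx = (x1 + x2) / 2
    cy = (y1 + y2) / 2
    w = abs(x2 - x1)
    h = abs(y2 - y1)
    return cx, cy, w, h


def xcycwh_to_xyxy(cx, cy, w, h):
    """Convert (center x, center y, width, height) back to corner coordinates."""
    return cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2


box = (10, 20, 50, 80)
center_box = xyxy_to_xcycwh(*box)
print(center_box)                   # (30.0, 50.0, 40, 60)
print(xcycwh_to_xyxy(*center_box))  # (10.0, 20.0, 50.0, 80.0)
```

Keeping the two directions side by side makes it straightforward to check that a round trip returns the original box.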

Here is the output video:

Step 6: Vehicle Counting (Entering and Leaving) with YOLO-NAS and SORT Object Tracking

Using object detection and tracking together, we will create a vehicle counting application that tracks and counts the vehicles entering and leaving a lane. For object detection we will use YOLO-NAS, and for tracking we will use the SORT algorithm. Because we are tracking with SORT, we will download the sort.py file from the drive into the Google Colab notebook.

Download the sort.py file

!gdown "https://drive.google.com/uc?id=1AhwiIb2umnJpZwunbfCKiyjanzjX_xfx&confirm=t"

The code snippet is provided below.

    from sort import Sort  # class defined in the sort.py file downloaded above

    tracker = Sort(max_age=20, min_hits=3, iou_threshold=0.3)
    count = 0
    while True:
        ret, frame = cap.read()
        count += 1
        if ret:
            # One row per detection: x1, y1, x2, y2, confidence, class
            detections = np.empty((0, 6))
            result = list(model.predict(frame, conf=0.40))[0]
            bbox_xyxys = result.prediction.bboxes_xyxy.tolist()
            confidences = result.prediction.confidence
            labels = result.prediction.labels.tolist()
            for (bbox_xyxy, confidence, cls) in zip(bbox_xyxys, confidences, labels):
                bbox = np.array(bbox_xyxy)
                x1, y1, x2, y2 = bbox[0], bbox[1], bbox[2], bbox[3]
                x1, y1, x2, y2 = int(x1), int(y1), int(x2), int(y2)
                classname = int(cls)
                class_name = classNames[classname]
                conf = math.ceil((confidence*100))/100
                currentArray = np.array([x1, y1, x2, y2, conf, cls])
                detections = np.vstack((detections, currentArray))
            tracker_dets = tracker.update(detections)
            if len(tracker_dets) > 0:
                # Column indices follow the output layout of the sort.py used in this project
                bbox_xyxy = tracker_dets[:, :4]
                identities = tracker_dets[:, 8]
                categories = tracker_dets[:, 4]
                draw_boxes(frame, bbox_xyxy, identities, categories)
            out.write(frame)
        else:
            # Stop when the video ends; without this the loop never terminates
            break
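The snippet above produces tracked boxes with stable IDs; the counting itself can be built on top of them. The sketch below is one possible approach under stated assumptions, not the notebook's code: it draws an imaginary horizontal line at `line_y`, remembers the previous center y of every track ID, and counts a vehicle once when its center crosses the line. The `LineCounter` class is hypothetical, and treating downward motion as "entering" is an arbitrary convention that depends on the camera view.

```python
class LineCounter:
    """Count track IDs whose box center crosses a horizontal line."""

    def __init__(self, line_y):
        self.line_y = line_y
        self.last_cy = {}      # track id -> center y in the previous frame
        self.entering = set()  # ids that crossed the line moving down
        self.leaving = set()   # ids that crossed the line moving up

    def update(self, track_id, cy):
        """Record the current center y of a track and register any crossing."""
        prev = self.last_cy.get(track_id)
        self.last_cy[track_id] = cy
        if prev is None:
            return  # first sighting: nothing to compare against yet
        if prev < self.line_y <= cy:
            self.entering.add(track_id)
        elif prev >= self.line_y > cy:
            self.leaving.add(track_id)


counter = LineCounter(line_y=100)

# (track id, center y) pairs as they might come out of the tracker, frame by frame
observations = [
    (1, 90), (2, 120), (3, 50),   # frame 1
    (1, 105), (2, 95), (3, 60),   # frame 2
    (1, 130), (2, 70), (3, 70),   # frame 3
]
for track_id, cy in observations:
    counter.update(track_id, cy)

print(len(counter.entering), len(counter.leaving))  # 1 1
```

In the tracking loop, `cy` would be computed from each tracked box as `(y1 + y2) / 2`. Storing IDs in sets means a vehicle that jitters around the line is still counted only once per direction.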

Here is the output video:

Step 7: Vehicle Counting (Entering and Leaving) with YOLO-NAS and DeepSORT Object Tracking

In the previous step, we explored how to count vehicles with YOLO-NAS and SORT object tracking. Let’s take this one step further and implement vehicle counting with YOLO-NAS and DeepSORT object tracking.

At the start of this blog, I explained the limitations of the SORT algorithm: a high number of identity switches and failures under occlusion. I also stated that the DeepSORT algorithm overcomes these limitations by adding an appearance descriptor. We’ll now dive deeper into DeepSORT and see its advantages in action.

As I have already initialized DeepSORT and created helper functions, I will quickly dive into the main script.

The code snippet is provided below.

    while True:
        ret, frame = cap.read()
        if ret:
            # Reset the per-frame buffers so detections do not accumulate across frames
            xywh_bboxs, confs, oids = [], [], []
            result = list(model.predict(frame, conf=0.5))[0]
            bbox_xyxys = result.prediction.bboxes_xyxy.tolist()
            confidences = result.prediction.confidence
            labels = result.prediction.labels.tolist()
            for (bbox_xyxy, confidence, cls) in zip(bbox_xyxys, confidences, labels):
                bbox = np.array(bbox_xyxy)
                x1, y1, x2, y2 = bbox[0], bbox[1], bbox[2], bbox[3]
                x1, y1, x2, y2 = int(x1), int(y1), int(x2), int(y2)
                conf = math.ceil((confidence*100))/100
                # DeepSORT expects boxes as (center x, center y, width, height)
                cx, cy = int((x1+x2)/2), int((y1+y2)/2)
                bbox_width = abs(x1-x2)
                bbox_height = abs(y1-y2)
                xcycwh = [cx, cy, bbox_width, bbox_height]
                xywh_bboxs.append(xcycwh)
                confs.append(conf)
                oids.append(int(cls))
            xywhs = torch.tensor(xywh_bboxs)
            confss = torch.tensor(confs)
            outputs = deepsort.update(xywhs, confss, oids, frame)
            if len(outputs) > 0:
                bbox_xyxy = outputs[:, :4]
                identities = outputs[:, -2]
                object_id = outputs[:, -1]
                draw_boxes(frame, bbox_xyxy, identities, object_id)
            output.write(frame)
        else:
            # Stop when the video ends; without this the loop never terminates
            break

Here is the output video:

Step 8: YOLO-NAS for Object Tracking on a Custom Dataset (Ship Detection)

We have seen how to do object tracking with YOLO-NAS and the SORT / DeepSORT algorithms. In the previous examples, we used pre-trained weights from the COCO dataset. Now we will go one step further and implement object tracking with YOLO-NAS and DeepSORT on a custom dataset. We will first train the YOLO-NAS model on a ship dataset that is publicly available on Roboflow. After training, we will integrate DeepSORT object tracking with our custom-trained YOLO-NAS model.

The code snippet is provided below.

    # `model` here is the YOLO-NAS model trained on the ship dataset
    while True:
        ret, frame = cap.read()
        if ret:
            # Reset the per-frame buffers so detections do not accumulate across frames
            xywh_bboxs, confs, oids = [], [], []
            result = list(model.predict(frame, conf=0.55))[0]
            bbox_xyxys = result.prediction.bboxes_xyxy.tolist()
            confidences = result.prediction.confidence
            labels = result.prediction.labels.tolist()
            for (bbox_xyxy, confidence, cls) in zip(bbox_xyxys, confidences, labels):
                bbox = np.array(bbox_xyxy)
                x1, y1, x2, y2 = bbox[0], bbox[1], bbox[2], bbox[3]
                x1, y1, x2, y2 = int(x1), int(y1), int(x2), int(y2)
                conf = math.ceil((confidence*100))/100
                # DeepSORT expects boxes as (center x, center y, width, height)
                cx, cy = int((x1+x2)/2), int((y1+y2)/2)
                bbox_width = abs(x1-x2)
                bbox_height = abs(y1-y2)
                xcycwh = [cx, cy, bbox_width, bbox_height]
                xywh_bboxs.append(xcycwh)
                confs.append(conf)
                oids.append(int(cls))
            xywhs = torch.tensor(xywh_bboxs)
            confss = torch.tensor(confs)
            outputs = deepsort.update(xywhs, confss, oids, frame)
            if len(outputs) > 0:
                bbox_xyxy = outputs[:, :4]
                identities = outputs[:, -2]
                object_id = outputs[:, -1]
                draw_boxes(frame, bbox_xyxy, identities, object_id)
            output.write(frame)
        else:
            break

Here is the output video:

In this article, we have covered in detail how to do object tracking with YOLO-NAS and DeepSORT/ SORT algorithms. We did object tracking on a custom dataset and built an application for object counting.

Next Steps

Now that we have implemented object tracking with YOLO-NAS and DeepSORT/ SORT, there are many exciting steps we can take to bring this project forward.

- Build a frontend interface with Streamlit that allows the user to upload a video and run vehicle counting using YOLO-NAS and the DeepSORT/ SORT algorithm.

- Dockerize and deploy the application on a cloud instance.

- Experiment with other YOLO-NAS model sizes and object-tracking algorithms to objectively evaluate the differences in inference speed and output.

YOLO-NAS and Deci’s Computer Vision Suite

In this blog, we’ve explored object tracking with DeepSORT and YOLO-NAS. As we conclude, it’s noteworthy that YOLO-NAS is a prime example of the advanced foundation models developed by Deci AI using our innovative AutoNAC engine. Each model, including YOLO-NAS, is designed for a specific computer vision task, hardware configuration, and data characteristics. This ensures exceptional accuracy, efficient memory utilization, and remarkably low latency. For those needing custom solutions, AutoNAC can be used to develop models specific to unique requirements.

Upon choosing a foundation or custom model, you can train or fine-tune it using your own data via Deci’s SuperGradients PyTorch training library. This resource offers superior training recipes with advanced techniques to enhance model quality and provide clearer visual insights. To further boost your model’s efficiency and inference speed, Deci’s Infery SDK is an invaluable tool.

Discover more about Deci’s foundation models and the Infery SDK, and see how they can transform your AI initiatives, by booking a demo with us.