Deci is excited to present YOLO-NAS-Sat, the latest in our lineup of ultra-performant foundation models, which includes YOLO-NAS, YOLO-NAS Pose, and DeciSegs. Tailored for the accuracy demands of small object detection, YOLO-NAS-Sat serves a wide array of vital uses, from monitoring urban infrastructure and assessing changes in the environment to precision agriculture. Available in four sizes—Small, Medium, Large, and X-large—this model is designed for peak performance in accuracy and speed on edge devices like NVIDIA’s Jetson Orin series.

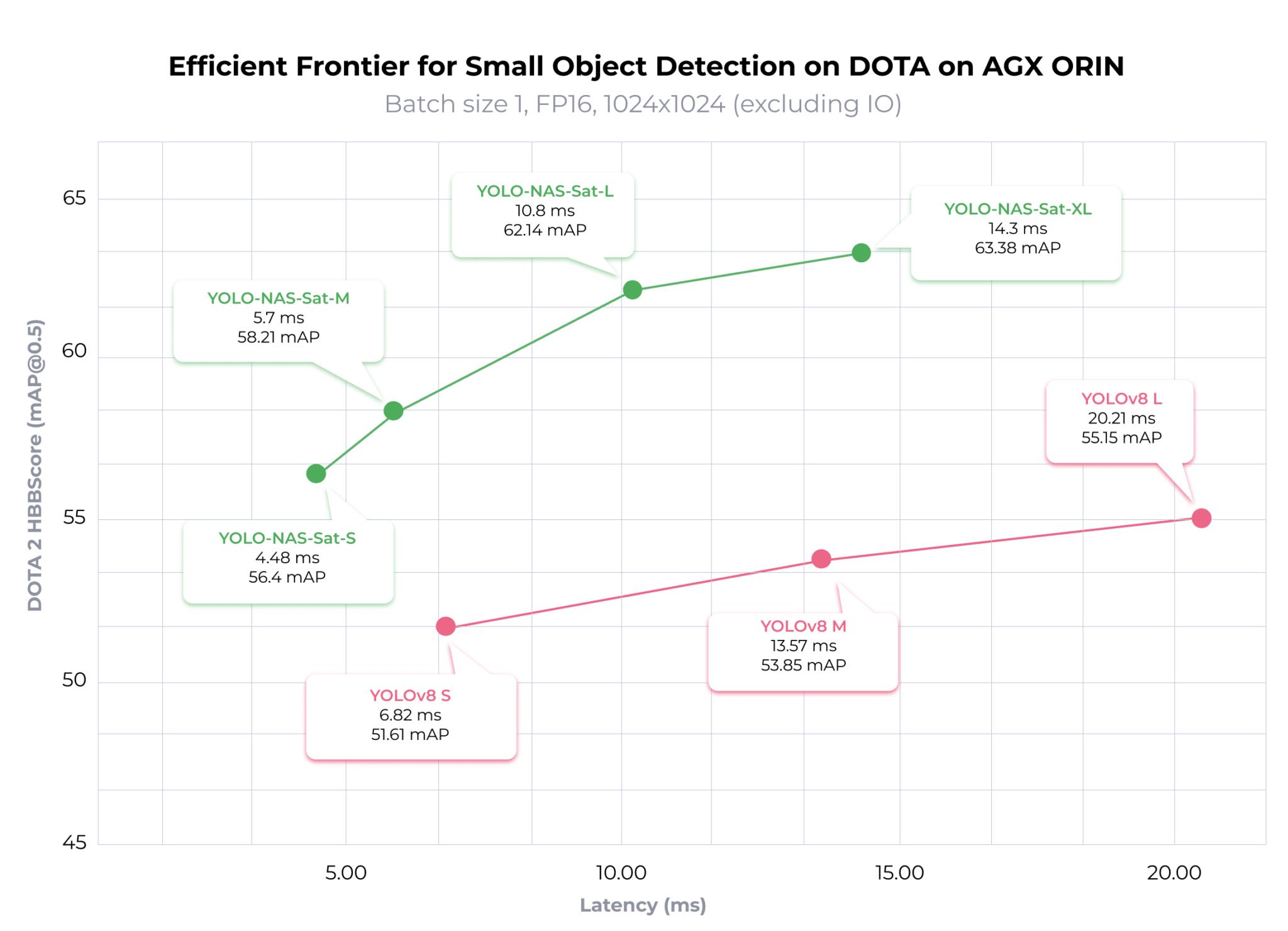

YOLO-NAS-Sat sets itself apart by delivering an exceptional accuracy-latency trade-off, outperforming established models like YOLOv8 in small object detection. For instance, when evaluated on the DOTA 2.0 dataset on the NVIDIA Jetson AGX Orin with FP16 precision, YOLO-NAS-Sat L achieves a 2.02x lower latency and a 6.99 higher mAP than YOLOv8 L.

YOLO-NAS-Sat’s superior performance is attributable to its innovative architecture, generated by AutoNAC, Deci’s Neural Architecture Search engine.

If your application requires small object detection on edge devices, YOLO-NAS-Sat provides an off-the-shelf solution that can significantly accelerate your development process. Its specialized design and fine-tuning for small object detection ensure rapid deployment and optimal performance.

Continue reading to explore YOLO-NAS-Sat’s architectural design, training process, and performance.

YOLO-NAS-Sat’s Specialized Architecture

The Need for A Specialized Architecture for Small Object Detection

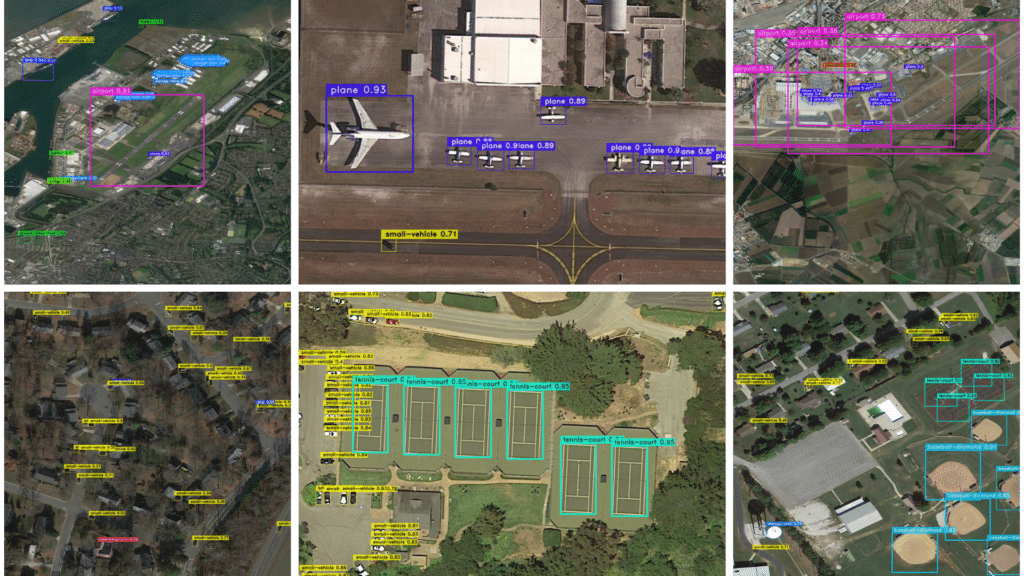

Small object detection is the task of detecting objects that take up minimal space in an image, sometimes a mere handful of pixels. This task inherently involves significant challenges, including scant detail for recognition and increased vulnerability to background noise. To meet these challenges, a specialized architecture is required – one that captures and retains small, local details in an image.

From YOLO-NAS to YOLO-NAS-Sat

YOLO-NAS-Sat is based on the YOLO-NAS architecture, which is renowned for its robust performance in standard object detection tasks. The macro-level architecture remains consistent with YOLO-NAS, but we’ve made strategic modifications to better address small object detection challenges:

- Backbone Modifications: The number of layers in the backbone has been adjusted to optimize the processing of small objects, enhancing the model’s ability to discern minute details.

- Revamped Neck Design: A newly designed neck, inspired by the U-Net-style decoder, focuses on retaining more fine-grained details. This adaptation is crucial for preserving the high-resolution feature maps that are vital for detecting small objects.

- Context Module Adjustment: The original “context” module in YOLO-NAS, intended to capture global context, has been replaced. We discovered that for tasks like processing large satellite images, a local receptive window is more beneficial, improving both accuracy and network latency.

These architectural innovations ensure that YOLO-NAS-Sat is uniquely equipped to handle the intricacies of small object detection, offering an unparalleled accuracy-speed trade-off.

Leveraging AutoNAC for Architecture Optimization

At the heart of YOLO-NAS-Sat’s development is Deci’s AutoNAC, an advanced Neural Architecture Search engine. AutoNAC’s efficiency in navigating the vast search space of potential architectures allows us to tailor models specifically to the requirements of tasks, datasets, and hardware. YOLO-NAS-Sat is part of a broader family of highly efficient foundation models, including YOLO-NAS for standard object detection, YOLO-NAS Pose for pose estimation, and DeciSegs for semantic segmentation, all generated through AutoNAC.

For YOLO-NAS-Sat, we utilized AutoNAC to identify a neural network within the pre-defined search space that achieves our target latency while maximizing accuracy.
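The objective described above, maximizing accuracy subject to a latency target, can be illustrated with a toy selection over an explicit list of candidates. This is only a sketch of the constraint, not AutoNAC itself, whose search procedure is not described here; the `Candidate` class, the `best_under_latency` helper, and every number in the example are hypothetical placeholders rather than measurements.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Candidate:
    """A hypothetical architecture with already-measured latency and accuracy."""
    name: str
    latency_ms: float
    accuracy: float


def best_under_latency(candidates: Iterable[Candidate],
                       target_latency_ms: float) -> Optional[Candidate]:
    """Return the most accurate candidate that meets the latency target.

    Returns None when no candidate is fast enough.
    """
    feasible = [c for c in candidates if c.latency_ms <= target_latency_ms]
    return max(feasible, key=lambda c: c.accuracy, default=None)


if __name__ == "__main__":
    # Placeholder values for illustration only; not benchmark results.
    pool = [
        Candidate("arch_a", latency_ms=8.0, accuracy=0.61),
        Candidate("arch_b", latency_ms=12.0, accuracy=0.66),
        Candidate("arch_c", latency_ms=20.0, accuracy=0.70),
    ]
    print(best_under_latency(pool, target_latency_ms=15.0))
```

A real search engine would explore a far larger space without enumerating it, but the selection criterion has the same shape: filter by the hardware latency budget, then rank by accuracy.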

YOLO-NAS-Sat’s Training

We trained YOLO-NAS-Sat from scratch on the COCO dataset, followed by fine-tuning on the DOTA 2.0 dataset. The DOTA 2.0 dataset is an extensive collection of aerial images designed for object detection and analysis, featuring diverse objects across multiple categories. For the fine-tuning phase, we segmented the input scenes into 1024×1024 tiles, employing a 512px step for comprehensive coverage. Additionally, we scaled each scene to 75% and 50% of its original size to enhance detection robustness across various scales.
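As a rough sketch of the tiling scheme described above, the following computes 1024×1024 tile windows with a 512px step and the scene sizes at 100%, 75%, and 50% scale. The function names are illustrative, and the border handling (adding one last tile flush with the edge when the stride leaves a gap) is an assumption; the text does not say how scene borders were treated.

```python
from typing import List, Tuple


def tile_origins(length: int, tile: int = 1024, step: int = 512) -> List[int]:
    """Start offsets along one axis so that tiles cover the range [0, length)."""
    if length <= tile:
        # Scenes smaller than a tile would need padding, which is not shown here.
        return [0]
    origins = list(range(0, length - tile + 1, step))
    # Assumption: add a final tile flush with the border if a gap remains.
    if origins[-1] + tile < length:
        origins.append(length - tile)
    return origins


def tile_boxes(width: int, height: int, tile: int = 1024,
               step: int = 512) -> List[Tuple[int, int, int, int]]:
    """Tile windows as (x_min, y_min, x_max, y_max), in row-major order."""
    return [
        (x, y, x + tile, y + tile)
        for y in tile_origins(height, tile, step)
        for x in tile_origins(width, tile, step)
    ]


def scaled_sizes(width: int, height: int,
                 scales: Tuple[float, ...] = (1.0, 0.75, 0.5)) -> List[Tuple[int, int]]:
    """Scene sizes after rescaling to each factor in `scales`."""
    return [(round(width * s), round(height * s)) for s in scales]


if __name__ == "__main__":
    for w, h in scaled_sizes(4000, 3000):
        print((w, h), len(tile_boxes(w, h)), "tiles")
```

With a 512px step, neighboring tiles overlap by half, so an object cut by one tile boundary usually appears whole in an adjacent tile.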

During the training process, we extracted random 640×640 crops from these tiles to introduce variability and enhance model resilience. For the validation phase, we divided the input scenes into uniform 1024×1024 tiles.
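The random-crop step can be sketched as follows: pick a 640×640 window inside a 1024×1024 tile, then clip and shift the annotations into crop coordinates. This is a minimal illustration under stated assumptions, not the actual training pipeline: the helper names are hypothetical, boxes are assumed to be axis-aligned `(x_min, y_min, x_max, y_max)` tuples, and any rule for dropping heavily truncated boxes is left out.

```python
import random
from typing import List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def random_crop_window(tile_size: int = 1024, crop: int = 640,
                       rng: Optional[random.Random] = None) -> Tuple[int, int, int, int]:
    """Pick a random crop window that lies fully inside a square tile."""
    rng = rng or random.Random()
    # randint is inclusive on both ends, so the crop can touch the far border.
    x0 = rng.randint(0, tile_size - crop)
    y0 = rng.randint(0, tile_size - crop)
    return (x0, y0, x0 + crop, y0 + crop)


def boxes_in_crop(boxes: List[Box], window: Tuple[int, int, int, int]) -> List[Box]:
    """Clip boxes to the window and express them in crop coordinates."""
    wx0, wy0, wx1, wy1 = window
    kept = []
    for x0, y0, x1, y1 in boxes:
        cx0, cy0 = max(x0, wx0), max(y0, wy0)
        cx1, cy1 = min(x1, wx1), min(y1, wy1)
        # Keep only boxes that still have positive area inside the window.
        if cx1 > cx0 and cy1 > cy0:
            kept.append((cx0 - wx0, cy0 - wy0, cx1 - wx0, cy1 - wy0))
    return kept


if __name__ == "__main__":
    window = random_crop_window(rng=random.Random(0))
    print(window, boxes_in_crop([(500.0, 500.0, 512.0, 510.0)], window))
```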

YOLO-NAS-Sat’s State-of-the-Art Performance

Accuracy Compared to Fine-tuned YOLOv8

To benchmark YOLO-NAS-Sat against YOLOv8, we subjected YOLOv8 to the same fine-tuning process previously outlined for YOLO-NAS-Sat.

Comparing the accuracy of the fine-tuned models, we see that each YOLO-NAS-Sat variant has a higher mAP@0.5 score than the corresponding YOLOv8 variant. YOLO-NAS-Sat S has a 4.79% higher mAP than YOLOv8 S, YOLO-NAS-Sat M has a 4.36% higher mAP than YOLOv8 M, and YOLO-NAS-Sat L is 6.99% more accurate than YOLOv8 L.

Latency Compared to YOLOv8

While higher accuracy typically demands a trade-off in speed, the fine-tuned YOLO-NAS-Sat models break this convention. On the NVIDIA Jetson AGX Orin with TensorRT FP16 precision, each YOLO-NAS-Sat variant achieves lower latency than the corresponding YOLOv8 variant.

As can be seen in the above graph, YOLO-NAS-Sat S outpaces its YOLOv8 counterpart by 1.52x, YOLO-NAS-Sat M by 2.38x, and YOLO-NAS-Sat L by 2.02x. Notably, YOLO-NAS-Sat XL is not only more accurate but also 1.42x faster than YOLOv8 L.

Adopting YOLO-NAS-Sat for small object detection not only enhances accuracy but also substantially improves processing speeds. Such improvements are especially valuable in real-time applications, where rapid data processing is paramount.

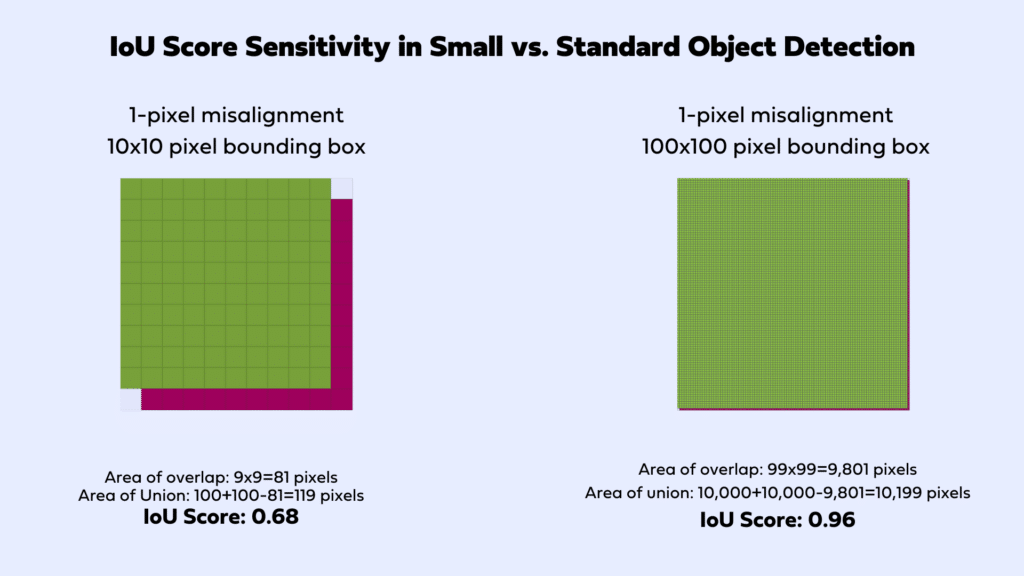

Why We Chose mAP@0.5 for Small Object Detection Evaluation

In assessing these small object detection models, we opted for the mAP@0.5 metric over the broader mAP@0.5:0.95. The commonly adopted mAP@0.5:0.95, which evaluates performance across IOU thresholds from 0.5 to 0.95, may not accurately reflect the nuances of small object detection. For a small object, even a 1 or 2-pixel shift between the predicted and actual bounding boxes can drop the IOU score from perfect to about 0.8, which adversely affects the overall mAP score.

Considering the inherent sensitivities of the IOU metric when detecting small objects, it becomes imperative to adopt an alternative evaluation strategy. Utilizing a singular IOU threshold, such as 0.5, presents a viable solution by diminishing the metric’s vulnerability to small prediction errors, thereby offering a more stable and reliable measure of model performance in the context of small object detection.
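The sensitivity described above is easy to check with a small worked example. The sketch below assumes axis-aligned square boxes and a purely horizontal shift of the prediction, and counts how many of the ten IoU thresholds from 0.5 to 0.95 (in steps of 0.05) a prediction still satisfies. The function names are illustrative.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def shifted_iou(size: float, shift: float) -> float:
    """IoU between a size x size box and the same box shifted right by `shift`."""
    ground_truth = (0.0, 0.0, size, size)
    prediction = (shift, 0.0, size + shift, size)
    return iou(ground_truth, prediction)


def thresholds_passed(value: float) -> int:
    """Number of thresholds in 0.50, 0.55, ..., 0.95 that `value` meets."""
    thresholds = [(50 + 5 * i) / 100 for i in range(10)]
    return sum(value >= t for t in thresholds)


if __name__ == "__main__":
    for size in (10, 100):
        for shift in (1, 2):
            value = shifted_iou(size, shift)
            print(f"{size}px box, {shift}px shift: IoU={value:.3f}, "
                  f"passes {thresholds_passed(value)}/10 thresholds")
```

Under these assumptions, a 1-pixel shift on a 10×10 box gives an IoU of about 0.82 (90/110), which still counts as a match at a threshold of 0.5 but fails the three strictest thresholds. The same shift on a 100×100 box leaves the IoU near 0.98, which is why the averaged metric penalizes small objects disproportionately.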

YOLO-NAS-Sat’s Potential: Beyond Aerial Images

While YOLO-NAS-Sat excels in satellite imagery analysis, its specialized architecture is also ideally tailored for a wide range of applications involving other types of images:

- Satellite Images: Used for environmental monitoring, urban development tracking, agricultural assessment, and security applications.

- Microscopic Images: Essential in medical research for detecting cells, bacteria, and other microorganisms, as well as in material science.

- Radar Images: Applied in meteorology for weather prediction, in aviation for aircraft navigation, and in maritime for ship detection.

- Thermal Images: Used in a variety of fields, including security, wildlife monitoring, and industrial maintenance, as well as in building and energy audits. The unique information that thermal images provide, especially in night-time or low-visibility conditions, explains their importance and widespread use.

Gaining Access to YOLO-NAS-Sat

YOLO-NAS-Sat is ready to revolutionize your team’s small object detection projects. If you’re looking to leverage this model’s cutting-edge performance for your applications, we invite you to connect with our experts. You’ll learn how you can use Deci’s platform and foundation models to streamline your development process, achieving faster time-to-market and unlocking new possibilities in your computer vision projects.