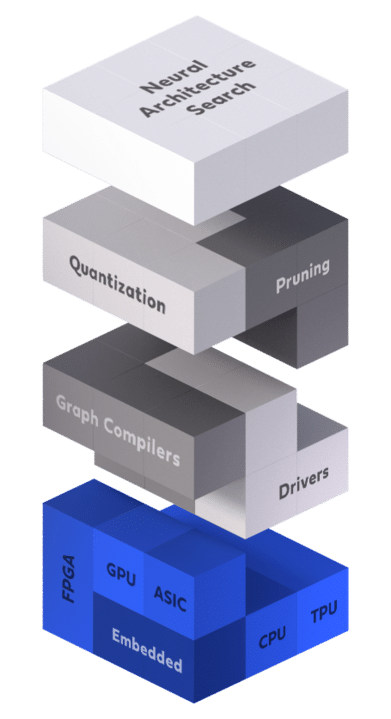

In deep learning development, the inference stack is the set of components that influence inference computation and performance. Also called the acceleration stack, it can be divided into three levels: hardware, software, and algorithmic. Optimizing several of these levels in conjunction can yield a significant speed-up. Examining the inference stack helps more AI teams deploy deep learning to production successfully, closing the gap between the success of neural networks and their ability to handle real-world scenarios.

Here is a deep dive into the inference stack.