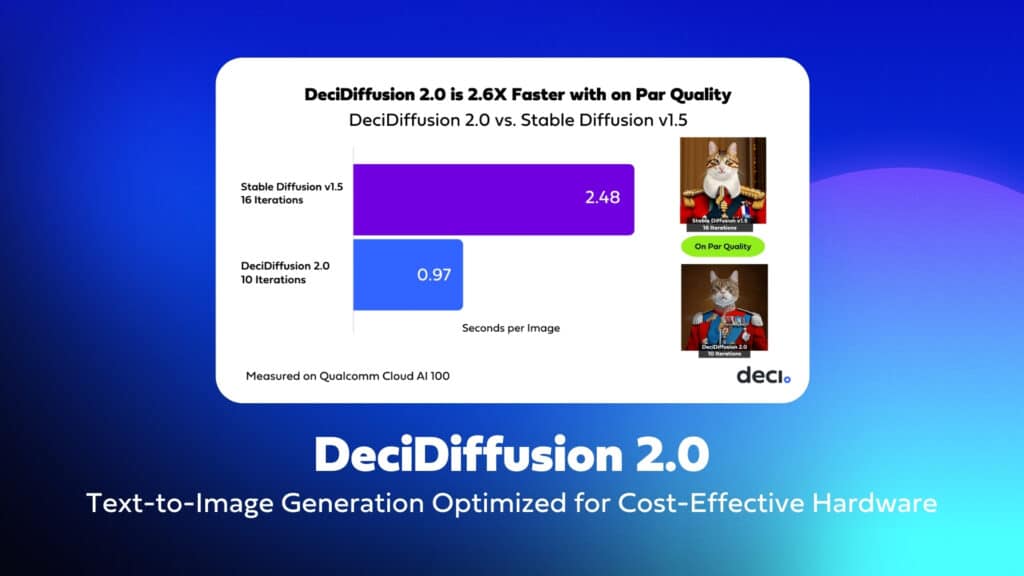

Deci proudly unveils DeciDiffusion 2.0, our latest ultra-efficient text-to-image latent diffusion model. Generating images in under a second, this 732 million-parameter model is 2.6 times faster than Stable Diffusion v1.5. Building on the success of its larger predecessor, DeciDiffusion 1.0, this latest iteration combines exceptional speed with high image quality. It’s distinguished by a novel scheduling algorithm, cutting-edge training methodologies, and a unique architecture generated by AutoNAC, Deci’s advanced Neural Architecture Search engine, and designed to run optimally on cost-efficient hardware.

The launch of DeciDiffusion 2.0 marks a significant milestone in text-to-image technology. Its improved speed not only elevates the user experience but also opens doors to affordable deployment. This model has been optimized for the Qualcomm Cloud AI 100, which enables substantial savings combined with top-notch inference performance.

Dive deeper into this blog to uncover the architecture and advanced training techniques behind DeciDiffusion 2.0.

From Text to Image in the Blink of an Eye

DeciDiffusion 2.0 presents significant advancements over Stable Diffusion v1.5:

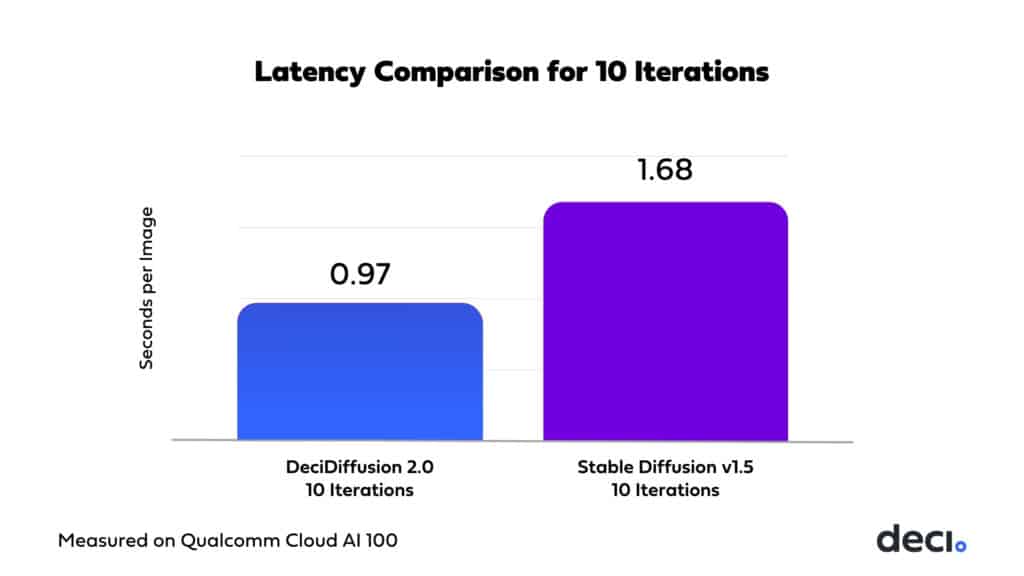

Speed per Iteration

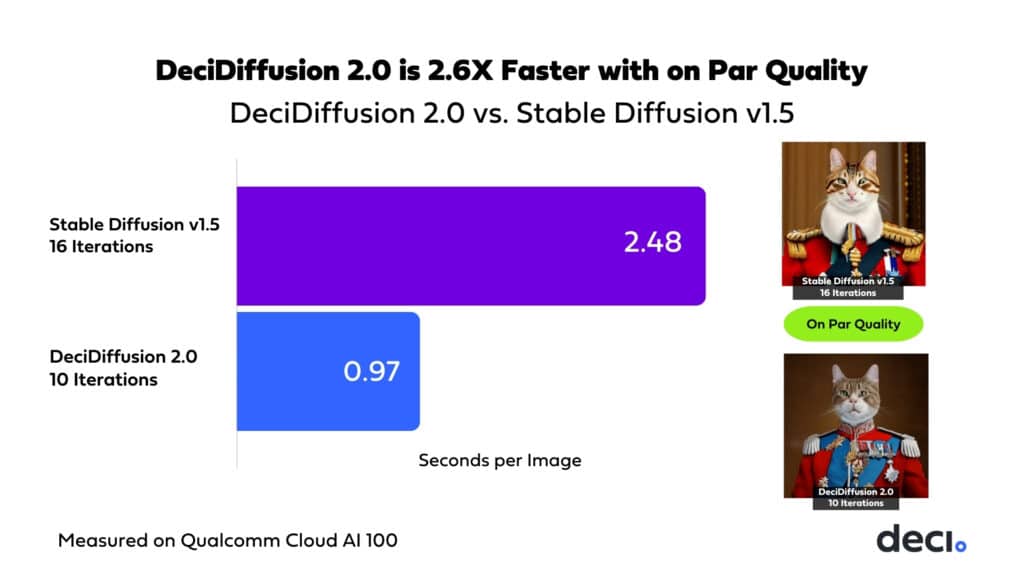

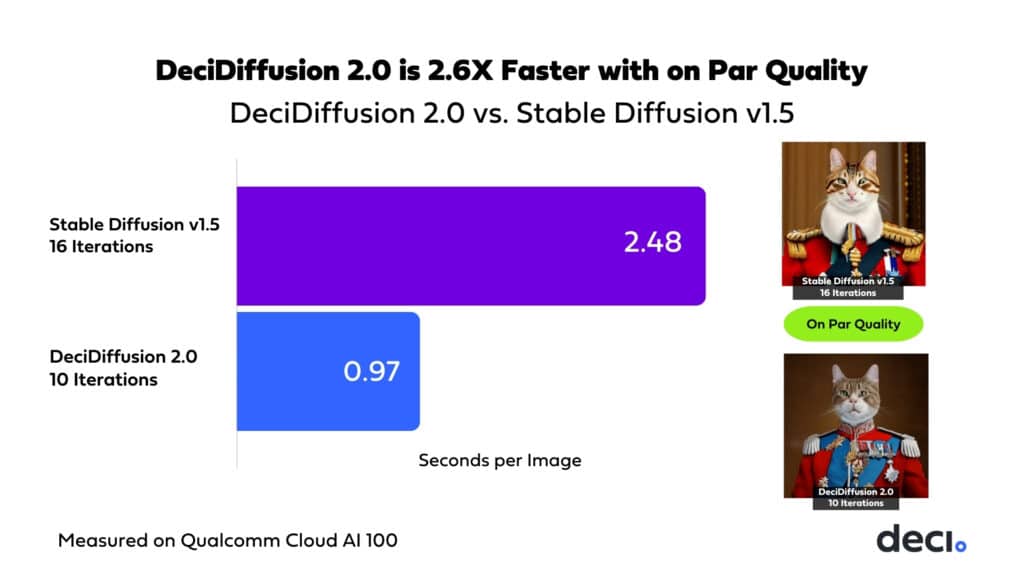

When comparing the two models on Qualcomm’s Cloud AI 100 with a fixed number of iterations (for example, 10), DeciDiffusion 2.0 completes these iterations 1.73 times faster than Stable Diffusion v1.5.

Same Quality in Fewer Iterations

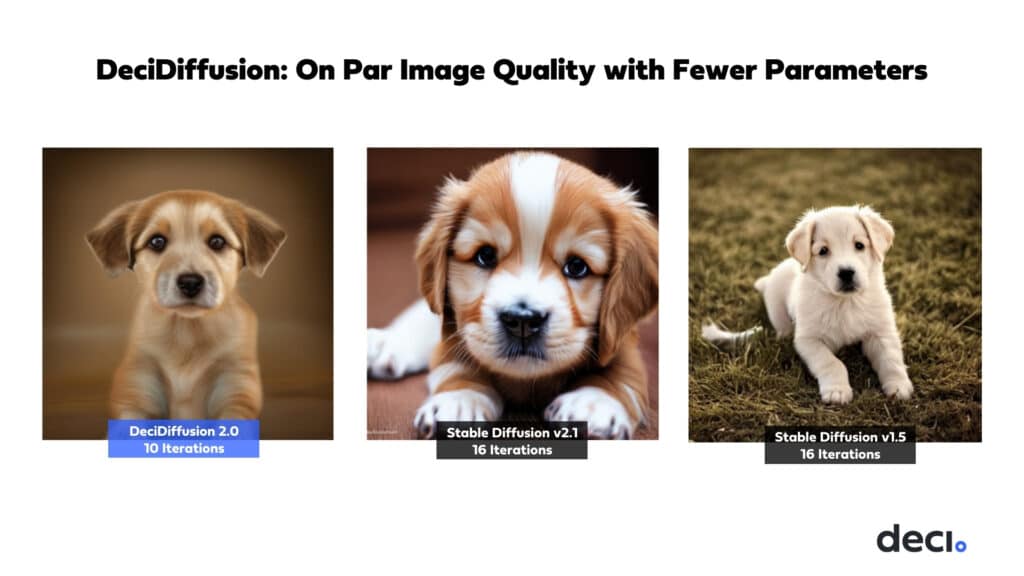

Moreover, DeciDiffusion 2.0 is also more sample-efficient: it reaches comparable image quality in fewer iterations. Comparing the number of iterations each model requires to reach a given quality level, DeciDiffusion 2.0 delivers high-quality images in 40% fewer iterations.

The following graph illustrates the combined impact of these advantages: DeciDiffusion 2.0 produces quality images on par with Stable Diffusion v1.5, but 2.6 times faster.
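
The two advantages compound. As a rough check using the figures above (1.73x faster per iteration, 40% fewer iterations), the speedup of the denoising loop alone is the per-iteration speedup multiplied by the ratio of iteration counts. This sketch counts only U-Net iterations and treats the rounded 40% as exact, so it is an estimate rather than a reproduction of the measured result; per-image work that does not shrink with fewer iterations, such as text encoding and VAE decoding, is one plausible reason the reported end-to-end speedup of 2.6x is lower. The function name is illustrative.

```python
def denoising_loop_speedup(per_iteration_speedup: float, fewer_iterations: float) -> float:
    """Loop-only speedup (N_sd * t_sd) / (N_dd * t_dd); ignores fixed per-image costs."""
    iteration_ratio = 1.0 / (1.0 - fewer_iterations)  # N_sd / N_dd
    return per_iteration_speedup * iteration_ratio


loop_only = denoising_loop_speedup(per_iteration_speedup=1.73, fewer_iterations=0.40)
print(f"{loop_only:.2f}x")  # 2.88x for the denoising loop alone
```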

Additionally, DeciDiffusion 2.0’s image quality at 10 iterations is on par with that of the 1.29 billion-parameter Stable Diffusion v2.1 at 16 iterations.

DeciDiffusion 2.0’s Architectural Advantage: A Smaller, Faster U-Net-NAS

Like DeciDiffusion 1.0 and Stable Diffusion, DeciDiffusion 2.0 is a latent diffusion model. DeciDiffusion 1.0’s main innovation was replacing the traditional U-Net component with a more streamlined variant, U-Net-NAS. DeciDiffusion 2.0 takes this one step further with an even smaller and faster U-Net component. Optimized for cost-efficient hardware, DeciDiffusion 2.0’s U-Net-NAS has just 525 million parameters, compared with 820 million in DeciDiffusion 1.0’s U-Net-NAS.
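
A quick calculation with the parameter counts quoted in this post puts the change in proportion. It assumes the 732 million total covers the whole pipeline (U-Net plus the other components described below); the variable names are illustrative.

```python
# Parameter counts quoted in the post.
TOTAL_PARAMS_V2 = 732_000_000  # DeciDiffusion 2.0, whole model (assumed to include all components)
UNET_PARAMS_V2 = 525_000_000   # DeciDiffusion 2.0 U-Net-NAS
UNET_PARAMS_V1 = 820_000_000   # DeciDiffusion 1.0 U-Net-NAS

unet_reduction = 1 - UNET_PARAMS_V2 / UNET_PARAMS_V1  # share of U-Net parameters removed
unet_share = UNET_PARAMS_V2 / TOTAL_PARAMS_V2         # U-Net's share of the whole model
non_unet_params = TOTAL_PARAMS_V2 - UNET_PARAMS_V2    # everything outside the U-Net

print(f"U-Net-NAS is {unet_reduction:.0%} smaller than in v1.0")     # 36%
print(f"U-Net-NAS holds {unet_share:.0%} of the parameters")         # 72%
print(f"Other components: {non_unet_params / 1e6:.0f}M parameters")  # 207M
```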

The U-Net is the iterative core of a latent diffusion model: an encoder-decoder network that is applied repeatedly to progressively remove noise from the latent image. Cross-attention layers condition its output on the text embeddings derived from the given description.

Latent diffusion models have additional components: a Text Encoder, which transforms textual prompts into the latent text embeddings used by the U-Net, and a Variational Autoencoder, whose decoder converts the final latent representation into the output image during inference. However, the U-Net is the core and most computationally demanding part of a latent diffusion model. Because it runs at every denoising iteration, its cost is multiplied by the number of steps, so reducing its size is crucial to reducing the computational cost of latent diffusion.
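
The control flow below sketches why the U-Net dominates inference cost: the text encoder and VAE decoder run once per image, while the U-Net runs once per step. It is a minimal sketch with injected components; the function name, signatures, and toy stand-ins are hypothetical and only count calls, they do not implement real networks.

```python
from typing import Callable, Dict, List

Vector = List[float]


def latent_diffusion_generate(
    prompt: str,
    num_steps: int,
    text_encoder: Callable[[str], Vector],
    unet: Callable[[Vector, int, Vector], Vector],
    scheduler_step: Callable[[Vector, Vector, int], Vector],
    vae_decode: Callable[[Vector], Vector],
    init_latent: Vector,
) -> Vector:
    """Control flow of latent diffusion inference; all components are injected."""
    text_emb = text_encoder(prompt)                        # once per image
    latent = init_latent                                   # random noise in a real pipeline
    for step in reversed(range(num_steps)):                # num_steps iterations
        noise_pred = unet(latent, step, text_emb)          # the expensive, per-step call
        latent = scheduler_step(latent, noise_pred, step)  # cheap update rule
    return vae_decode(latent)                              # once per image


# Hypothetical toy stand-ins that only count how often each component runs.
calls: Dict[str, int] = {"text_encoder": 0, "unet": 0, "vae_decode": 0}


def toy_text_encoder(prompt: str) -> Vector:
    calls["text_encoder"] += 1
    return [float(len(prompt))]


def toy_unet(latent: Vector, step: int, text_emb: Vector) -> Vector:
    calls["unet"] += 1
    return [0.5 * v for v in latent]  # pretend half of each value is noise


def toy_scheduler_step(latent: Vector, noise_pred: Vector, step: int) -> Vector:
    return [v - n for v, n in zip(latent, noise_pred)]


def toy_vae_decode(latent: Vector) -> Vector:
    calls["vae_decode"] += 1
    return latent


image = latent_diffusion_generate(
    "a photo of a red fox", 10, toy_text_encoder, toy_unet,
    toy_scheduler_step, toy_vae_decode, init_latent=[1.0, -2.0],
)
print(calls)  # {'text_encoder': 1, 'unet': 10, 'vae_decode': 1}
```

Halving `num_steps` halves the U-Net calls while the other two counts stay at one, which is why both a cheaper U-Net and fewer steps pay off directly.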

The Role of AutoNAC in Optimizing DeciDiffusion’s Architecture for Cost-Efficient Hardware

DeciDiffusion 2.0’s architecture was generated using AutoNAC, Deci’s specialized NAS-powered engine. While traditional Neural Architecture Search approaches show potential, they often demand significant computational resources. AutoNAC overcomes this limitation by making the search process more efficient. It has played a key role in developing a variety of efficient foundation models, including DeciLM-7B, one of the top text generation models in its class in terms of both accuracy and throughput; the code generation LLMs DeciCoder-1B and DeciCoder-6B; and the state-of-the-art object detection and pose estimation models YOLO-NAS and YOLO-NAS Pose. For DeciDiffusion, AutoNAC optimized the U-Net component for cost-efficient hardware, such as Qualcomm’s Cloud AI 100.

DeciDiffusion 2.0’s Cutting-Edge Scheduler and Training

The model features an optimized scheduler and was fine-tuned to arrive at quality images in fewer iterations or steps.

Optimized Scheduler for Sample Efficiency

DeciDiffusion 2.0 marks a significant advancement over previous latent diffusion models, particularly in terms of sample efficiency. This means it can produce high-quality images with fewer diffusion timesteps during the inference process. To attain such efficiency, Deci has created an optimized scheduler, SqueezedDPM++, which effectively cuts down the number of steps needed to generate a quality image from 16 to just 10.
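
To make "fewer timesteps" concrete: the sampler calls the model once per step and uses each prediction to move the latent toward a clean sample. SqueezedDPM++ itself is not published in this post, so the sketch below instead implements the standard DPM-Solver++(2M) multistep update from the DPM++ family it belongs to. The cosine noise schedule, the time grid, the function names, and the oracle denoiser in the usage example are all assumptions for illustration; a real pipeline would call the U-Net and convert its output to a clean-sample (x0) estimate.

```python
import numpy as np


def alpha(t):
    """Signal scale of an assumed variance-preserving cosine schedule, t in (0, 1)."""
    return np.cos(0.5 * np.pi * t)


def sigma(t):
    """Noise scale of the same schedule; alpha**2 + sigma**2 == 1."""
    return np.sin(0.5 * np.pi * t)


def half_log_snr(t):
    """lambda(t) = log(alpha / sigma); increases as t decreases."""
    return np.log(alpha(t) / sigma(t))


def dpmpp_2m_sample(x0_model, x_T, num_steps, t_max=0.999, t_min=0.001):
    """DPM-Solver++(2M): one model call per step, reusing the previous prediction."""
    ts = np.linspace(t_max, t_min, num_steps + 1)
    x = x_T
    prev_x0, prev_h = None, None
    for t_prev, t_cur in zip(ts[:-1], ts[1:]):
        x0 = x0_model(x, t_prev)  # data (clean-sample) prediction
        h = half_log_snr(t_cur) - half_log_snr(t_prev)
        if prev_x0 is None:
            d = x0  # first step: first-order update
        else:
            r = prev_h / h
            d = (1 + 1 / (2 * r)) * x0 - (1 / (2 * r)) * prev_x0  # second-order multistep term
        x = (sigma(t_cur) / sigma(t_prev)) * x - alpha(t_cur) * np.expm1(-h) * d
        prev_x0, prev_h = x0, h
    return x


target = np.array([0.3, -0.7])  # hypothetical "clean" sample
model_calls = []


def oracle_x0(x, t):
    """Stand-in denoiser that always predicts the true clean sample."""
    model_calls.append(t)
    return target


rng = np.random.default_rng(0)
sample = dpmpp_2m_sample(oracle_x0, rng.standard_normal(2), num_steps=10)
print(len(model_calls))  # 10 model calls for 10 steps
```

With an oracle that always returns the true clean sample, each update lands exactly on that sample's noisy trajectory, so the output ends within the final, tiny noise level of the target. The second-order term reuses the previous prediction, which is how multistep solvers gain accuracy without extra model calls.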

Optimized Training for Sample Efficiency

Using an optimized DPM++ scheduler, Deci fine-tuned the model to perform with a fixed number of timesteps. The result is a model that converges more rapidly, because it is tailored to the specific steps employed during its training.
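
The exact fine-tuning recipe is not spelled out here. One simple way to tailor a model to a fixed step count, shown purely as a hypothetical illustration and not as Deci’s method, is to draw training timesteps only from the grid the sampler will visit at inference rather than uniformly from the whole noise schedule. The 1,000-step schedule, the even spacing, and the function names are assumptions.

```python
import numpy as np

NUM_TRAIN_TIMESTEPS = 1000  # assumed length of the training noise schedule


def inference_timesteps(num_steps, num_train_timesteps=NUM_TRAIN_TIMESTEPS):
    """Evenly spaced discrete timesteps, noisiest first (hypothetical spacing)."""
    grid = np.linspace(0, num_train_timesteps - 1, num_steps)
    return np.round(grid).astype(int)[::-1]


def sample_train_timesteps(rng, batch_size, num_steps=None):
    """Uniform over all timesteps (standard training) or only the inference grid (step-specific)."""
    if num_steps is None:
        return rng.integers(0, NUM_TRAIN_TIMESTEPS, size=batch_size)
    return rng.choice(inference_timesteps(num_steps), size=batch_size)


rng = np.random.default_rng(0)
grid = inference_timesteps(10)
batch_t = sample_train_timesteps(rng, batch_size=8, num_steps=10)
print(grid.tolist())  # [999, 888, 777, 666, 555, 444, 333, 222, 111, 0]
```

Restricting the training distribution this way concentrates the model’s capacity on the noise levels it will actually see, at the cost of generality if a different step count is used later.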

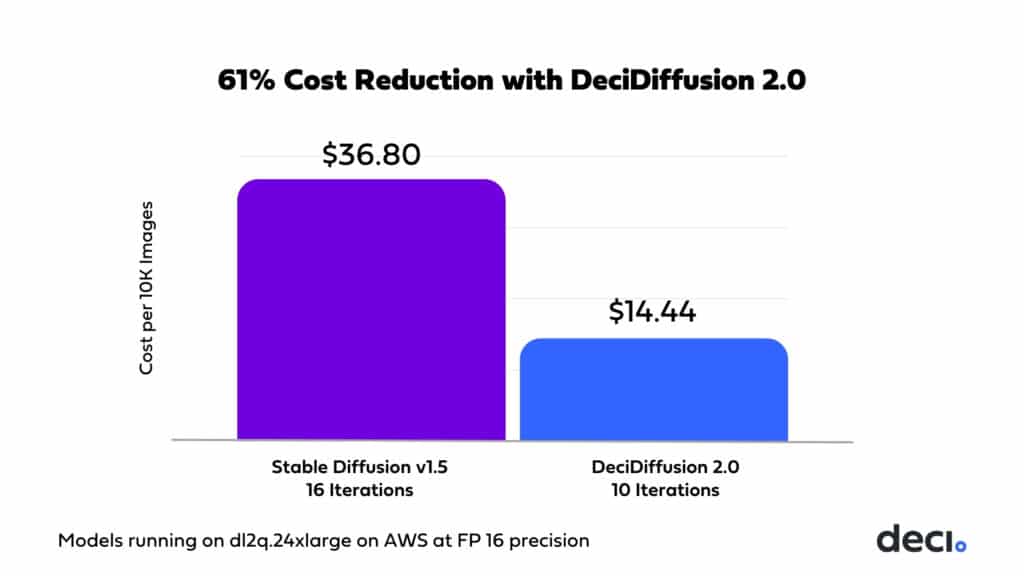

Cost Implications of DeciDiffusion 2.0’s Efficiency

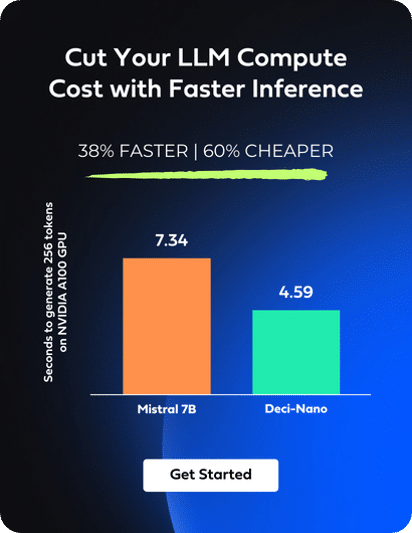

We’ve seen that DeciDiffusion 2.0’s reduced latency is the result of its optimized architecture and scheduler. A direct implication is a significant 61% reduction in inference cost.
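
Assuming both models run on the same instance at the same hourly price, cost per image is proportional to latency, so a 2.6x speedup cuts the cost per image by 1 - 1/2.6 ≈ 61.5%, consistent with the 61% figure. The hourly-billing model and function names below are illustrative assumptions, not a published pricing formula.

```python
def cost_per_image(latency_s: float, usd_per_hour: float) -> float:
    """Cost of one image on an instance billed by the hour (hypothetical pricing model)."""
    return usd_per_hour * latency_s / 3600.0


def cost_reduction(speedup: float) -> float:
    """Fractional saving when the same instance produces images `speedup` times faster."""
    return 1.0 - 1.0 / speedup


saving = cost_reduction(2.6)
print(f"{saving:.1%}")  # 61.5%
```

Because the hourly price cancels out, the saving depends only on the speedup, which is why latency gains on a fixed instance translate directly into cost savings.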

DeciDiffusion 2.0’s Permissive License

Aligned with our dedication to advancing the adoption of efficient models, Deci is excited to present DeciDiffusion 2.0 to the AI community. Our goal is to maintain its ease of access and user-friendliness, promoting an environment rich in learning and innovation. DeciDiffusion 2.0 is available for complimentary download. We have released the model under the CreativeML Open RAIL++-M License. We invite researchers, developers, and enthusiasts to utilize this cutting-edge foundational model in their projects, driving forward the field of AI.

Conclusion

Thanks to its efficient architecture and advanced training methods, DeciDiffusion 2.0 achieves exceptional generation speed and image quality. Designed to run optimally on affordable hardware, such as Qualcomm’s Cloud AI 100, DeciDiffusion 2.0 opens up new possibilities for practical applications, enabling broader adoption and innovative uses of AI in various industries.

- Get Started: Access and download the model directly from its Hugging Face repository.

- Follow a step-by-step tutorial to implement the model on Qualcomm hardware.

- Run DeciDiffusion on AWS DL2q instances using the Qualcomm Cloud AI Platform SDK.

- Experience in Action: Engage with the model’s capabilities through our interactive demo.

- Explore Further: Examine our comprehensive notebook to learn how to leverage the full strength of the model.

If you’re interested in trying out our GenAI models firsthand, we encourage you to sign up for a free trial of our API.