Before designing a model for a computer vision task, it’s crucial to examine the dataset for variations in object sizes. Knowing whether your images contain a mix of small and large objects or primarily one or the other can guide you in selecting a suitable model architecture.
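One way to examine a dataset for this is to bucket its bounding boxes by pixel area. The sketch below assumes COCO-style thresholds (small below 32², large from 96² upward), and the example boxes are hypothetical. Both the thresholds and the handling of boxes that fall exactly on a boundary are choices you may want to adapt to your own image resolution.

```python
from collections import Counter

# COCO-style area thresholds in pixels^2 (an assumption; adjust as needed).
SMALL_MAX_AREA = 32 ** 2
MEDIUM_MAX_AREA = 96 ** 2


def size_bucket(width, height):
    """Classify one bounding box by its pixel area."""
    area = width * height
    if area < SMALL_MAX_AREA:
        return "small"
    if area < MEDIUM_MAX_AREA:
        return "medium"
    return "large"


def size_distribution(boxes):
    """Return the fraction of boxes in each size bucket.

    `boxes` is an iterable of (width, height) pairs in pixels.
    """
    counts = Counter(size_bucket(w, h) for w, h in boxes)
    total = sum(counts.values())
    return {name: (counts[name] / total if total else 0.0)
            for name in ("small", "medium", "large")}


# Hypothetical boxes (width, height), for illustration only.
example_boxes = [(10, 12), (20, 20), (40, 50), (120, 200)]
distribution = size_distribution(example_boxes)
print(distribution)
```

A distribution dominated by one bucket suggests a specialised architecture may be enough, while a spread across all three points towards a multi-scale design.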

Understanding the Role of Detection Heads in Object Detection Models

Modern object detection models, such as Single Shot MultiBox Detector (SSD), You Only Look Once (YOLO), and RetinaNet, which is built on a Feature Pyramid Network (FPN), often use multiple detection heads. Each head is applied at a different scale or layer in the network so that objects of different sizes can be detected.

If your dataset predominantly contains small objects, you could, in theory, simplify models like SSD or YOLO by removing the detection heads at scales designed for larger objects, so that the architecture focuses on the levels of the feature pyramid that serve small objects. Going a step further, if your dataset contains extremely small objects, you could consider adjusting an existing head or adding one at a higher-resolution level specifically for them.

Reducing the number of scales at which detection heads are applied can decrease model complexity and memory usage. Meanwhile, adding or adjusting detection heads for specific object sizes might improve the model’s accuracy. However, remember that such modifications may not be straightforward: anchors, label assignment, and post-processing are usually tied to the set of heads, so any change requires careful implementation and validation.
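To get a rough sense of which heads your data would actually use, you can assign each ground-truth box to a pyramid level and count. The sketch below borrows the level-assignment heuristic from the FPN paper, which was originally proposed for assigning regions of interest in two-stage detectors, so treat it only as a proxy for one-stage models. The function names, the hypothetical boxes, and the 10% cut-off are all assumptions made for illustration.

```python
import math
from collections import Counter


def fpn_level(width, height, k0=4, canonical_size=224, k_min=2, k_max=5):
    """Assign a box to a pyramid level using the FPN heuristic:
    k = floor(k0 + log2(sqrt(w * h) / canonical_size)), clamped to [k_min, k_max].
    """
    scale = math.sqrt(width * height)
    level = math.floor(k0 + math.log2(scale / canonical_size))
    return max(k_min, min(k_max, level))


def head_usage(boxes):
    """Fraction of boxes that fall on each pyramid level."""
    counts = Counter(fpn_level(w, h) for w, h in boxes)
    total = len(boxes)
    return {level: counts[level] / total for level in sorted(counts)}


def heads_to_keep(usage, min_fraction=0.1):
    """Levels that receive at least `min_fraction` of the boxes (arbitrary cut-off)."""
    return [level for level, fraction in usage.items() if fraction >= min_fraction]


# Hypothetical dataset: mostly tiny boxes plus one very large box.
boxes = [(16, 16)] * 18 + [(30, 30)] + [(500, 500)]
usage = head_usage(boxes)
print(usage, heads_to_keep(usage))
```

If almost all boxes land on the finest level, the coarser heads are candidates for removal. If many boxes are clamped at the finest level, that may be a hint that an even higher-resolution head is worth trying.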

Skip Connections and Object Sizes

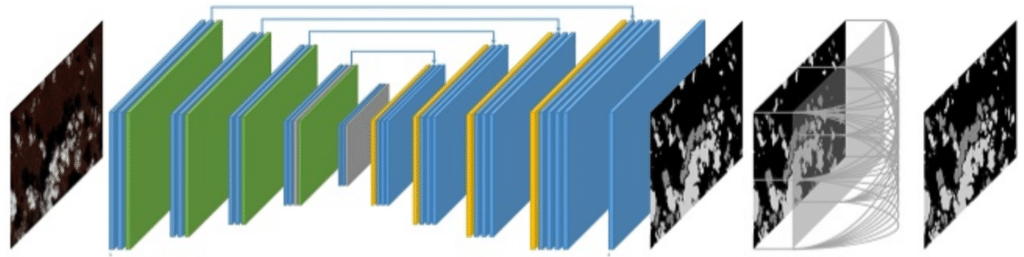

Skip connections, an important architectural element in deep learning models, are pathways that allow the output of one layer to be fed as input directly into a subsequent layer, bypassing one or more intermediate layers in the process. These connections play a crucial role when the task involves working with datasets that contain very small objects or a mix of different object sizes.

The utility of skip connections in these contexts can be attributed to their ability to preserve fine, high-frequency details from the early stages of the model and carry them to the end. As the model processes the data, it often reduces the spatial resolution, which can cause the loss of such fine details. By bypassing these reductions, skip connections ensure that the information about smaller objects and precise object boundaries is retained, enhancing the model’s detection and segmentation performance.
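A toy example can make this concrete. The sketch below is not a real network: it uses a 1D signal with a single-sample spike standing in for a tiny object, average pooling standing in for the downsampling path, and an additive skip connection. All function names are hypothetical, and real models apply the same idea to learned feature maps, often by concatenation rather than addition.

```python
def avg_pool(signal, stride):
    """Downsample by averaging non-overlapping windows of length `stride`."""
    return [sum(signal[i:i + stride]) / stride
            for i in range(0, len(signal), stride)]


def upsample_nearest(signal, factor):
    """Upsample by repeating each value `factor` times."""
    return [value for value in signal for _ in range(factor)]


def with_skip(early, deep_upsampled):
    """Additive skip connection: merge early features into the deep path."""
    return [a + b for a, b in zip(early, deep_upsampled)]


# A "tiny object": one spike in an otherwise flat signal.
early_features = [0.0] * 16
early_features[5] = 1.0

# Deep path: downsample by 4, then upsample back to the input length.
deep = upsample_nearest(avg_pool(early_features, 4), 4)

# Skip path: add the untouched early features back in.
merged = with_skip(early_features, deep)

print(deep[4:8])                  # the spike is smeared over four positions
print(merged.index(max(merged)))  # the exact position survives with the skip
```

Without the skip connection, the deep path only knows that something lies somewhere in positions 4 to 7. With it, the exact location of the spike is still available at the output.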

The length of the skip connection, that is, the number of layers it bypasses, also influences the granularity of the details retained. A longer connection typically starts at an earlier, higher-resolution layer, so it carries finer spatial detail to the later stages of the model (though less semantic information), enabling more precise detection and segmentation of smaller objects.

While the role of skip connections is vital in the context of small objects, their importance extends to tasks that involve objects with intricate, fine details, especially in semantic segmentation. By carrying forward the high-frequency details, skip connections allow the model to maintain the precision necessary for delineating complex object boundaries. This makes them a valuable tool for any computer vision task that requires a high level of detail retention and precision.

Understanding the Role of the Receptive Field

The receptive field in a Convolutional Neural Network (CNN) refers to the region in the input space that a particular CNN feature can access. In other words, it’s the spatial extent of the input data that contributes to a neuron’s activation. Understanding receptive fields is critical in comprehending how a network learns and perceives the structure of the data it’s dealing with.
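For a plain stack of convolution and pooling layers, the receptive field can be computed with a simple recurrence: each layer grows it by (kernel size − 1) times the cumulative stride of the layers before it. The helper below is a minimal sketch under that assumption. It ignores dilation and padding effects, and the layer lists are hypothetical rather than taken from any particular model.

```python
def receptive_field(layers):
    """Receptive field size, in input pixels, of a stack of conv/pool layers.

    `layers` is a list of (kernel_size, stride) pairs, first layer first.
    Recurrence:
        rf_out   = rf_in + (kernel_size - 1) * jump_in
        jump_out = jump_in * stride
    where `jump` is the distance in input pixels between adjacent outputs.
    """
    rf, jump = 1, 1
    for kernel_size, stride in layers:
        rf += (kernel_size - 1) * jump
        jump *= stride
    return rf


# Three 3x3 convolutions without downsampling.
print(receptive_field([(3, 1), (3, 1), (3, 1)]))

# The same three layers, each with stride 2.
print(receptive_field([(3, 2), (3, 2), (3, 2)]))
```

Note how downsampling enlarges the receptive field much faster than stacking stride-1 layers, which is one reason aggressive downsampling suits large objects better than small ones.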

When considering datasets, one important factor is the size of the objects within the images. For datasets primarily composed of large objects, models with larger receptive fields are generally more suitable. This is because a larger receptive field can encompass the entirety of the object within its purview. This holistic view of the object allows the model to better understand the context and the relationships between different parts of the object. Such comprehension is critical when the task involves understanding the object as a whole, for instance, when discerning a bus from a car where the overall shape and structure matter.

On the other hand, if your dataset primarily features images populated with smaller objects, a model with a smaller receptive field might be more fitting. A smaller receptive field, by focusing on a more restricted region of the image, can concentrate on the fine details that might be critical in identifying or segmenting these smaller objects. The finer granularity in this case allows the model to better capture high-frequency information, which can be pivotal for tasks like detailed segmentation or precise object detection.

Therefore, the size of the receptive field plays a vital role in a model’s ability to perform its task efficiently and accurately. It should be carefully chosen and could even be adapted within the model based on the specific characteristics of the dataset.

Trade-offs in Model Complexity

It’s also crucial to consider the trade-offs. While models with larger receptive fields or added skip connections might perform better on a diverse object size distribution, they can also be more computationally intensive and may require more memory. Therefore, it’s important to weigh the potential performance benefits against the computational costs, especially when deploying models in resource-constrained environments.
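A back-of-the-envelope calculation can help when weighing these costs. The sketch below counts the parameters and multiply-accumulate operations (MACs) of a single standard 2D convolution. The layer sizes are hypothetical, chosen to resemble a head convolution on a 640×640 input, and real models contain many such layers plus activation memory that is not counted here.

```python
def conv_cost(in_channels, out_channels, kernel_size, out_height, out_width):
    """Parameters and MACs of one standard 2D convolution with bias."""
    weights = in_channels * out_channels * kernel_size * kernel_size
    params = weights + out_channels          # one bias per output channel
    macs = weights * out_height * out_width  # weights applied at every output position
    return params, macs


# Hypothetical 3x3 convolution, 256 -> 256 channels, on a 640x640 input.
params_fine, macs_fine = conv_cost(256, 256, 3, 80, 80)      # stride-8 feature map
params_coarse, macs_coarse = conv_cost(256, 256, 3, 20, 20)  # stride-32 feature map

# A skip connection merged by concatenation doubles the input channels.
params_concat, macs_concat = conv_cost(512, 256, 3, 80, 80)

print(macs_fine // macs_coarse)   # compute ratio between fine and coarse maps
print(macs_concat // macs_fine)   # compute ratio added by the concatenated skip
```

The same layer costs 16 times more compute on the fine map than on the coarse one, even though the parameter count is identical, and a concatenated skip connection roughly doubles both the parameters and the compute of the layer that consumes it.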

Examples

Let’s now turn to some specific examples that illustrate how the object size distribution in a dataset can influence your choice of model architecture.

Example 1: Satellite Imagery Dataset

Consider a satellite imagery dataset, where images generally contain a large number of very small objects, such as individual houses, vehicles, or even people seen from a bird’s eye view. The objects of interest are small relative to the overall image size. For such a dataset, a model architecture that discards high-frequency visual details, for example through aggressive downsampling with no path for carrying early features forward, will not be a good choice.

Instead, a model with smaller receptive fields, for example one that uses small kernels and fewer downsampling stages, could be more suitable. Depthwise separable convolutions can help keep such a model cheap, but they do not by themselves change the receptive field. The smaller receptive fields enable the model to capture the fine-grained details necessary to detect the tiny objects. For such a dataset you would also want to consider removing the detection heads at scales designed for larger objects. Finally, to ensure your model can detect the very small objects in your images, employ skip connections that carry high-frequency details from the early stages of the model through to the end.
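To see why coarse heads contribute little here, it helps to express an object’s size in feature-map cells rather than pixels. The sketch below assumes a hypothetical vehicle about 12 pixels across and a typical set of strides. Both numbers are illustrative and not taken from any specific dataset or model.

```python
def footprint_in_cells(object_size_px, stride):
    """Approximate side length of an object, in feature-map cells, at a given stride."""
    return object_size_px / stride


strides = (4, 8, 16, 32)
vehicle_px = 12  # hypothetical object size in pixels

footprints = {stride: footprint_in_cells(vehicle_px, stride) for stride in strides}
for stride, cells in footprints.items():
    print(f"stride {stride:>2}: {cells:.3f} cells")
```

At strides 16 and 32 the vehicle covers less than one cell, so a head at those levels has very little spatial evidence to work with, whereas at stride 4 it still spans three cells.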

Example 2: Surveillance Camera Dataset

Conversely, consider a surveillance camera dataset for pedestrian detection. The objects of interest here – the people – are comparatively larger and fill a significant portion of the image. For this type of dataset, a model architecture with a larger receptive field might be more beneficial. These architectures can capture a broader context and are capable of detecting larger objects effectively.

Additionally, for such a dataset you could likely do away with the skip connections whose main purpose is to preserve fine detail, as well as the detection heads at scales designed for smaller objects.

Example 3: Traffic Monitoring Dataset

Finally, think about a traffic monitoring dataset, where the objects of interest range from large trucks and buses to smaller cars, motorcycles, and pedestrians. Here, the object sizes in your dataset vary greatly, necessitating an architecture that can handle this diversity.

A suitable choice would leverage both large and small receptive fields to detect objects of varying sizes. It would also use skip connections to preserve high-resolution details from lower layers, while also retaining the strong semantic information from higher layers.

These examples underscore the idea that when choosing a model architecture for your object detection task, one key aspect to consider is the size of the objects in your dataset. Understanding this will help guide your selection towards an architecture that can most effectively detect and recognize these objects.